Auditory adaptations: from everyday listening to compensating for hearing loss

An interview with Professor Andrew King, University of Oxford, conducted by April Cashin-Garbutt

When you step out of a noisy restaurant onto a quiet street, you may notice a marked drop in the level of background noise. Such changes in our acoustic environment happen constantly throughout daily life, and our brains allow us to adapt to them appropriately.

Andrew King, Professor of Neurophysiology and Director of the Centre for Integrative Neuroscience in the Department of Physiology, Anatomy and Genetics at the University of Oxford, gave an SWC seminar outlining the neural circuits and strategies that underlie these adaptive processes. In this Q&A, he shares more about the highly dynamic way in which sounds are processed in the brain.

Why is it important for the brain to be able to process sounds in a dynamic way?

In the same way that the visual system is able to operate over a vast range of light levels, the auditory system can operate over a huge range of sound levels, from the faintest sounds to those that are painfully loud. To process this information efficiently and accurately, and to reliably encode what matters to the organism, individual neurons, and the listener as a whole, must be able to adapt and focus neural resources on the particular sounds that matter.

Furthermore, when we’re in a very noisy environment, the brain adapts to reduce the impact of the noise so that we can more readily perceive particular sounds, such as the words of someone we are trying to talk to across the table in a crowded bar or restaurant. Attention of course plays an important role in this, but pre-attentive adaptive mechanisms also help to reduce the effects of background noise.

How much is known about the adaptable properties of auditory neurons and how they deal with our constantly changing acoustic environments?

Several decades ago, Edgar Adrian, one of the very first people to record from neurons in the central nervous system, demonstrated that when a stimulus is presented for a short period of time, the rate at which the recorded neuron fires action potentials typically declines over the duration of the stimulus. This is the classical concept of adaptation.

Adaptation is important because we’re generally not interested in stimuli that are constantly present. For example, we don’t want to be continually aware that we’re sitting on a chair or wearing clothes. We are largely oblivious to those things, primarily because of adaptation.

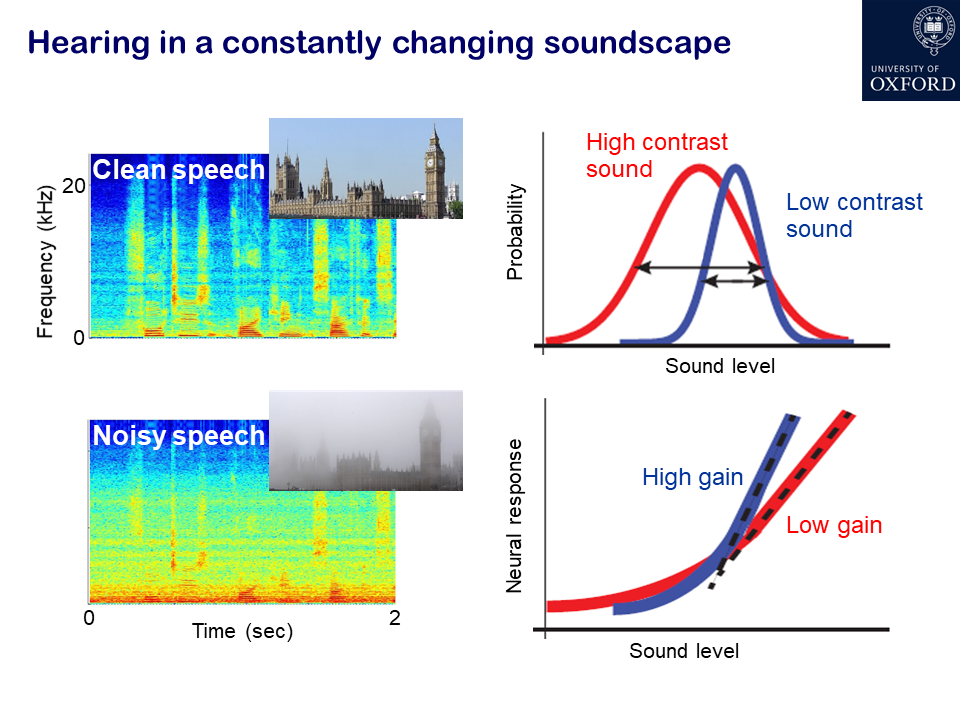

What has developed more recently is an understanding of how adaptation helps the brain to deal with the more realistic acoustic environments that we naturally encounter, whose statistics are more complex than those of a constant stimulus. One of the areas we work on in my lab is adaptation to sound statistics such as contrast. For example, a sound presented in silence is a high-contrast signal, whereas embedding the same sound in background noise reduces its contrast.

Just over 10 years ago, we showed that neurons in the auditory cortex of experimental animals continually adjust their sensitivity to compensate for changes in contrast. This helps to match each neuron’s dynamic range (the range of sound levels that can be encoded by a change in firing rate) to the current distribution of sound levels. Importantly, contrast adaptation helps to remove the effects of background noise. Other studies have since shown that this also happens in the human brain. Patients undergoing surgery for the treatment of epilepsy provide a unique opportunity to record neural activity at a single-cell level in the human brain. Because the surgeon has to know which part of the brain tissue to remove, electrode arrays are placed on the brains of these patients, and studies carried out with their consent have shown that noise adaptation enhances the cortical representation of speech.

We also look at how adaptation takes place in the auditory system over longer timespans. For example, what happens if there is a longer-term change in the inputs due to hearing loss? This is an extremely important issue, and one that is becoming increasingly significant as people live longer, because most of us will experience some level of hearing impairment as we get older. More than 25% of people aged 60 or over have their lives affected to varying degrees by hearing loss.

Recently, a fairly compelling link has been established between hearing loss and dementia, so it is really important to understand what changes take place in the brain following hearing loss and whether and how they might be related to dementia. We are trying to understand whether the brain can adapt to reduced or abnormal acoustic input in ways that help to recover function. It is well known, for example, that someone who receives a cochlear implant (the most successful neuroprosthetic device) will initially struggle to make sense of their newly restored hearing, but typically improves over time as they learn to interpret the highly distorted signals provided by the implant as speech. That’s a manifestation of the plasticity of the brain and its capacity to adapt to abnormal inputs.

What have been your key findings so far across the timespan of adaptation to inputs?

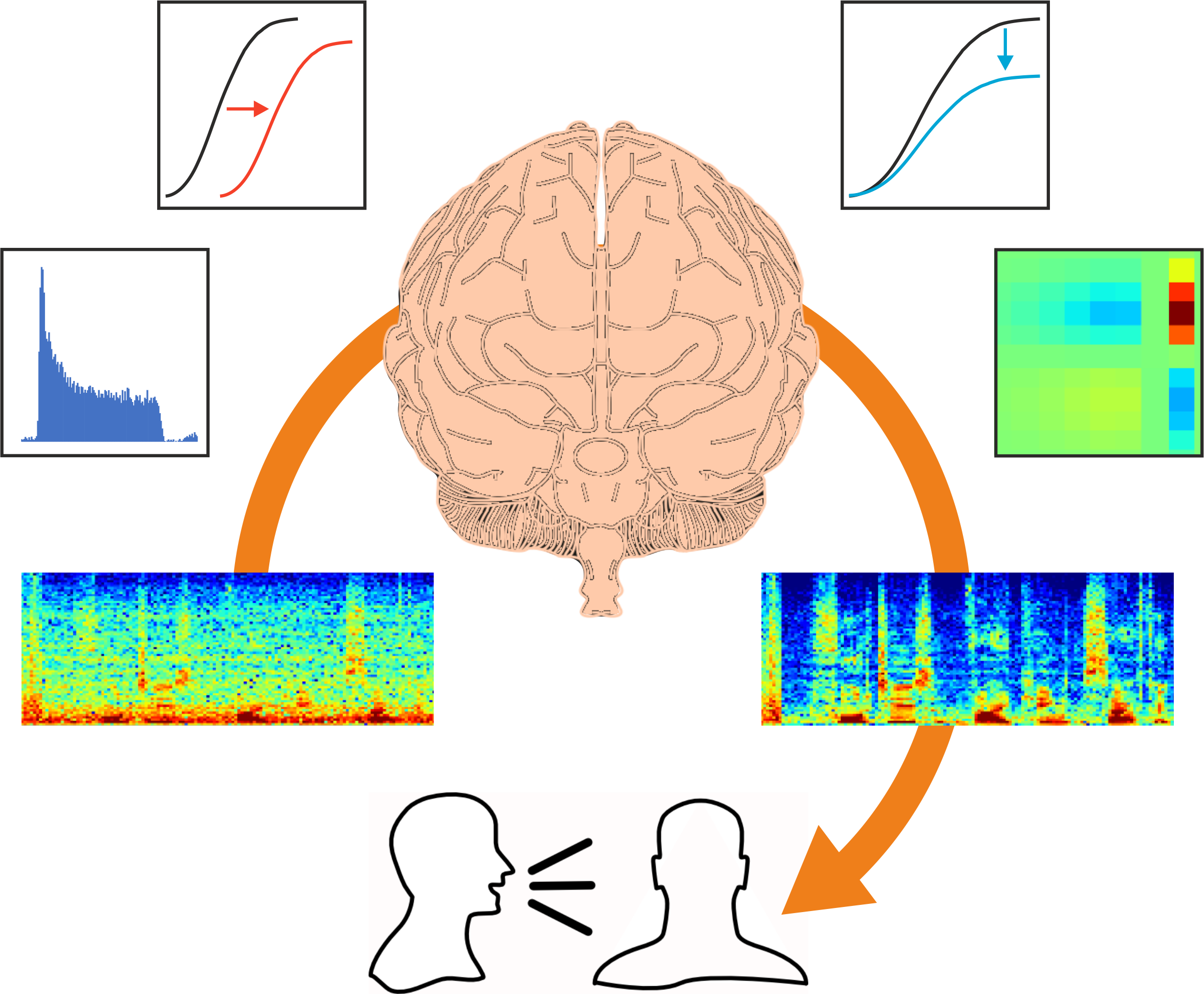

Firstly, looking at short-term adaptation to changes in sound statistics, we discovered that neurons in the auditory cortex rapidly adapt to changes in sound contrast, turning the gain of their responses up when contrast is low and down when it is high. This process helps the brain to construct representations of natural sounds, such as speech, that are robust to noise.
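To make the idea of contrast gain control a little more concrete, here is a minimal Python sketch of a toy neuron. It is an illustration under stated assumptions, not the lab's model: contrast is taken to be the standard deviation of recent sound levels in dB, the neuron has a sigmoidal rate-level function centred on the mean level, and its gain is set inversely proportional to contrast. The names `adapt` and `firing_rate`, the constant `k` and the maximum rate `R_MAX` are all hypothetical.

```python
import math
from statistics import mean, pstdev

R_MAX = 50.0  # hypothetical maximum firing rate of the toy neuron (spikes/s)


def adapt(levels_db, k=1.0):
    """Return (midpoint, gain) for a toy neuron adapted to recent sound levels.

    Assumptions (illustrative only): contrast is the standard deviation of the
    sound levels in dB, the response curve is centred on the mean level, and
    gain is inversely proportional to contrast, scaled by a hypothetical k.
    """
    return mean(levels_db), k / pstdev(levels_db)


def firing_rate(level_db, midpoint, gain):
    """Sigmoidal rate-level function of the toy neuron."""
    return R_MAX / (1.0 + math.exp(-gain * (level_db - midpoint)))


low_contrast = [54, 57, 60, 63, 66]   # levels clustered around 60 dB
high_contrast = [36, 48, 60, 72, 84]  # same mean level, four times the spread

for name, levels in [("low", low_contrast), ("high", high_contrast)]:
    mid, g = adapt(levels)
    one_sd_above = mid + pstdev(levels)  # a level one standard deviation above the mean
    print(name, "gain:", round(g, 3), "rate:", round(firing_rate(one_sd_above, mid, g), 2))
```

Because the gain in this sketch scales with 1/contrast, the neuron's gain is four times higher in the low-contrast environment, yet a level one standard deviation above the mean drives the same firing rate in both. In other words, the toy neuron's dynamic range stretches or compresses to fit the current distribution of sound levels.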

We looked into the neural circuitry involved by also recording from the auditory midbrain and thalamus, and found that contrast adaptation builds up along the auditory pathway, with the most noise-tolerant sound encoding occurring in the auditory cortex.

Another important aspect of our acoustic environments is reverberation – the many delayed versions of the original sound that result from reflections off the walls and other hard surfaces in a room. Reverberation varies according to the type and size of the room you are in and distorts the sounds reaching our ears. Normally, the brain deals very well with this – we only really notice the echoes in a large and highly reverberant space like a cathedral – but reverberation can be more of a problem for hearing-impaired people. It is not well understood how the brain deals with it.

A recent discovery in our lab is that neurons in the auditory cortex adapt to reverberation by adjusting their inhibitory response properties as the size of the room changes. By simulating virtual rooms of different sizes over headphones and recording from neurons in the auditory cortex, we found that the time course of the inhibitory components of the neurons’ receptive fields lengthens in a larger room, where reverberation lasts longer, and shortens in a smaller room.

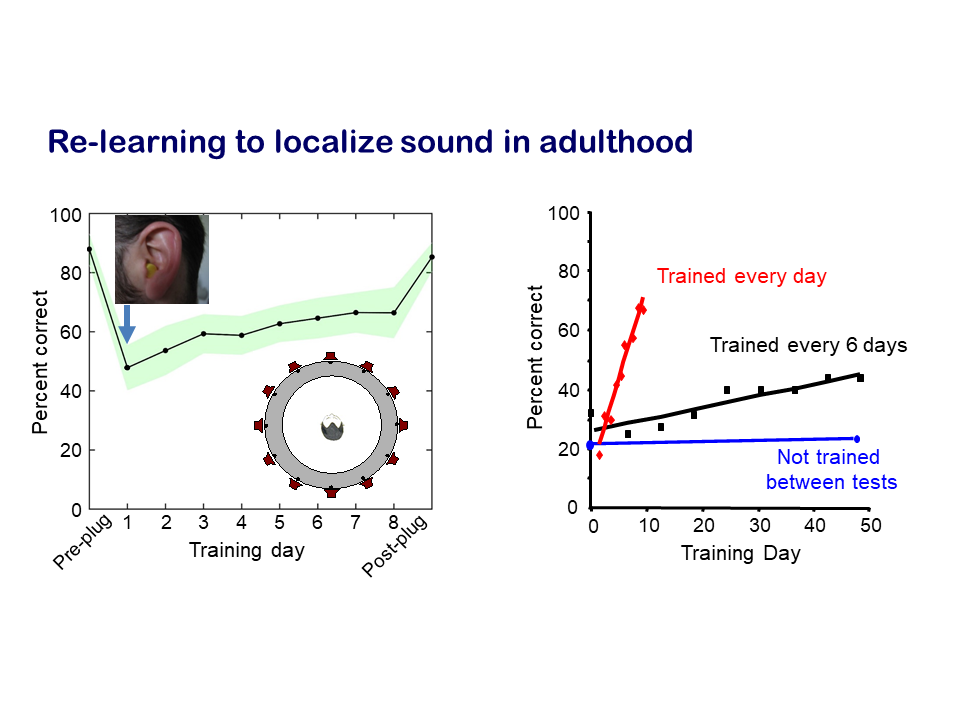

Our research on plasticity resulting from more persistent changes in input due to hearing impairment focuses on the capacity of the auditory system to compensate for loss of hearing in one ear. We measured how well human listeners and experimental animals are able to localise sound, which relies principally on comparing the sounds at the two ears, and showed that reduced input due to hearing loss in one ear greatly impairs localisation accuracy. With training, however, the brain is able to learn to compensate for the imbalance in inputs between the two ears. This adaptation happens quite quickly, over a matter of days, and relies on training in the sound localisation task.

We then used the animal models to look at what was happening in the brain. Once again, the auditory cortex appears to play a crucial role, in this case in learning to localise sounds accurately in the presence of a temporary hearing loss in one ear. In addition to the many subcortical levels of processing that convey auditory information from the ear to the cortex, there are also massive descending projections that are poorly understood; for example, the cortex modulates activity in the auditory thalamus, midbrain and brainstem. We showed that descending projections from the cortex to the midbrain are critical for the capacity to adapt to hearing loss in one ear.

That resonates with a completely separate series of studies, carried out mainly in the US, suggesting that learning to play a musical instrument or to sing can promote the development of speech perception, particularly in the presence of noise. This is also thought to involve descending signals from the cortex to the brainstem, which improve the representation of speech sounds. So there seems to be a nice parallel between the benefits of musical training for speech perception, particularly for children in a noisy classroom, and our experimental studies of the role of training in helping the brain adjust to hearing loss. In both cases, descending projections in the auditory system seem to be important.

What implications do these findings have?

Firstly, from a neuroscience perspective, these findings are adding to our knowledge of how hearing works. Secondly, we are addressing questions that are highly relevant to individuals with hearing impairment.

The World Health Organization has highlighted that in a few years’ time, hearing loss will be one of the top 10 disease/disability burdens in terms of its socioeconomic impact. People with hearing loss are more likely to become isolated and less likely to interact with others, and this is probably at least part of the explanation for the link with dementia.

Our work addresses how the brain adjusts to hearing loss, specifically hearing loss in one ear. But the more we can learn about the remarkable adaptive capabilities of the auditory system in general, the better we will be able to understand what goes wrong as a result of hearing loss.

As I mentioned before, people with a hearing impairment struggle in a noisy, echoey environment, whereas that’s less true of those with normal hearing. By understanding the neural mechanisms that enable the brain to deal with these changes in statistics, we can get an insight into what might be going wrong in individuals with hearing impairments, and then potentially replicate those mechanisms in speech recognition devices or in prosthetic devices that are used for restoring hearing.

What’s the next piece of the puzzle your research is focusing on?

We are focusing on a broad range of questions using both experimental and computational approaches to study auditory function. In the case of our recent work on cortical adaptation to reverberation, we want to understand the neural circuitry that allows the inhibitory components of neurons’ receptive fields to be dynamically adjusted as the room statistics change. We also need to find out whether this form of adaptation emerges at the level of the auditory cortex or is already present subcortically.

We don’t just look at the auditory system in isolation. As we live in a rich multisensory world, we also investigate how our hearing is affected by other senses, such as what we see and what we touch, as well as by motor-related signals. That’s an area that is adding to this general body of work on the context-dependence of auditory processing.

In terms of the hearing loss studies, we found that the brain adapts to hearing loss in one ear by adjusting the relative contributions of the different spatial cues available to localise sounds. Localisation of sound in the horizontal plane relies primarily on the detection of tiny differences in the timing and intensity of sounds between the two ears. But the outer ear, the visible part of the ear, also contributes to sound localisation by filtering the incoming sounds to produce what are known as spectral shape cues. When the brain adapts to hearing loss in one ear, the spectral cues are up-weighted, meaning the brain learns to rely more on these cues. We want to understand how that happens at a neuronal level and particularly whether neurons “multiplex” these cues so that their weighting can be altered dynamically according to the prevailing hearing conditions.

Finally, if we use animal models to better understand what happens in the brain, it is essential to show that the findings are relevant to humans. This is why we run parallel studies of the capacity of human listeners to adjust to temporary hearing loss in one ear. So far, however, this work has been carried out under laboratory conditions, where subjects sit in a soundproof room, are presented with sounds from different locations and are asked where they think each sound came from. If this research is to be translationally relevant, it is really important to show that the same type of plasticity takes place in the noisier, more reverberant environments that we naturally encounter. We are therefore testing whether our findings generalise to these more naturalistic hearing conditions. Our hope is that this will eventually lead to training paradigms that are easy to implement for individuals with hearing impairment.

About Professor Andrew King

Andrew King is a Wellcome Principal Research Fellow, Professor of Neurophysiology and Director of the Centre for Integrative Neuroscience in the Department of Physiology, Anatomy and Genetics at the University of Oxford. He studied physiology at King’s College London and obtained his PhD from the MRC National Institute for Medical Research. Apart from a spell as a visiting scientist at the Eye Research Institute in Boston, he has worked at the University of Oxford ever since, where his research has been supported by fellowships from the Science and Engineering Research Council, the Lister Institute of Preventive Medicine and the Wellcome Trust.

Andrew’s research uses a combination of experimental and computational approaches to investigate how the auditory brain adapts to the rapidly changing statistics that characterise real-life soundscapes, integrates other sensory and motor-related signals, and learns to compensate for the altered inputs resulting from hearing impairments. He is a winner of the Wellcome Prize in Physiology, a Fellow of the Royal Society, the Academy of Medical Sciences and the Physiological Society, and is a Senior Editor at eLife. He is also the Director of the graduate training programme in neuroscience at the University of Oxford.