Cooling the cortex to understand vocal communication

An interview with Professor Michael Long, New York University, conducted by Hyewon Kim

With more in-person social interactions returning to our daily routine, the way we have been communicating is receiving increasing attention. Professor Michael Long of New York University has been studying vocal communication in songbirds and is now expanding the scope of his research to interactive vocal communication in singing mice and humans. In a recent SWC seminar, Prof Long delved into his research methodology and his proposed model of how the brain accomplishes this complex behaviour. In this Q&A, he describes the parallels between vocal communication in the songbird, singing mouse, and human, as well as his use of cortical cooling to understand the underlying brain mechanisms.

At the beginning of your talk, you mentioned how the pandemic has, to a certain extent, influenced our ability to socialise. What is your take on how the lockdown may have affected our communication abilities in this period of transition back to normal?

I think the lockdown has strongly affected our ability to communicate. What I’ve learned in studying both human communication and interactive vocal behaviours across other animals is that we have evolved to be extremely good at sharing information with other people. We use all kinds of cues to get information from our conversational partners, from how they are talking to what gestures they are using. Many of those cues are unfortunately lost as we enter a two-dimensional world. That makes the back-and-forth of conversation much more laboured and less seamless, and it can be exhausting. We have all taken part in a natural experiment: pretending that online meetings allow for a real conversation, and realising that something is missing.

What we are studying in my lab is how the brain can allow for this very fast back-and-forth by planning and preparing our answers – having them ready at the forefront to exchange, almost like hitting a tennis ball back-and-forth.

Why is vocal communication so important in social animals?

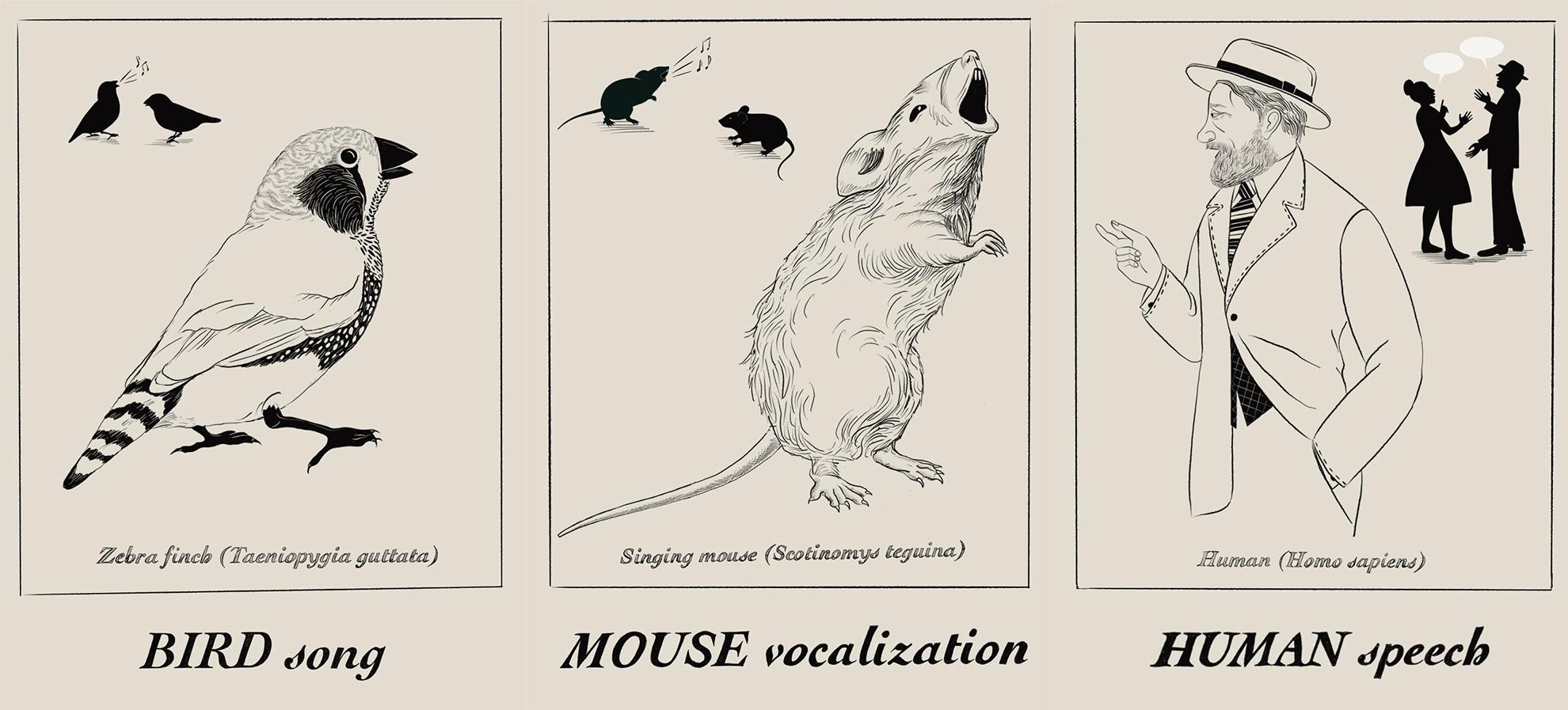

Vocal communication can convey information efficiently and in specific contexts. We study songbirds that have a courtship song that the male can present to the female as part of a mating process. We also study Costa Rican singing rodents – we’re still trying to understand what their singing means, but it’s clearly about territory and can be a mark of aggression as well.

It’s deeply important to me for a few reasons. First, 15% of our population suffers from a communication disorder, ranging from hearing deficits to any one of the many disorders that can affect speech: apraxia, stroke-related damage, developmental disorders, and autism. I have one relative who had a stroke and lost his ability to speak, and another relative who is autistic. In these cases, it can be a major barrier, and a source of frustration not only for them but for everyone around them, including their caregivers.

Second, even though we understand linguistics and language in isolation, we know very little about how language is actually used to convey information. That is why we study communication: I think it’s very relevant in these cases where that ability is stolen away, which causes a major problem.

How much is known about the parallels between bird song, mouse vocalisation, and human speech?

We have just written an article in the Annual Review of Neuroscience, where we describe those parallels in fine detail. But in broad strokes, I’d say the bird and the singing mouse are almost non-overlapping in what they do well. The zebra finch – our favourite songbird – can learn a single vocalisation. Males learn from their father: they vocally imitate what he sings, memorise that song, and practise a ton to try to get it right. It’s a wonderful model of vocal imitation, in which the bird fine-tunes all the little tricks needed to turn that song into a perfect copy.

Image credit: Julia Kuhl

The singing mouse is different. If you look at the context of what the bird does, the male sings to the female, there’s mating, and that’s it. It’s not much of a conversation. But the singing mouse is a constantly conversing animal. We’re very excited to study female-to-female as well as male-to-male vocal interactions, where there is some richness. For now, though, we’ve looked primarily at male-to-male vocal interactions, where a singing mouse will perform his song, which is about 10 seconds long, and within a fraction of a second, another mouse will take up that conversation and answer the call. So it serves as an incredible model for what is referred to as counter-singing. In some sense, the bird is a wonderful model for speech because of its ability to vocally imitate. And the singing mouse is a wonderful model as well, thanks to its back-and-forth use of song.

In work that I didn’t get a chance to mention in my talk, we have also been studying singing in parrots, which actually do both: they have interactive vocalisation as well as very elaborate imitation, and they can learn hundreds of human words. This is unexplored territory.

Why has it been a challenge to understand the neural mechanisms underlying coordinated vocal exchanges?

A major challenge for studying coordinated vocal exchanges is that we don’t have great models for this. The most used model in neuroscience – Mus musculus, the house mouse – can produce ultrasonic vocalisations, and there are research groups that have done fantastic work looking into the mechanisms of how those vocalisations are produced and perceived. But unfortunately, it doesn’t appear that those vocalisations are really used in an interactive way. Typically, the animal will produce these kinds of ultrasonic vocalisations in response to stimuli, such as the odour of a female or a state of heightened vigilance, but they don’t have that clear conversational exchange. So you have an animal for which wonderful tools have been developed, but the behaviour is limiting in this case.

In response to this, we decided to work with Steven Phelps at the University of Texas at Austin, who has travelled to Costa Rica every year to collect singing mice. At this point, we have created a colony, even bigger than Steven’s, that lives right here in New York City. They produce wonderful songs. In a sense, the ability to marshal a variety of tools for understanding neural circuits, connections, and dynamics has transformed our field. But we still lacked the behaviour of interest.

So another challenge is that, now that we have a brand-new animal, all the things we’d taken for granted in the house mouse are not there for the singing mouse: an atlas, and different transgenic lines that allow us to pick apart, at the cell-type level, how these kinds of behaviours work. These tools allow us to figure out how creatures do what they do. My stance is that studying behaviour should always come first in answering this kind of question.

How does your research try to further understand the brain processes involved in interactive vocal behaviours in songbirds and rodents?

For the interactive element, we’re pushing towards studying singing mice and parrots, which have rich interactions. Songbirds do interact vocally, but they do so with a very simple, unlearned call or vocalisation. In terms of understanding the neural mechanisms of this interaction, songbirds are far enough from us evolutionarily that I can’t imagine that the same neural mechanisms are at play in the songbird versus the human. But, what we can understand is how at the algorithmic level these problems can be solved by the brain. And if the human solves it in a different way, at least we have a better idea of how to get at that solution. We can start to build better therapeutic interventions in the case of these communication disorders.

Studying humans has been one of the most thrilling and frustrating experiences of my life. With a creature that has nearly 100 billion neurons in its brain, how do you start to understand the complexity of what’s going on? Our work with humans has been trying to push beyond the boundaries of what the pioneers have done only in the last decade. Eddie Chang and others have looked, on one hand, at the sensory side (how we perceive voice and speech), and on the other hand, at the motor side (how we produce syllables and speech).

There is a middle piece too, which is how we plan what we are saying: the more cognitive side that we think is critical for interactive vocalisations. This hasn’t been looked at, so this is what we’d like to do and where we’re trying to go as a group – not only to see how different, simpler animals solve this problem, but also how humans do it.

What have been your key findings so far? Were you surprised to find that when you cooled the orofacial motor cortex (OMC), only the song duration and not the note duration was slowed?

What I liked about cooling is that it’s the lowest-tech method I could come up with, in a sense. There are so many revolutionary ways to change neural activity at a cell-type-specific level – with light, a ligand, pharmacogenetics – manipulating neural circuits with a high degree of precision. Although many of these optogenetic tricks and high-end emerging technologies have the benefit of precision, they often break behaviour. If you turn a cell type on or off, behaviour is often one of the first casualties, and that ends up clouding interpretation. For many different areas, turning them on or off causes gross changes in behaviour.

Cooling, on the other hand, leaves the spikes alive. It doesn’t cool all the way down to the point where spikes are eliminated. Instead, it takes that complex, beautiful series of spikes that unfolds in time and stretches its timing linearly. It’s a way of seeing what an area of the brain is doing within the scope of a given behaviour.

We did it first in songbirds over a decade ago, and we could make birds sing in slow motion. Everything changed – the song, individual notes – every little piece swelled up as if we had recorded the bird and played it back at a slower speed. Interestingly, although we slowed it down, the pitch wasn’t changed. This tells us that pitch is determined elsewhere, in the more immediate motor circuits and the vocal organ.

In the singing mouse, we see a clear dissociation between the length of the song and how the notes are created. We interpret that as a kind of handshake, or teamwork, between the neocortex and the brainstem. The neocortex drives the length of the song and how fast it goes, and how it stops and starts (because the cortex is trying to get along, or coordinate, with the other animal). The brainstem drives the length of the notes themselves. We’re not really cooling the brainstem, because cooling the surface does a great job of selectively affecting the cortex while leaving anything beneath the cortex pretty much unaffected. That is how we could see this beautiful dissociation.

In the human, we used cooling as well, and saw a clear dissociation there too. When we cooled one area of the brain, we saw changes in timing, just as we saw in the songbird, and when we cooled other parts of the brain, we saw changes in quality. Cooling as a tool allows us to bring out changes that we wouldn’t be able to see using other tools.

The beauty of cooling is that it is unlike other methods, which typically involve expressing an actuator protein, either with a virus or by electroporation, and this changes the brain itself. Surface cooling is different. We have done a retrospective study on 50 or so patients, and none of them suffered any adverse side effects at the level of behaviour or brain function in the scans that we’ve done. We didn’t anticipate that it would cause any, because it’s a small amount of cooling, administered to the surface with a sterile probe. It has proven to be a very safe and effective method.

How do your findings relate to emerging human studies on cortical areas relevant for speech?

Interestingly, cooling was a great demonstration that brought us together with our present collaborators. Initially, we looked at these larger brain areas and what they’re doing while we manipulate them. There are reasons to revisit these behavioural studies. But I think once we have established what these areas are doing, we want to start digging deeper and find out what’s going on electrophysiologically.

So we’ve shifted a bit from cooling to electrophysiological recordings, and we are now focusing on electrocorticography, which involves placing metal sensors directly on the surface of the brain. This offers relatively good temporal and spatial precision, and it is done in patient volunteers who have either tumours or epilepsy. We record activity by looking primarily at the high gamma band, which is roughly equivalent to multi-unit firing across a large swath of cortex.

What we’re very excited about is developing methods that will allow us to record individual units and groups of cells. It’s not enough to know that there is a lot of activity in an area; we want to see the nature of that activity at the level of the neural circuit. That’s the dream. We’re pushing toward it by developing new methods for recording those circuits.

What’s the next piece of the puzzle your research is focusing on?

Earlier this year, we published a finding that revealed a new functional structure in the brain that we are calling the interactive planning hub. This is an area that lights up not when I’m hearing my conversational partner talk, and not when I’m talking myself, but selectively when I’m planning what I’m about to say in the service of conversation.

It was exciting to harness the temporal and spatial precision of electrocorticography in this way – the brain area emerged not only in the context of very rigorously defined, laboratory-based, structured tasks, but also in free conversation. You could talk to a patient and ask them about a football game that they watched on television, what they had for lunch, states they visited – and in these totally free and unstructured conversations, we can see planning happening in real-time in their brain. It’s as if we’re reading their minds! It’s completely fascinating, to be able to go into that linguistic operation and see what the neurons are doing. This is tremendously exciting and the direction in which we’d like to go.

You briefly talked about humour and timing in your talk. What is your take on their relationship?

I don’t formally study humour at all, but I love comedy and stand-up comedians. It’s such a difficult thing to do – to say something and have the words provoke a consistent emotional reaction, so much so that the audience pays money to have that emotional reaction consistently over an hour and a half! I’ve heard people refer to humour as a shared secret: something that you’ve noticed and that somebody else points out. That shared insight is what makes it funny; Jerry Seinfeld is a good example of this kind of observational humour.

Timing is critical in humour. You can see cases where the same joke is delivered with precise versus loose timing, with very different emotional effects. Humour is also about expectation. There is a large literature about why we can’t tickle ourselves. If a feather is moving in an unexpected direction, that can be incredibly ticklish, but if you know exactly where that feather is going to be, it’s not as ticklish. On a cognitive level, I think humour is similar. It’s something that runs in a different direction from our expectation, and that departure is what can provoke a reaction. All in all, humour is an exciting subject to study.

About Professor Michael Long

Michael Long is the Thomas and Susanne Murphy Professor of Neuroscience at the NYU School of Medicine. He completed his graduate studies with Barry Connors at Brown University where he investigated the role of electrical synapses in the mammalian brain. During his postdoctoral work with Michale Fee at MIT, Long began to study the songbird model system to uncover the cellular and network properties that give rise to learned vocal sequences. Since beginning his laboratory in 2010, Long has focused his attention on the neural circuits underlying skilled movements, often in the service of vocal interactions. To accomplish this, the Long lab has taken a comparative approach, examining relevant mechanisms in the songbird, a newly characterized neotropical rodent, and humans. In addition to federal funding, the Long lab has also received support from NYSCF, the Rita Allen Foundation, the Klingenstein Foundation, and the Herschel-Weill Foundation.