Understanding the neural basis of visual memory

An interview with Associate Professor Nicole Rust conducted by April Cashin-Garbutt, MA (Cantab)

Nicole Rust, an Associate Professor in the Department of Psychology and a member of the Computational Neuroscience Initiative at the University of Pennsylvania, recently gave a talk to members of the Gatsby Computational Neuroscience Unit and Sainsbury Wellcome Centre on how the brain signals whether we’ve encountered specific objects or scenes before. I caught up with Nicole to find out more about her research.

How much is currently known about the way in which our brains signal whether we have encountered specific objects or scenes before? Have all the brain regions involved been identified?

There is a long history in studying the answer to the question: have you seen this before? To summarise, psychologists have found evidence for two different processes in the brain. An effective analogy for these two processes, and something we have probably all experienced, is when you are walking down the street and you see someone you know, but for a few moments you don’t remember where you know them from.

The first process, the sense of remembering that you know the person, is called familiarity and those memories are thought to reside in perirhinal cortex in the human brain. The second process, where you remember how you know the person, is called recollection and that is thought to reside in the hippocampus.

It is believed that when you are asked whether you have seen a certain image before, you bring together both these types of memory to help you answer the question.

What are the main techniques you use to test hypotheses about how our visual memory percepts vary when we have seen certain objects before?

There are three primary techniques that we use to study visual memory. Firstly, we study human behaviour: how well people remember pictures, how long they remember them for, with what detail they remember them and so forth. This is really important to us as we are interested in investigating how our own brains work.

Secondly, we use animal models to record signals from the brain at the level of detail we need to understand visual memory, which involves recording from individual neurons as animals are performing visual memory tasks. We also use optogenetics to perturb neural circuits to look at causality.

Finally, we use computational modelling to pull together all the data.

Please can you describe your research on memory and vision? How good are our brains thought to be at remembering whether we’ve seen things before?

I come at studying memory from studying vision and I think of the problem of vision as the problem of what are you looking at. To solve the question of memory, you need to figure out what you are looking at and then work out whether you have ever seen that before.

We are trying to find the signal that correlates with your percept, or your feeling, of remembering. Just because we find that signal in a brain area, doesn’t mean the memories are stored in that region. This is a really important distinction.

The brain area that is the focus of the most recent memory work in my lab is a high level visual area called inferior temporal (IT) cortex, which is a brain area that we know to be involved in recognising objects. Classic psychology experiments focused on memory processing do not really predict this region to be involved in the act of remembering.

It was really human behavioural experiments that inspired us to look at the IT cortex. The experiments involved showing people thousands of photographs, one at a time, over hours and then at the end showing the subject two pictures side by side and asking, ‘Which one did you see?’

Humans are really good at this, and choose the correct photo over 90% of the time. Remarkably, we can do this well even when the pictures are very similar, for example the same object pictured on the same background but with a little configurational change.

One example is a picture of bread in a bread box, where in one picture the bread is at the front of the bread box and in the other it is at the back. People are still really good at remembering which of those two pictures they saw and one can conclude from this that we are storing memories in our brains with a lot of visual detail. We are not just storing information about the objects we’ve seen, for example remembering that we saw bread, but we are storing almost as much detail as contained in a photograph.

Visual memory is a form of learning that happens after single exposures to an image. It is known to happen with massive capacity, such that one can remember having seen a single image viewed in a sequence of thousands and thousands of images. Nobody has found the capacity limit for visual memory, and even after viewing 10,000 images, there is no indication of a ceiling for remembering pictures. In these experiments, people view each image for 2-3 seconds and the memories last for hours and days, and thus this is a form of long-term memory.

These experiments inspired us to look at visual cortex and see if it is somehow involved in visual memory, perhaps not in memory storage, but in memory signalling. Our experiments support the hypothesis that IT contains a signal that correlates with behavioural reports of remembering and forgetting pictures.

Does the ability to remember differ by the type of object?

There has been a considerable amount of work on this question and the loose rule of thumb is that the more compelling an image is, the more likely you are to remember it. There are a lot of things that can impact memorability. For example, when a compelling caption such as a joke is added to an image, you are more likely to remember the image than if it just has a boring caption.

One of my favourite studies is one in which the investigators took blobs of paint and compared blobs that resembled faces with those that didn’t and they found you are more likely to remember blobs of paint that resemble objects.

So it seems as if we can systematically predict which images we will remember and which ones we won’t. Things that look visually similar and are abstract, such as different leaf patterns, are very difficult to remember.

How much is currently known about prosopagnosia (face blindness)?

Prosopagnosia is a well-documented phenomenon where people cannot remember faces. For example, prosopagnosiacs can’t remember the face of their mother, even though they are looking at her face. The neural correlate of prosopagnosia, as far as I know, is currently completely unknown.

What is thought to be happening in the brain when people experience déjà vu?

Very little is known about what is happening in the brain when people experience déjà vu. Interestingly, patients with epilepsy sometimes experience déjà vu before a seizure. It is thought that epilepsy starts at a focal point and then spreads. One of the places epilepsy tends to reside is in the medial temporal lobe, which is involved in memory.

Part of the problem is that déjà vu is very hard to study because it doesn’t happen very often. To study it you would need to induce it somehow, and so far no one has figured out a reliable way to trigger déjà vu.

What do you think the future holds for understanding the neural basis of visual memory?

I think it is a hugely exciting time, with the emergence of all of these technologies, many of which are happening here at UCL and across the globe. Our ability to record from large swaths of the brain simultaneously, through technologies such as Neuropixels, coupled with our ability to manipulate that activity causally using techniques such as optogenetics, is very powerful.

I am very excited about the implications of a recent series of experiments, called the ‘engram experiments’, for our ability to understand visual memory. These experiments have been replicated in a number of different labs, and were significantly advanced by Susumu Tonegawa’s lab at MIT with the development of new technologies designed to tag the specific subpopulation of neurons that are activated during memory storage. Later, those neurons can then be causally perturbed to test whether their activation has the same effect as normal memory recall.

The primary challenge to studying memory is that we may believe that a structure, such as the hippocampus, is involved in memory storage, but we can’t causally test this by activation of the entire hippocampus to see if a particular memory comes back! Rather, there is good reason to think that a particular subset of neurons is responsible for storing any particular memory and that memory recall involves the activation of that particular pattern.

The Tonegawa experiments focused on a very basic form of memory that involved the association between an auditory stimulus and a physical event. They found that reactivation of the neurons that were activated during memory storage replicated the same behaviour observed during normal memory recollection. This is the strongest test thus far of our hypotheses about how memories are stored. I believe that the same type of approach can be used to test visual memory as well.

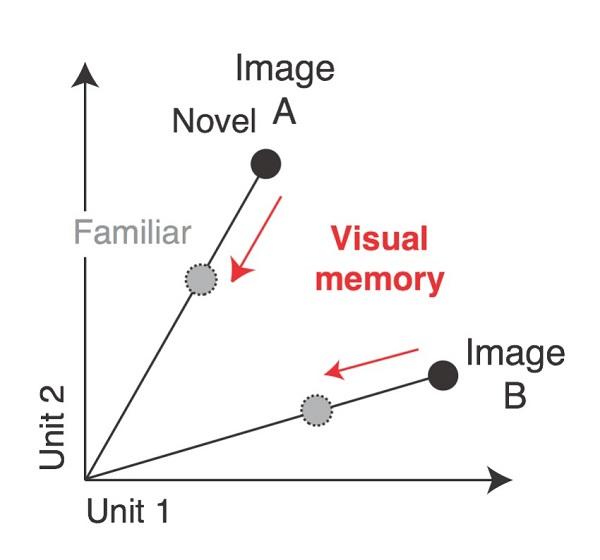

Our specific hypothesis is that visual memories are signalled by reducing the firing rate of responses of neurons, such that during the first exposure to an image, specific neurons in IT cortex respond with high firing rates and upon repeated exposure of the same image, firing rates of those neurons are reduced. In other words, the feeling of familiarity is the reduction of firing rates relative to their levels for the novel image. Now that we’ve found that memory correlates with a reduction in firing rate, the next steps involve causally testing this hypothesis.
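As a rough illustration of this hypothesis, and not the analysis used in the lab's study, the idea can be sketched as a simple readout: compare the average firing rate of a population of neurons against its level for novel images, and report "seen before" when the rate has dropped by enough. The function names, the firing rates and the threshold below are all hypothetical values chosen for the example.

```python
from statistics import mean


def familiarity_signal(novel_rates, repeat_rates):
    """Mean drop in firing rate (spikes/s) from first to repeated viewing.

    Each list holds one value per neuron, in the same neuron order.
    """
    if len(novel_rates) != len(repeat_rates):
        raise ValueError("both lists must cover the same neurons")
    return mean(n - r for n, r in zip(novel_rates, repeat_rates))


def judge_familiar(population_rates, novel_baseline, threshold):
    """Report 'seen before' when the population mean rate sits at least
    `threshold` spikes/s below the baseline measured for novel images."""
    return (novel_baseline - mean(population_rates)) >= threshold


# Hypothetical responses of four neurons to the same image (made-up numbers).
novel = [40.0, 32.0, 25.0, 51.0]   # first exposure
repeat = [34.0, 29.0, 22.0, 45.0]  # second exposure

baseline = mean(novel)
print(familiarity_signal(novel, repeat))
print(judge_familiar(repeat, baseline, threshold=2.0))
print(judge_familiar(novel, baseline, threshold=2.0))
```

In this toy version the readout is a single threshold on a population average. A causal test of the hypothesis, as described above, would go further and ask whether artificially lowering those firing rates changes the behavioural report.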

Where can readers find further information?

Meyer T, Rust NC (in press) Single-exposure visual familiarity judgments are reflected in IT cortex. eLife. Preprint: bioRxiv 197764; doi: https://doi.org/10.1101/197764.

About Associate Professor Nicole Rust

Nicole Rust is an Associate Professor in the Department of Psychology and a member of the Computational Neuroscience Initiative at the University of Pennsylvania. She received her Ph.D. in neuroscience from New York University, and trained as a postdoctoral researcher at Massachusetts Institute of Technology before joining the faculty at Penn in 2009.

Research in her laboratory is focused on understanding the neural basis of visual memory, including how we remember the objects and scenes that we have encountered, even after viewing thousands, each for only a few seconds. To understand visual memory, her lab employs a number of different approaches, including investigations of human and animal visual memory behaviors, measurements and manipulations of neural activity, and computational modeling.

She has received a number of awards for both research and teaching including a McKnight Scholar award, an NSF CAREER award, an Alfred P. Sloan Fellowship, and the Charles Ludwig Distinguished teaching award. Her research is currently funded by the National Eye Institute at the National Institutes of Health, the National Science Foundation, and the Simons Collaboration on the Global Brain.