Jérôme Lecoq presents technical developments in neuronal recordings and the future of open brain observatories

Jérôme Lecoq, Ph.D., Senior Manager of Optical Physiology at the Allen Institute, presented large-scale calcium imaging and standardized, open brain observatories during a recent seminar at the Sainsbury Wellcome Centre.

Jérôme began by outlining the rationale behind high-throughput calcium imaging for large-scale neuronal recordings, before discussing standardized and reproducible data generation. He then presented a visual coding dataset and concluded with a discussion of the future of the Allen Brain Observatory.

Rationale

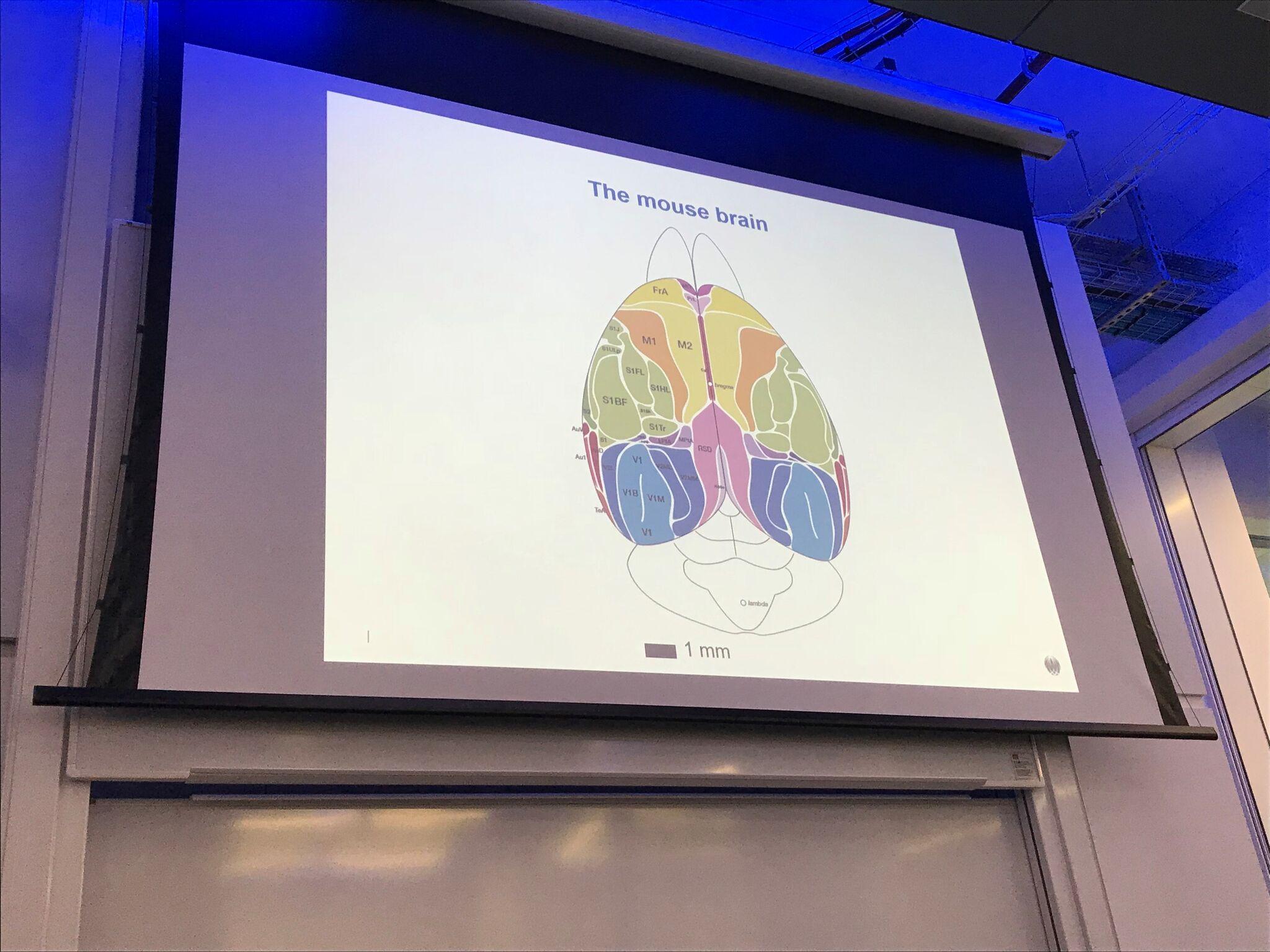

The near-term goal of the Allen Institute is simple: to understand how sensory information is represented and processed in the cerebral cortex. At the Institute, researchers work on the mouse brain and record from multiple brain areas to see how those areas interact, with the aim of understanding behaviours.

Over the past decade or so, Allen Institute teams have generated a number of datasets from the mouse brain that have allowed them to build complementary models of how brain systems work. The caveat, Jérôme explained, is that if you want to build models of the visual cortex, you have to understand how the components you have characterised work in vivo.

High-throughput calcium imaging for large-scale neuronal recordings

Jérôme described how, when he started his postdoc, he used two-photon calcium imaging in a single field of view, which meant that he was missing a large amount of information outside this field and in connected areas. To address part of this problem, they designed a technical path for recording from two areas at the same time, which involved miniaturised optical lenses that fit the scale of the mouse brain much better.

They used this technology to show that they could record from two areas, V1 and LM, at the same time in a behaving animal. Jérôme also described how this has been scaled up, so the field can now access different fields of view at random locations and record traces from these brain areas.

More recently, they have been working on a system to record from entire areas, such as the visual cortex. The technology behind this is a system that has 16 laser beam paths and relies on careful optical engineering. The instrument enables the recording of up to a couple of thousand neurons at the same time. Why is this important? Jérôme explained that activity spreads widely, so it is important to record the activity across the entire visual cortex.

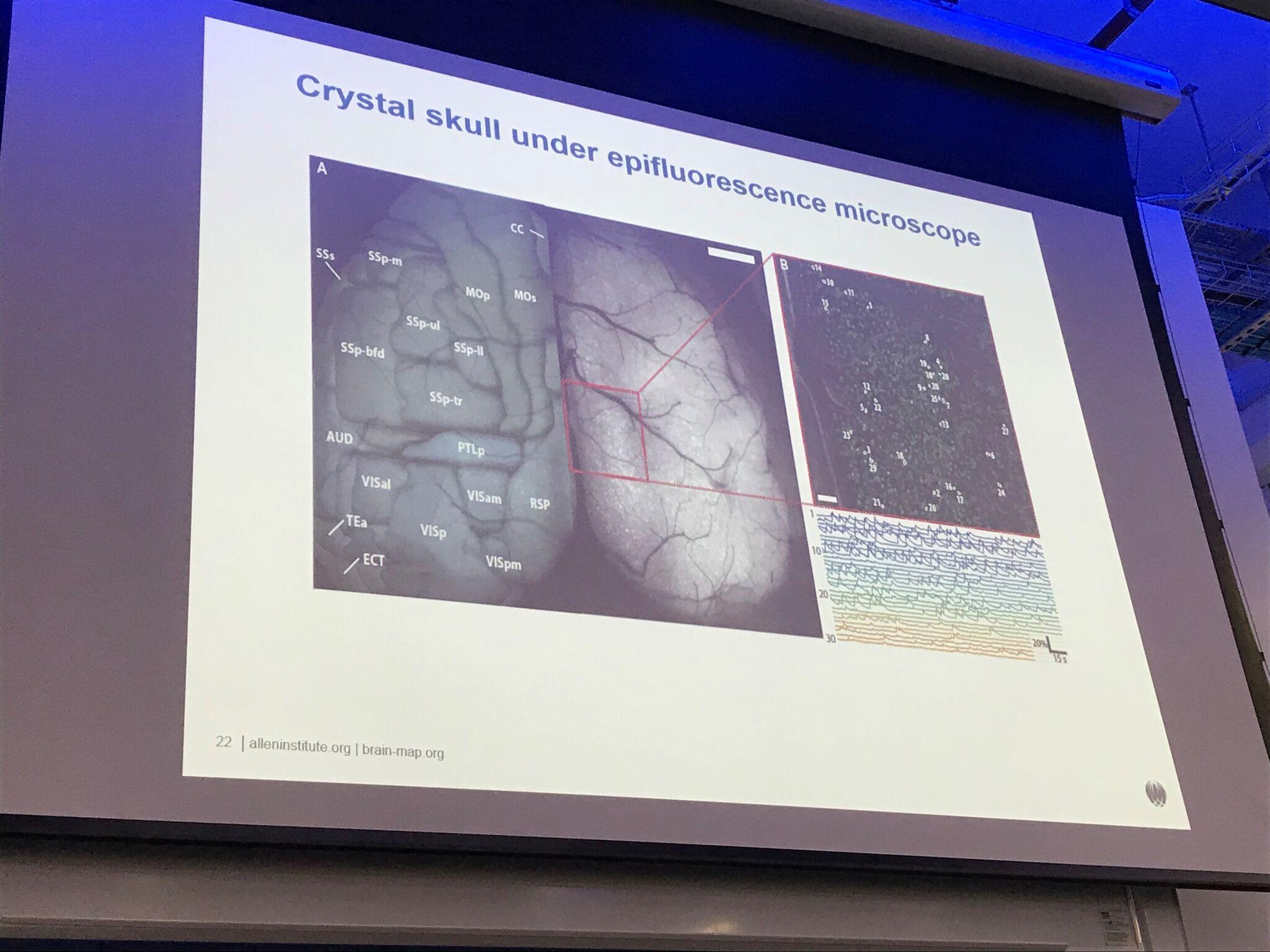

Although we now have the tools to scale up calcium imaging, Jérôme described how surgical preparations remain a limitation. In response, he showed a very influential protocol in which optical windows to the brain give access to the activity of the entire dorsal cortex, in real time, chronically and with cellular resolution. Furthermore, multiple imaging technologies are now available to parse this information effectively, the result of decades of technical developments across many laboratories.

Concluding this section of the talk, Jérôme said that we now have the tools to test many hypotheses about the cortex, and that the challenge now is not to build better microscopy tools, but to use them at scale.

High-throughput systems neuroscience for standardized and reproducible data generation

Referring back to the goal of understanding how sensory information is represented and processed in the cortex, Jérôme explained that if you want to avoid typical overfitting problems, you need to do many, many more experiments.

Consequently, Jérôme described how they built a unique platform for large-scale systems neuroscience, where available throughput reached around 60 experiments per week with a success rate of around 75% and high experimental quality. Thus an entire PhD's worth of data can be collected in a matter of weeks!
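As a rough back-of-the-envelope illustration of these figures, the sketch below multiplies the approximate throughput by the approximate success rate. The constant and function names are hypothetical and chosen for this example only; the two numbers are the rounded values quoted in the talk, not exact pipeline statistics.

```python
# Approximate figures quoted in the talk (both are rounded estimates).
EXPERIMENTS_PER_WEEK = 60
SUCCESS_RATE = 0.75


def successful_experiments(weeks: int) -> float:
    """Estimate how many experiments succeed over a number of weeks."""
    return EXPERIMENTS_PER_WEEK * SUCCESS_RATE * weeks


if __name__ == "__main__":
    for weeks in (1, 4, 12):
        print(f"{weeks:>2} week(s): ~{successful_experiments(weeks):.0f} successful experiments")
```

Under these assumptions the platform would yield roughly 45 successful experiments per week.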

Jérôme also outlined how they tackled the need for standardized data generation by carefully training dedicated teams for each step of the process. He confirmed that entirely new experimentalists can be trained on any part of the pipeline in about a month, thanks to 34 Standard Operating Procedures covering 335 pages.

Visual coding dataset

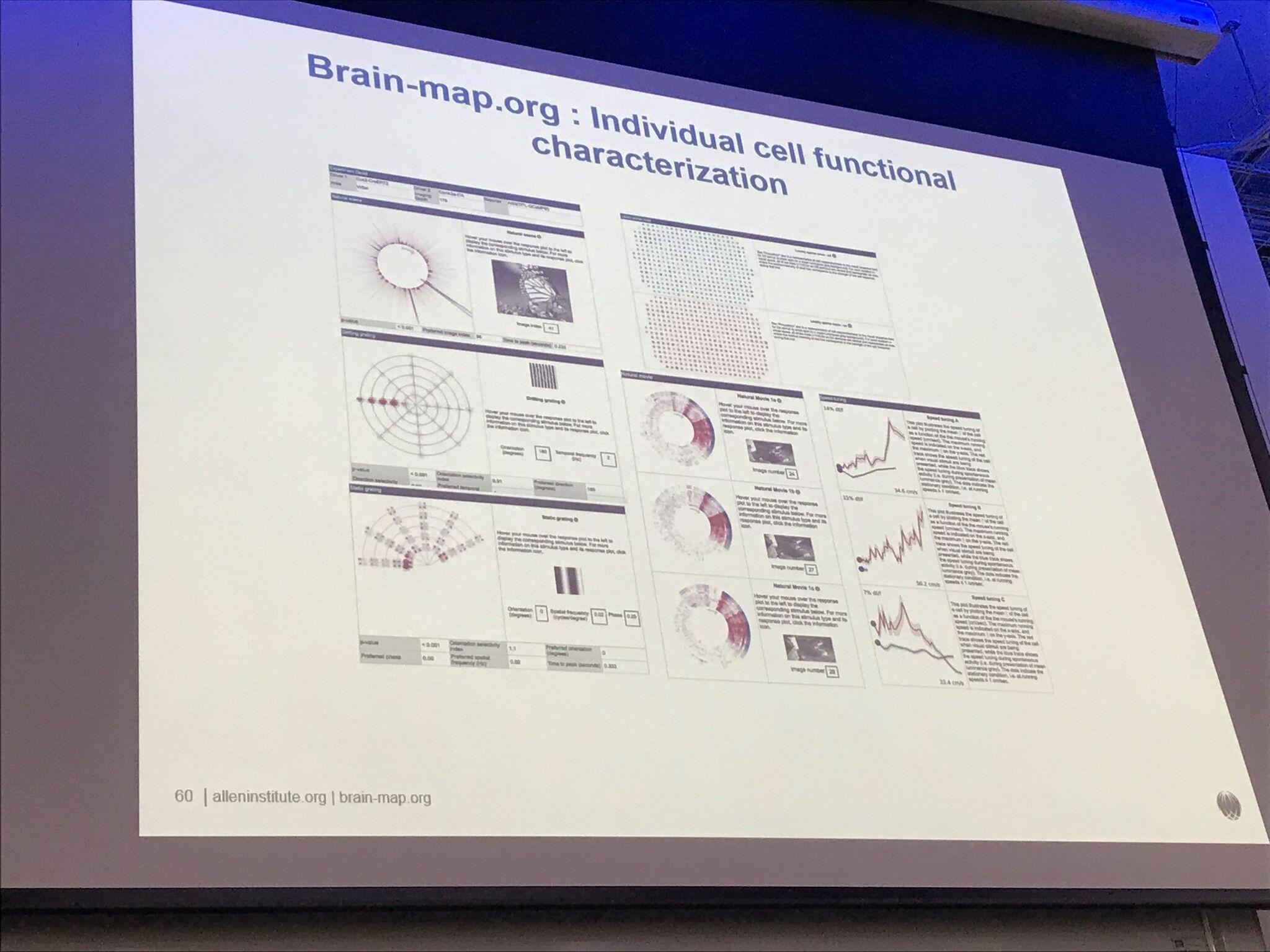

In the next part of his talk, Jérôme spoke about what they have done with this pipeline in the last few years. He focused on how they recorded the responses of both excitatory and inhibitory cell populations to standardized sets of visual stimuli, across all cortical layers and a large portion of the visual cortex. The result is an open map of cellular functional properties across the visual cortex, co-registered with connectivity and cell type characterization.

Jérôme highlighted that all the data is available on http://brain-map.org/ and that ongoing analysis of functional properties has revealed the distribution of visual responses across layers, cell populations and visual areas. Both a web app and an open-source SDK allow researchers across the world to leverage this resource.

The Allen Institute provide an API, so you can install the software on your machine and then directly access the data. This provides an opportunity for scientists across the globe to look at the results and compare with their own analysis and highlight interesting phenomena that they may want to dive deeper into with their own experiments.

Future of the Allen Brain Observatory

In the final part of his talk, Jérôme outlined his views on the future of the Allen Brain Observatory. The next step in their work is to look at how visual information is processed. To understand how the cortex is working, you need to have the animal engaged in a task, so they are looking to bring behaving animals into the pipeline.

Jérôme described how this step brings its own challenges, as they will need to scale up recordings to many more mice in this context. To do this, they have converged on a very simple change detection task: the animal is presented with a stimulus, for example an image with a certain contrast, followed by another stimulus that is either the same or different, and the animal receives a reward if the stimuli are different. In the next year, the Allen Institute are going to scale up recordings across many different brain areas while the animal is performing this task.
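The trial structure of such a task can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Allen Institute's implementation: the `Trial` class, the `make_trials` helper, the stimulus labels and the `change_prob` parameter are all hypothetical, and the reward rule simply mirrors the description above (reward when the two stimuli differ).

```python
import random
from dataclasses import dataclass


@dataclass
class Trial:
    """One trial: a first stimulus followed by a second stimulus."""
    first: str
    second: str

    @property
    def is_change(self) -> bool:
        # A "change" trial is one where the second stimulus differs.
        return self.first != self.second

    @property
    def rewarded(self) -> bool:
        # Assumed rule, following the talk summary: reward on change trials.
        return self.is_change


def make_trials(stimuli, n_trials, change_prob, seed=0):
    """Generate trials; each one is a change trial with probability change_prob."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        first = rng.choice(stimuli)
        if rng.random() < change_prob:
            # Pick any stimulus other than the first one.
            second = rng.choice([s for s in stimuli if s != first])
        else:
            second = first
        trials.append(Trial(first, second))
    return trials


if __name__ == "__main__":
    trials = make_trials(["image_A", "image_B", "image_C"], n_trials=10, change_prob=0.5)
    for t in trials:
        print(t.first, "->", t.second, "| rewarded:", t.rewarded)
```

A real behavioural pipeline would also track the animal's response on each trial; that is left out here because the summary above does not describe it.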

Jérôme also emphasized that, beyond multi-area recording, it will be important to be able to record from multiple brain layers at the same time. Next year this technology will be incorporated into the pipeline, so they will have three capabilities:

- High-throughput experimental recording

- Multi-area recording

- Layer imaging

Concluding, Jérôme remarked that now that the team has the capability to do large-scale systems neuroscience, the future for this project is to make use of this capability to test ideas and accelerate hypothesis testing. There are multiple ways this could be done. For example, a data scientist could analyse the data, find interesting phenomena they want to dive deeper into, and then submit a proposal to the Brain Observatory. A committee could review the proposal, then design and run an experiment to test the idea.

Another avenue could be for experimental labs to send in proposals on interesting phenomena for which they want to scale up recordings. Last year, this idea was piloted and Jérôme stated that he is really excited to see how this progresses, as it may be a new way for the field to generate knowledge.

In summary, Jérôme highlighted how technical developments in large-scale calcium imaging have unlocked our access to millions of neurons across the entire dorsal cortex. High-throughput systems neuroscience has enabled reproducible, standardized and efficient data generation. A standardized map of functional responses is now freely available for researchers across the world to explore, and this social experiment in systems neuroscience may accelerate scientific discoveries and hypothesis testing.

Contact:

April Cashin-Garbutt

Communications Manager, Sainsbury Wellcome Centre

a.cashin-garbutt@ucl.ac.uk

+44 (0) 20 3108 8028