How does the brain accurately represent self-motion during navigation?

By April Cashin-Garbutt

When was the last time you moved around the room? And when did you last think about how your brain enables you to do this? To navigate successfully in an environment, the brain needs to keep track of the direction we are heading. This representation of our orientation must be continuously updated as we move around. It is widely accepted that a type of cell in the brain, called the angular head velocity (AHV) cell, can track the speed and direction of head rotation. However, the mechanisms that determine the activity of these cells have remained unknown.

Elucidating egocentric navigation

One reason the mechanisms underlying AHV coding, and other self-centred navigation signals, have been difficult to elucidate is that many different sensory sources can contribute. For example, information used to track head turns can come from the vestibular system, the visual system, proprioception, eye movements, or feedback signals from the motor command. In freely moving animals, it is hard to disentangle all these sensory sources. In head-fixed animals, on the other hand, the sensory stimuli can be controlled, but it is hard to know how relevant the recorded neuronal activity is to real-world navigation.

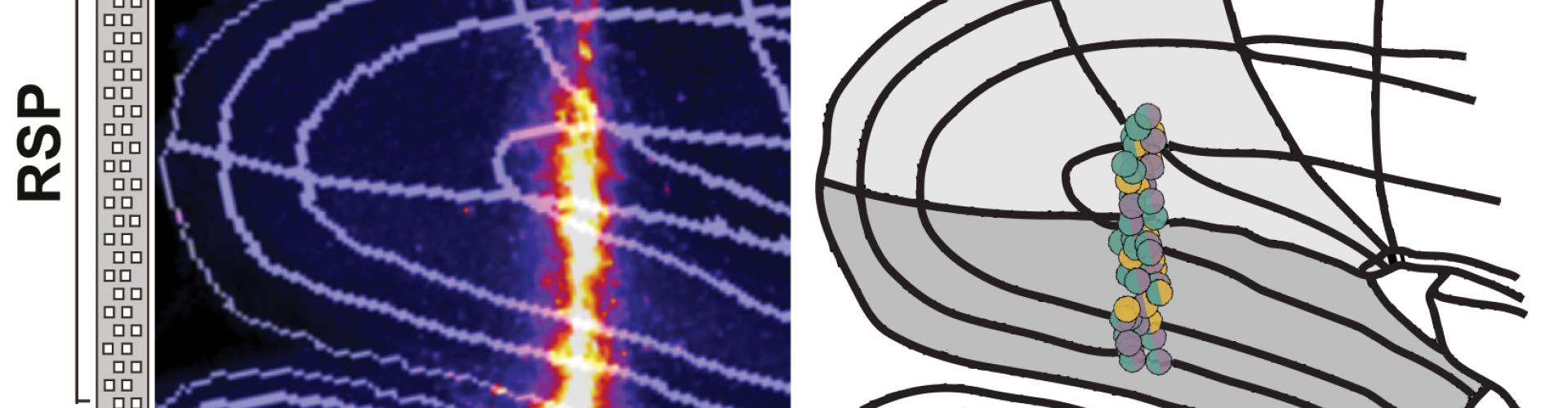

In a recent bioRxiv pre-print, Sepiedeh Keshavarzi, a Senior Research Fellow in the Margrie Lab, and colleagues combined these two approaches by recording from the same neurons under both freely moving and head-fixed conditions. Focusing on the retrosplenial cortex (RSP), a brain area previously shown to be important for spatial orientation and self-motion-guided navigation, they aimed to determine which sensory sources contribute to the activity of AHV cells, and to what extent.

First, they identified AHV cells in the freely moving mouse. They then tracked the same neurons in a head-fixed preparation in which the mouse was passively rotated in the dark. This approach removed several sources of sensory and motor information, including locomotion, the motor command for voluntary head movements, neck-on-body proprioception and visual flow.

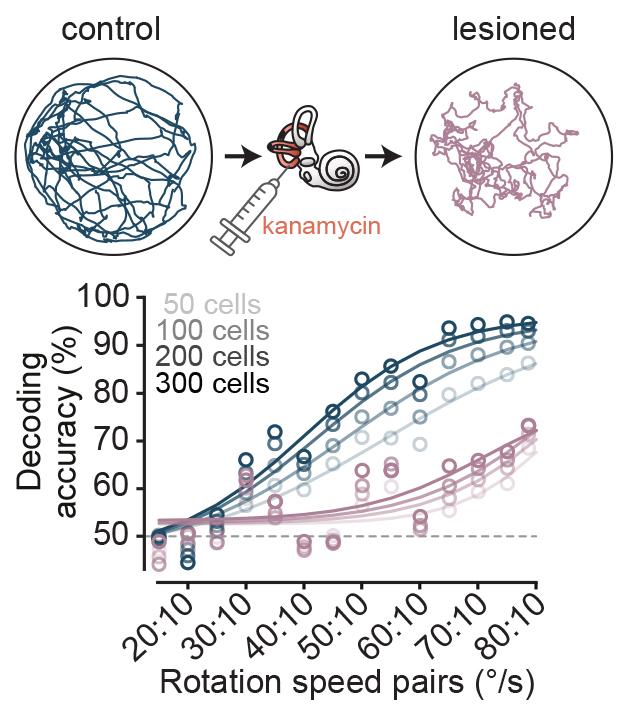

The team then quantitatively compared the tuning of AHV cells in freely moving animals with their tuning in the head-fixed preparation. They found that 80% of AHV cells also encoded angular velocity during passive rotation, and many of them had identical tuning profiles in the two conditions. Because the other sensory sources were absent in the head-fixed preparation, this implied that the underlying input was either vestibular signals or feedback from reflexive eye movements. Keshavarzi then performed vestibular lesions and pupil tracking, which allowed her to nail down the vestibular system as the workhorse of angular head velocity signalling.

Understanding the role of visual input

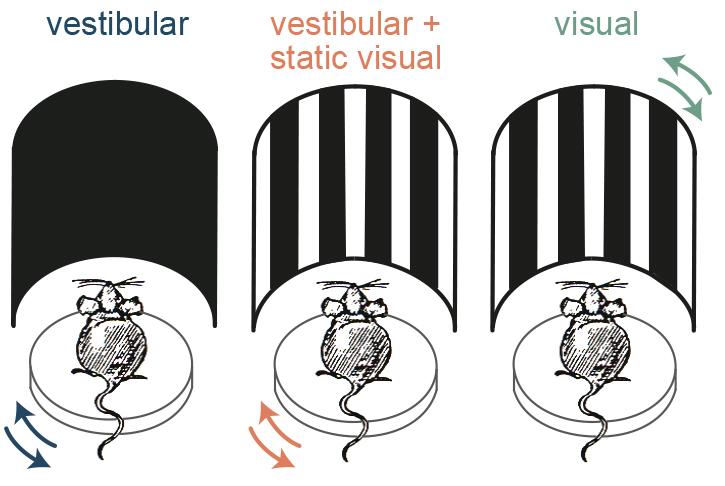

The RSP is known to be heavily connected with visual areas, so the next question was whether AHV cells also receive visual input. To find out, the team presented simulated optic flow by applying the motion profile of their rotation platform to a surround visual stimulus. They found that, in addition to responding to vestibular stimuli, the AHV cells responded to the simulated optic flow. But how important is this visual input to the AHV cells? Does it contribute to angular head velocity signalling?

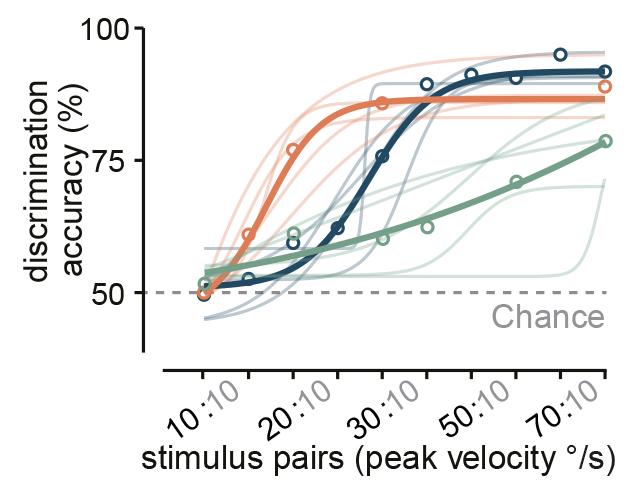

The researchers found that visual input increases the gain of angular velocity coding and therefore improves its accuracy. They showed this both at the single-cell level and, using decoding methods, at the population level.

But is this behaviourally relevant for the mouse?

The next question was whether mice can actually combine vestibular and visual cues to estimate their angular velocity more accurately, or whether they simply switch to relying on visual stimuli in such experiments. Answering this question was very important because, despite the extensive use of rodents as a model for studying spatial navigation, such behavioural experiments had only been performed in humans and monkeys.

To test this, the researchers trained mice in a go/no-go task to discriminate the speed of their own angular motion using vestibular or visual information alone, or the two combined. They found that, mirroring the neuronal data, the animals made the most accurate choices when both sensory sources were available, and that their performance was significantly worse when only visual input was available. Thus, rather than simply switching to visual stimuli, the mice combined the two sources of information to estimate their own motion more accurately.

Addressing controversies

This finding also puts to rest some of the controversies in the field. A number of previous experiments showed that vestibular signals are suppressed during voluntary head movements, and that the vestibular signals observed relate more to passive motion. Yet vestibular signals are thought to be important for navigation, because animals with vestibular lesions perform poorly in spatial tasks. However, it was not known for sure whether this deficit was due to the loss of vestibular input itself or to disruption of other signals that contribute to angular velocity coding, such as proprioception or eye movements. This new research brings clarity to the question.

The study also shows how extensive angular velocity coding is in the RSP. Current network models of the mammalian head direction system assume that AHV cells reside primarily in subcortical regions, and that higher-order cortical areas, such as the RSP, inherit a pure world-centred head direction signal. The existence of such widespread AHV coding in the RSP calls for these classical models to be revised. In addition, while it has been suggested that visual inputs to the RSP help stabilise our sense of direction by providing information about environmental landmarks, this research shows that self-generated visual flow input to the RSP can also improve spatial orientation.

Next steps

Despite the clarity this research provides, many questions remain. For example, which pathways bring vestibular and optic-flow information to the RSP? To what extent does the RSP contribute to self-motion perception and path integration? Do these processes depend on discrete brain areas, or on a small group of regions that collectively signal self-motion information?

The next step will be to address such questions by manipulating neuronal activity in different regions, including the RSP and the areas connected to it, and combining these manipulations with perceptual and navigation tasks.

Read the pre-print on bioRxiv: The retrosplenial cortex combines internal and external cues to encode head velocity during navigation