From humans to machines – how language shapes our worlds

By April Cashin-Garbutt

Once language is learned, it can constrain perception. For example, the ability to distinguish different shades of colour is related to the number of words a language uses to describe them. The intriguing impact of language inspired our PhD students to choose ‘Mind your language’ as the theme for this year’s student symposium at SWC. From humans to machines, the joint SWC/GCNU student organising committee delivered a fantastic line-up of speakers who shared exactly how language and statistical learning shape our worlds.

The joint SWC/GCNU student organising committee organised a fantastic line-up of speakers and also delivered an inspiring student engagement session at the end of the symposium, where groups met with speakers and practised pitching a grant proposal for a collaboration between SWC and the speaker, judged by SWC Group Leaders. Pictured left to right (back row): Alice Koltchev, Rodrigo Carrasco Davis, Nicole Maug, Kira Düsterwald, Mattias Horan and (front row) Sarah Elnozahy, Nicole Vissers

The development of language

Beginning with the development of language, the first speaker Judit Gervain, Professor of developmental psychology at the University of Padua, shared how statistical learning, the mechanism invoked in the development of many different elements of language, is present throughout nature. Even plants use statistical learning to orient themselves towards water sources.

Judit defined statistical learning as sampling frequency of occurrence, co-occurrence and other statistical characteristics of objects in the environment. She explained that, while statistical learning is very useful for learning about the environment, its drawback is that it is almost too powerful and can lead to combinatorial explosion, as there is a huge number of potential entities and statistics from which an organism can learn. For example, the statistical regularities in language hold over a variety of units in the speech input including words, syllables, and phonemes.
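To make the definition concrete, here is a minimal Python sketch of tracking frequency of occurrence and co-occurrence over a stream of syllables. It is an illustration only, not a method presented in the talk: the syllables, the made-up "words", the function name `syllable_statistics` and the use of forward transitional probabilities as the co-occurrence statistic are all assumptions chosen for the example.

```python
from collections import Counter


def syllable_statistics(stream):
    """Count units, adjacent pairs, and forward transitional probabilities.

    The transitional probability of (a, b) is the share of occurrences of
    `a` (excluding the final unit of the stream) that are followed by `b`.
    """
    freq = Counter(stream)                     # frequency of occurrence
    pairs = Counter(zip(stream, stream[1:]))   # co-occurrence of neighbours
    first_counts = Counter(stream[:-1])        # how often each unit starts a pair
    trans = {(a, b): n / first_counts[a] for (a, b), n in pairs.items()}
    return freq, pairs, trans


# Hypothetical toy input: two invented three-syllable "words" in a fixed order.
words = {"A": ["bi", "da", "ku"], "B": ["pa", "do", "ti"]}
order = ["A", "B", "A", "A", "B", "B", "A", "B"]
stream = [syllable for w in order for syllable in words[w]]

freq, pairs, trans = syllable_statistics(stream)

# Within a word the next syllable is fully predictable;
# across a word boundary it is not.
print(trans[("bi", "da")], trans[("ku", "pa")])
```

In this toy stream, syllable pairs inside a word have a higher transitional probability than pairs that span a word boundary. The same function could be run over phonemes or whole words instead of syllables, which hints at the combinatorial problem: every choice of unit and statistic is another thing a learner could, in principle, track.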

In her work on speech perception, Judit shared how she researches the objects that babies track in speech so that they can learn language from the sounds they perceive. She described the hierarchies of neural oscillations that babies have access to, some of which foetuses can begin to hear around 24-28 weeks of gestation, and proposed that the brain and language may have co-evolved, with language evolving units that nicely fit the brain’s oscillations.

After sharing her research involving EEG recordings of a few locations on the heads of newborns and 6-month-old babies, Judit outlined how units may be preferentially weighted as a function of development to contribute to more efficient statistical learning. For example, Judit’s team found theta suppression in 6-month-olds. While this result was initially puzzling, Judit hypothesised that this suppression of the syllable level may allow babies to dedicate resources to the phonemic level so they can start learning words and grammar.

The ability to learn to communicate was also the focus of a talk by virtual speaker Ahana Fernandez, a behavioural biologist and postdoctoral researcher at the Museum of Natural History Berlin, Leibniz Institute for Evolution and Biodiversity Research. Instead of human babies, Ahana’s research focuses on bat pups.

In her talk, Ahana highlighted how vocal imitation, the ability to learn from a vocal tutor, is not widespread in animals. Her research focuses on one particular species of bat, the greater sac-winged bat, which is known to be capable of vocal imitation and engages in conspicuous vocal practice behaviour for seven to ten weeks, practising multisyllabic vocal bouts of up to 43 minutes every day!

In comparison, in human babies precanonical babbling (of non-speech sounds) occurs in the first 2-3 months of life, followed by canonical babbling (of speech sounds) and variegated babbling (speech sounds that are more varied and less repetitive) from 6-7 months to 1 year, which is around the time when babies typically begin to produce their first words. By analysing the babbles of bat pups, Ahana performed the first formal comparison of babbling behaviour in mammalian vocal learners and found that bat pup babbling is characterised by the same features as babbling in human infants.

Using communication to work together

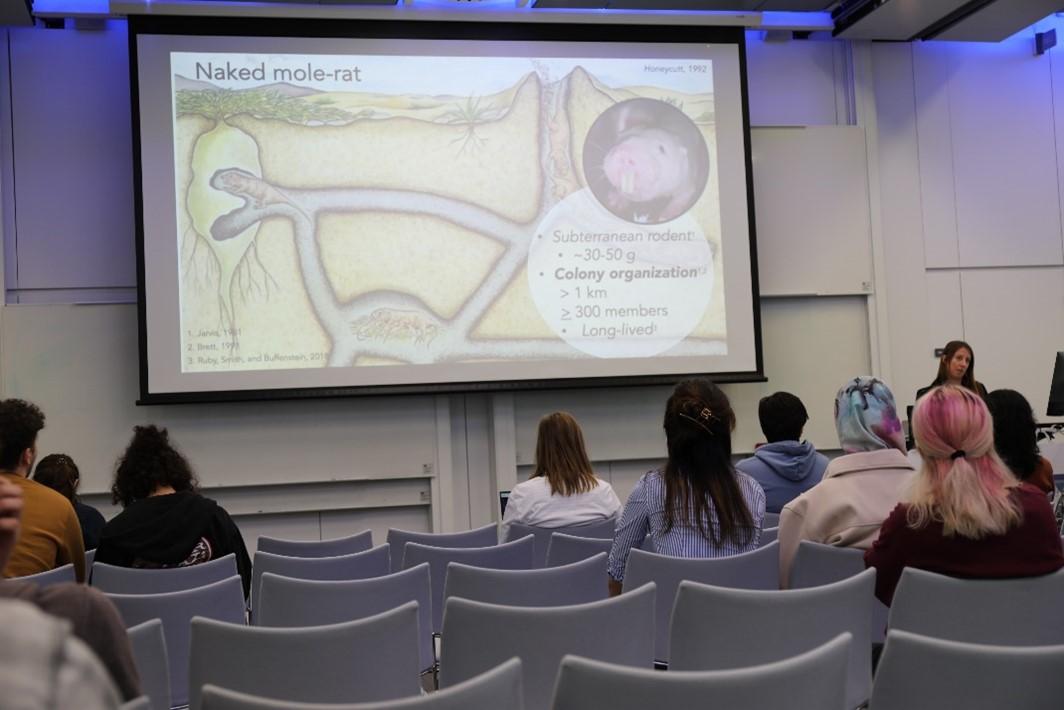

Speaking further about the importance of research in bats, Ahana highlighted how they have a large diversity of social systems and vocal repertoires, and have evolved complex vocalisations. The influence of sociality on vocal communication was also the topic of another speaker at the symposium, Alison Barker, Group Leader at the Max Planck Institute for Brain Research. Alison imparted lessons from underground, linking social dynamics and vocal communication in the naked mole-rat.

A member of the African mole-rat family found in Somalia, Ethiopia and Kenya, the naked mole-rat has very limited access to resources because of low rainfall and scarce food. Despite this challenging environment, the naked mole-rat has thrived in these areas thanks to its social structure, with more than 300 members living together in each group.

In her talk, Alison described her research studying the vocalisations that enable naked mole-rats to live in such complex hierarchical social structures, allowing them to communicate and effectively distribute tasks. She spoke of her work investigating how vocal dialects are established and maintained, and how colony dialects evolved. Alison ended her talk with some fascinating open questions, including how we can adapt machine learning and other computational tools to different datasets.

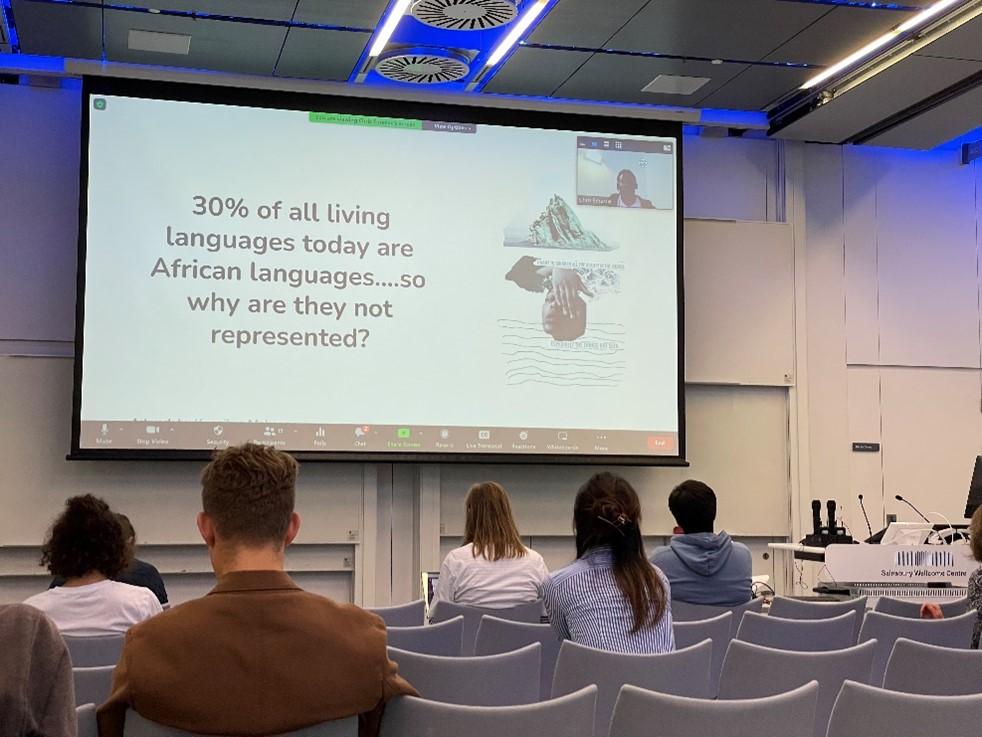

Datasets were also a topic touched upon by virtual speaker Chris Emezue, a Masakhane researcher, graduate student at Technical University Munich and research intern at MILA. Chris highlighted the lack of representation of African languages in Natural Language Processing (NLP) and the ways we can use the few datasets we have available to make robust models without more data.

Chris shared that there are over 2,000 African languages, but Alexa, Siri and Google Home do not support a single one. Masakhane is a grassroots organisation that aims to change that. Working on the principle of Ubuntu, which means “I am because we are,” Masakhane engages in participatory research as a means to ensure everyone who should be in the room is in the room.

Using Ubuntu as a research philosophy has led to many accomplishments for Masakhane, and the community have now published a range of papers, including a Case Study in African Languages that went on to win a Wikimedia Foundation Research Award of the Year for its novel approach to participatory research around machine translation for African languages.

The language of machines

Building a perfect language-understanding machine was the focus of a talk by Felix Hill, a research scientist at DeepMind, who shared important insights about how language and intelligence interact. Felix described the impact of embodiment and the agent’s perspective on the world. He showed how artificial agents perform differently on a task where they receive the same instructions, such as ‘put the object on the bed,’ but receive visual information from either a 3D first-person perspective or a 2D third-person (top-down) perspective.

The 3D, first-person agents learned to be more ‘systematic’ and were naturally able to generalise. Felix attributed most of this difference to egocentricity: the first-person perspective is advantageous in this task, which involves language and specifically applying known ‘verbs’, which describe actions, in novel constructions.

Felix also described another way language interacts with intelligence through relations and abstraction. He highlighted how we often use language to explain why something went well or badly. For example, we learn better from teachers who mark our exam papers and write down why particular answers are wrong. In the same vein, Felix explored whether explanation on top of reinforcement learning (RL) might allow artificial agents to learn faster or better.

Through an odd-one-out task, Felix shared how explanations can help artificial agents to move past focusing on shortcut features to learn more difficult ones, highlighting how building a generally intelligent agent may benefit from building a perfect language-understanding machine.

Music, the language of the soul

Knowing whether something is right or wrong was also a focus of a talk by virtual speaker David Quiroga, a postdoc in Robert Knight’s lab at UC Berkeley. David described how when we listen to music we always try to predict when the next beat will come, for example when dancing or playing music together. Just like in language, we learn expectations in music listening through statistical learning.

David spoke of the brain as a prediction machine that creates a model of the world and has an amazing ability to predict the next note in a piece of music. This has consequences for our experience of music, as a surprising chord can make a piece of music more pleasurable. However, David also talked about uncertainty in music listening. Uncertainty may modulate how much weight we give to unexpected events as the brain does not want to learn from noise. Research has found that surprising events are liked more under low uncertainty and less under high uncertainty. Thus, there is a sweet spot when creating music and a surprising chord is only more pleasurable when the rest of the piece is predictable and not when the piece is chaotic.

Language and culture – changing the ways we think

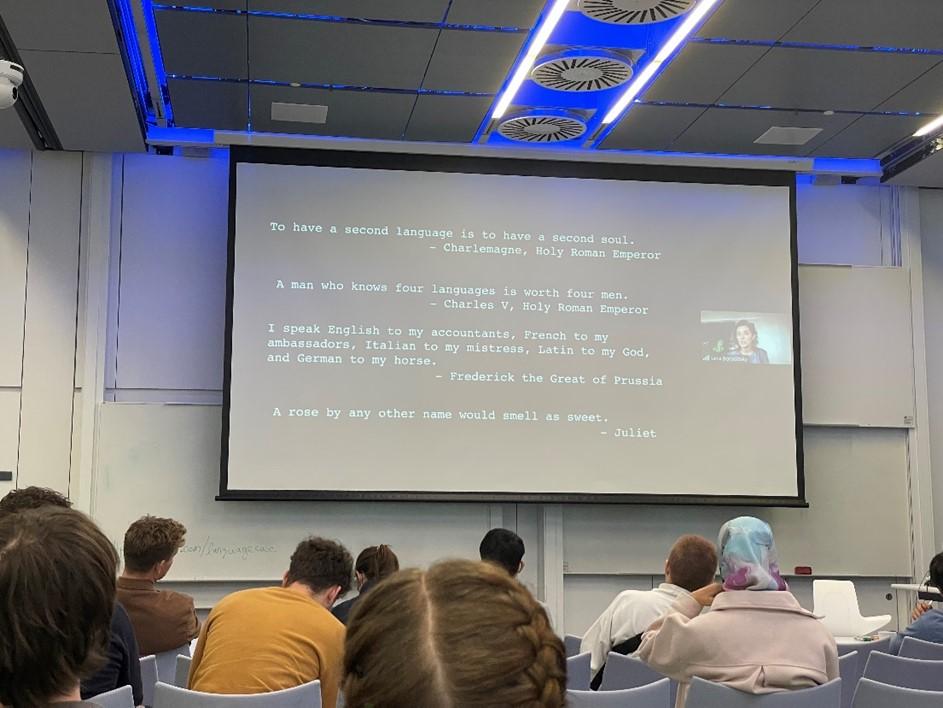

“To have a second language is to have a second soul” – these famous words by Charlemagne, Holy Roman Emperor, were quoted by the final speaker of the symposium, Lera Boroditsky, Professor at UCSD, who shared her research on the ways in which linguistic background shapes the way we structure our thoughts.

The English language, for example, is obsessed with time, and Lera highlighted how ‘time’, ‘year’ and ‘day’ are three of the top five nouns in English. But time is not something we experience directly. We cannot see, taste or smell time, yet we represent it by laying it out on calendars and even in our hand gestures. In English, we typically think of the future as ahead of us: we say things like ‘looking forward to meeting you’ and gesture forwards when we refer to the future. However, the linguistic structure used to describe time is not the same across cultures.

Lera shared that in the Aymara language, which is spoken in Bolivia, the past is in front and the future is behind: we already know what has happened in the past, so we can see it in front of us, whereas we cannot see the future, so it is behind. She also explained how time does not have to flow relative to the body. In Pormpuraaw, a remote Australian Aboriginal community, time is represented strikingly differently. Instead of front-to-back or back-to-front, Pormpuraawans represent time with respect to cardinal directions, from east to west. Thus time always goes in the same direction based on the landscape rather than the individual.

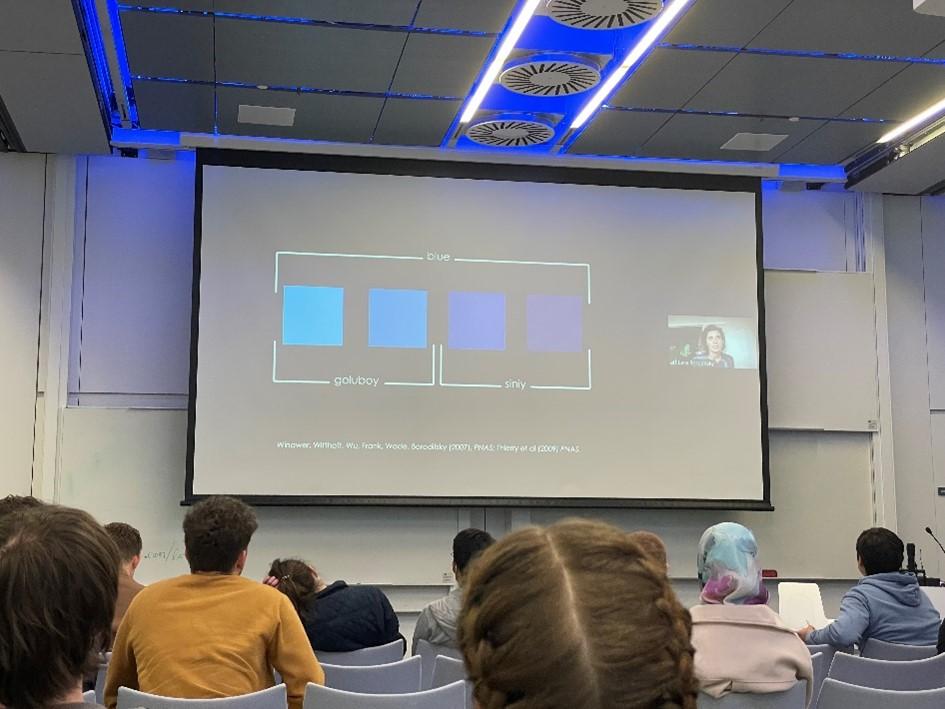

Time is not the only concept that differs depending on linguistic structures, and in her talk Lera shared another fascinating example: the concept of colour. In English we have the concept of ‘blue,’ but Russian has no single word that covers all the different hues of blue that the English word names. Instead, Russian very consistently distinguishes between shades of light blue and dark blue using two different colour words. Russian speakers have a lifetime of experience of having to distinguish between things that fall into one category versus the other, whereas in English you are not required to make these distinctions. During brain studies where individuals see the same sequence of colours, speakers of languages that make this distinction, like Russian, show a categorical shift marked by the brain when the sequence turns from light to dark blue, whereas this does not occur in speakers of languages that don’t make this distinction, like English.

Lera ended her talk by reinforcing the importance of language in shaping the way we think of the world. Language bequeaths to us the cultural and intellectual heritage of thousands of generations before us. Ideas are built into language and help us to get orientated and allow entry into different forms of thinking. But Lera also warned how languages reduce cognitive entropy. She explained this concept by describing how languages give us a particular way to think, or a particular set of roads for our minds to travel, and once we are on those roads we tend not to look outside those tracks for other ways to think. Once you understand the power language has in guiding thought, it gives you the opportunity to think about how you want to think. And so perhaps we should all take heed of the SWC and GCNU PhD students’ symposium theme: ‘Mind your language!’