Long-term learning trajectories: uncovering the role of dopamine

By April Cashin-Garbutt

Dopamine plays an important role in learning. However, we know little about how it governs diverse learning across individuals over long periods of time. A research collaboration between the Lak Lab at the University of Oxford and the Saxe Lab at the Sainsbury Wellcome Centre at UCL set out to investigate the role of dopamine and the rules that govern long-term learning.

Led by first author Samuel Liebana, an Oxford DPhil student at the time of the study and now a postdoc in the Saxe Lab, the team found that long-term learning in the brain involves dopamine teaching signals that shape diverse yet systematic learning trajectories. The study, published today in Cell, shows how this learning mechanism can be understood theoretically using neural networks. The findings could have future implications for understanding individual differences in learning, improving educational curricula, furthering AI and revealing the mechanisms of disorders involving dopamine.

Learning over long time periods

Like many things in life, learning to play tennis can take a long time. Some people start by learning how to do forehands without practicing backhands, whereas others may try to learn both strokes from the beginning. But what is the best method and how does the brain achieve such long-term learning?

“Long-term learning has been difficult to reproduce in a lab setting because it requires long periods of time. However, in our study we developed a learning paradigm for mice that takes around 15-25 days. The mice developed different strategies, which allowed us to explore their diverse learning trajectories,” explained Dr Liebana.

The researchers needed to develop a paradigm that was in the sweet spot of complexity: too simple and most individuals would learn in the same way; too complex and the mice would be unable to learn at all.

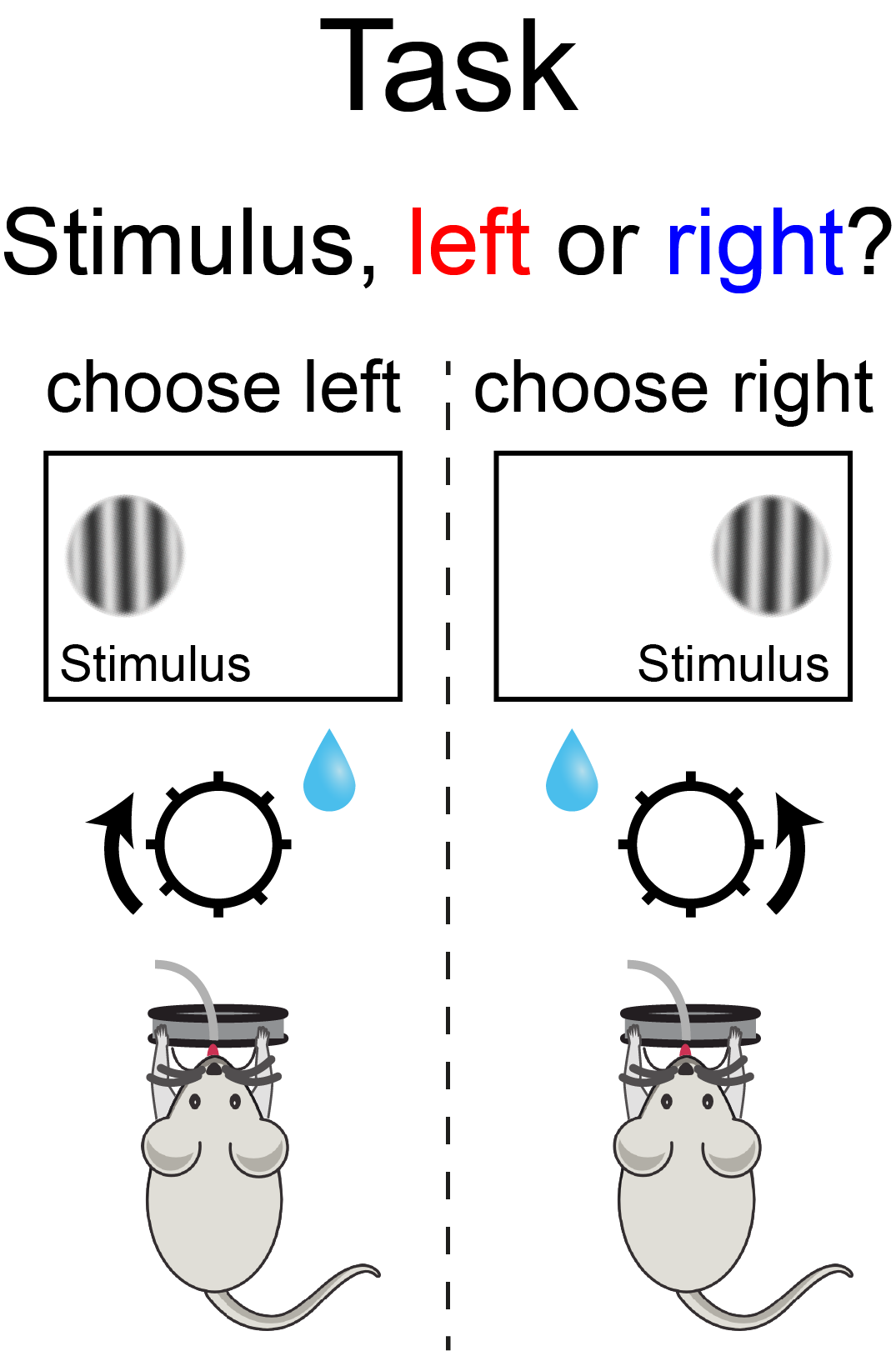

The task involved showing mice a visual stimulus either on the left or right half of a screen. Over a few weeks, the mice learned to use their front paws to turn a wheel to move the visual stimulus to the centre of the screen and receive a reward. While the task was simple enough for most of the mice to learn, it was also complex enough for three main learning trajectories to emerge.

Figure 1: Task schematic. Mice learned to turn a wheel to move a left/right stimulus to the centre of a screen and obtain a reward.

Understanding diversity in learning

The team found considerable diversity in behaviour across mice, particularly early on in the task when the mice were turning the wheel without using the visual stimuli. Some mice chose mostly left, others chose mostly right, and some chose in a more balanced fashion.

“There are some trials in which no stimulus is presented, and we just record the intrinsic bias of the animal to choose left or right. These trials were crucial as they revealed to us what the animals did in the absence of visual stimuli throughout learning. The behaviour on those trials was key to being able to predict and assess their later strategies,” explained Dr Liebana.

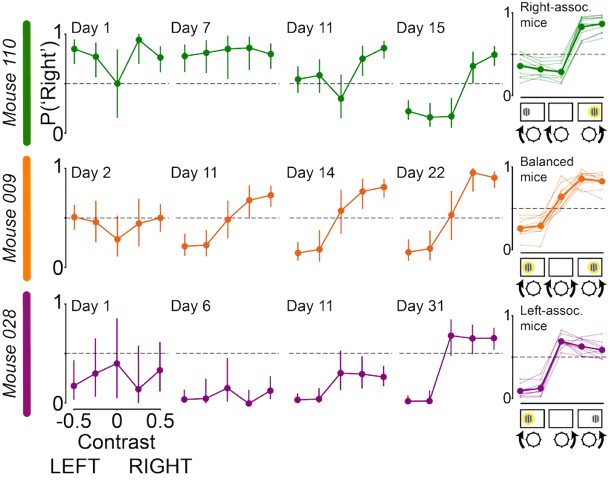

The diverse behaviour early in learning predicted the mice's strategies later in learning, when they were able to perform the task accurately. Animals that were initially right-biased developed a strategy in which they used only the stimulus on the right side of the screen and never the one on the left (i.e., they made the same choices on trials with the left stimulus as on trials without a stimulus). Conversely, left-biased animals used only the left stimulus. The balanced animals, which turned the wheel both left and right early on, developed a strategy using both left and right stimuli.

“We were really surprised by how well we could predict the final strategy from the early bias. It was remarkable to find that we could predict what behaviour would look like 20 days down the line from behaviour in the first four or five days,” commented Dr Liebana.

Figure 2: Mouse learning trajectories in the form of psychometric curves over learning. Psychometric curves show the proportion of choices reporting right, P(‘Right’), as a function of the position and contrast of the visual stimulus. The first four columns show the psychometric curves from four example days of three example mice, and the last column shows average expert psychometric curves clustered by trajectory type.
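For readers who want to see how a psychometric curve of this kind is built from trial data, the following is a minimal sketch rather than the study's analysis code. It assumes a signed-contrast convention (negative for a left stimulus, zero for no stimulus, positive for a right stimulus), and the function name and the example trials are hypothetical.

```python
from collections import defaultdict


def psychometric_curve(signed_contrasts, chose_right):
    """Return {signed_contrast: P('Right')} computed from per-trial data."""
    # Map each contrast to [number of rightward choices, number of trials].
    counts = defaultdict(lambda: [0, 0])
    for contrast, right in zip(signed_contrasts, chose_right):
        counts[contrast][0] += int(right)
        counts[contrast][1] += 1
    return {c: r / n for c, (r, n) in sorted(counts.items())}


# Hypothetical trials (assumed convention: negative = left, 0 = no stimulus).
contrasts = [-1.0, -1.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0]
choices = [False, False, True, False, True, True, True, True]
curve = psychometric_curve(contrasts, choices)
```

In this sketch, the value at zero contrast plays the role of the no-stimulus trials described above: it reflects the animal's intrinsic left/right bias rather than its use of the stimulus.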

Investigating the role of dopamine

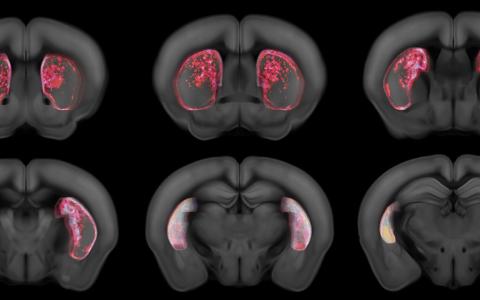

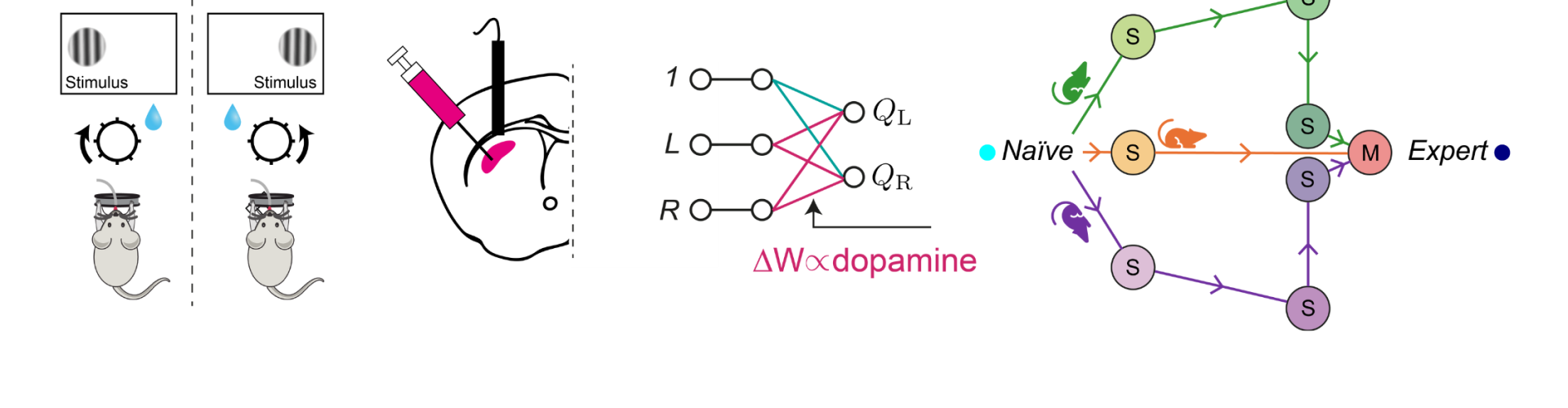

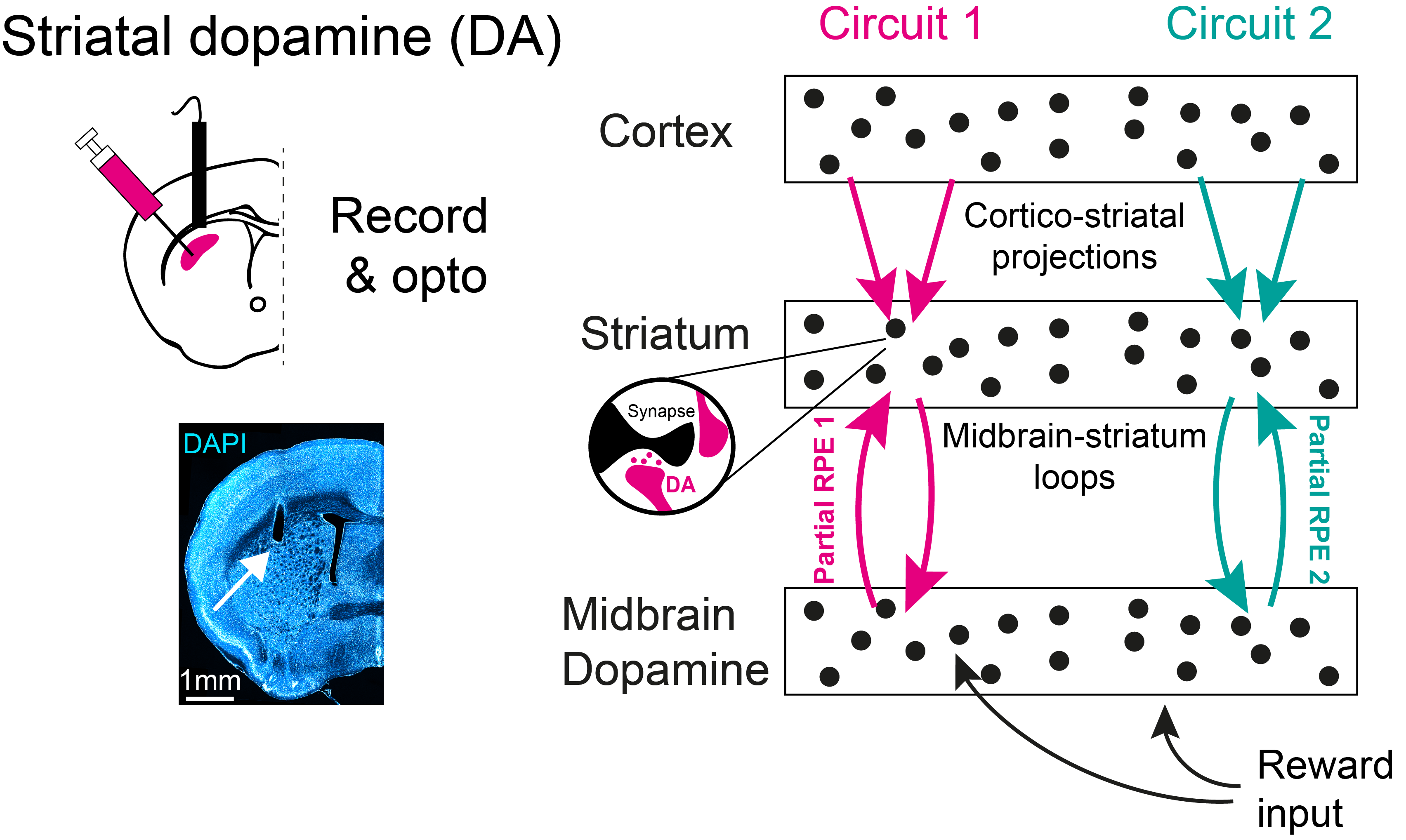

The team imaged dopamine release in both the dorsomedial and dorsolateral striatum. They demonstrated that dopamine is involved in long-term learning through updating associations between stimuli and actions. However, they found that the common description of dopamine as encoding a 'total' reward prediction error (RPE, the difference between actual and predicted reward) did not accurately describe the patterns of release they observed. Instead, the patterns of dopamine release differed across the dorsal striatum. Dopamine acted as a partial RPE, reflecting and updating associations in proportion to the difference between actual reward and a reward prediction based on the partial cortical information available to each striatal circuit.

Figure 3: Striatal dopamine was recorded using fibre photometry and manipulated using optogenetics. Dopamine signals acted as partial reward prediction errors (RPEs) reflecting the difference between actual reward and partial reward predictions in each circuit.
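The difference between a total and a partial RPE can be illustrated with a small numerical sketch. This is not the model from the paper: the two circuits, their weights, their inputs and the learning rate are all hypothetical values chosen only to show that each circuit computes its error from its own prediction.

```python
def predict(weights, inputs):
    """Reward prediction from the inputs available to one circuit."""
    return sum(w * x for w, x in zip(weights, inputs))


def update(weights, inputs, rpe, lr=0.1):
    """Adjust each association in proportion to the prediction error."""
    return [w + lr * rpe * x for w, x in zip(weights, inputs)]


reward = 1.0
# Hypothetical circuits, each seeing only part of the available information.
weights_a, inputs_a = [0.2], [1.0]
weights_b, inputs_b = [0.5], [1.0]

# Partial RPEs: each circuit compares reward with its own prediction.
rpe_a = reward - predict(weights_a, inputs_a)
rpe_b = reward - predict(weights_b, inputs_b)

# Total RPE: reward compared with the summed prediction of both circuits.
rpe_total = reward - (predict(weights_a, inputs_a) + predict(weights_b, inputs_b))

new_weights_a = update(weights_a, inputs_a, rpe_a)
```

With these illustrative numbers the two partial errors differ from each other and from the total error, so each circuit would receive a different teaching signal on the same rewarded trial.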

To test whether dopamine plays a causal role in driving learning, the researchers used optogenetics to turn dopamine neurons ON and OFF. First, they inhibited dopamine release, which showed that dopamine was necessary to learn the task. Second, they stimulated dopamine release with an excitatory opsin, which confirmed the partial reward prediction error hypothesis.

Using neural networks to understand long-term learning rules

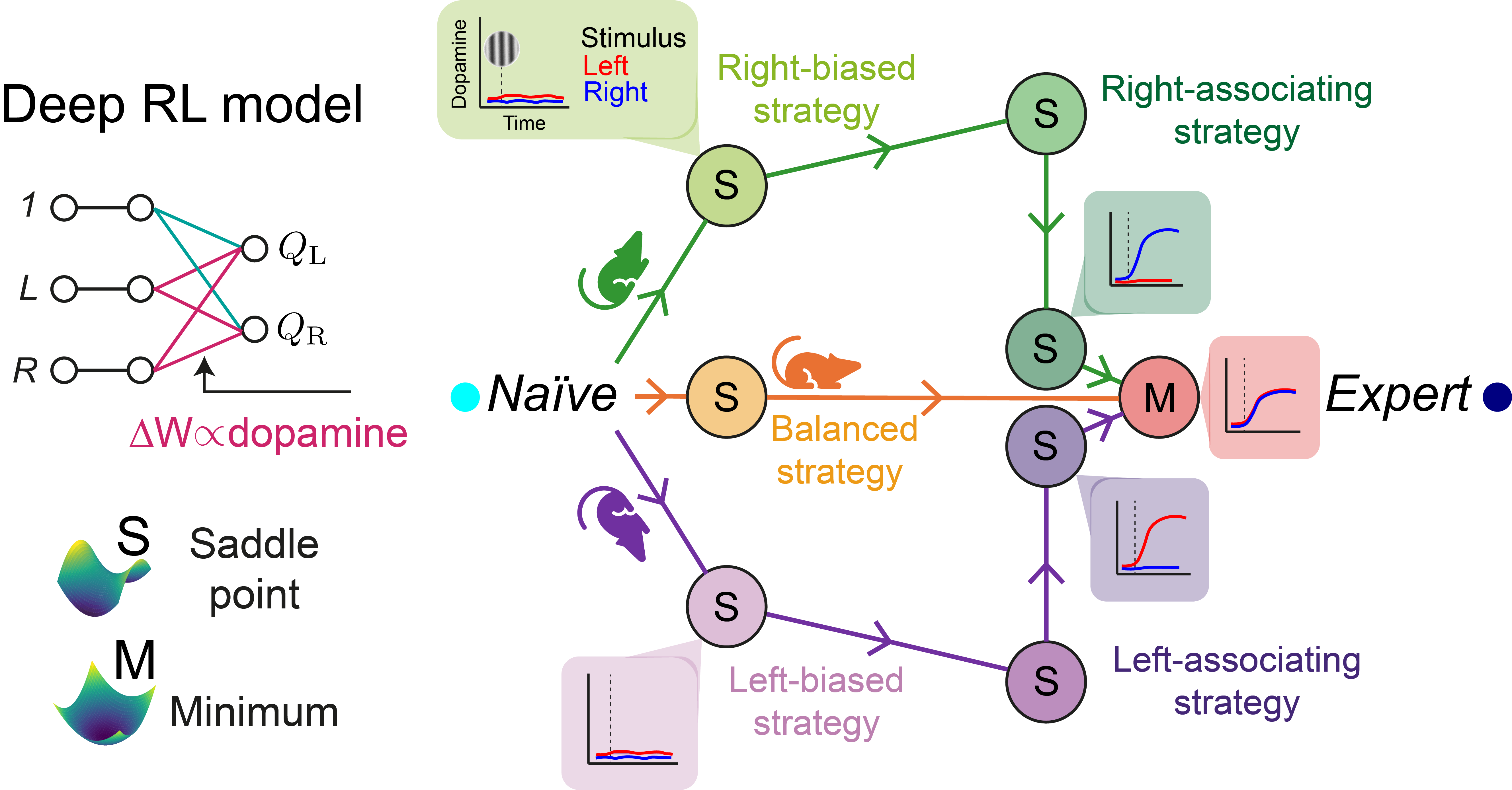

The team used a deep neural network model to capture the mice's learning trajectories. The network was trained with teaching signals that mimicked the recorded dopamine and thus updated parameters based on partial reward prediction errors.

“We essentially showed that when we train a deep neural network with this learning rule on the same task, it reproduces the evolving behaviour of the mice and also the patterns of dopamine release,” explained Dr Liebana.

The team also demonstrated that the learning rule can be written as gradient descent on a particular loss. This helped them to understand the learning mechanism and analyse long-term learning from a theoretical perspective. They found that the learning dynamics are governed by a hierarchy of saddle points, in the vicinity of which learning temporarily slows down.

The saddle points explained the mice's diverse progression through strategies, as well as why mice often remained stuck using certain strategies.

Figure 4: Schematic of the deep neural network model and hierarchy of saddle points that governed the diverse yet systematic learning dynamics.
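To give a feel for how a saddle point can slow learning, here is a toy sketch that is much simpler than the network in the study. It assumes a two-parameter "deep" linear model whose output is the product a * b, trained by plain gradient descent on the illustrative loss 0.5 * (1 - a * b)^2. The point a = b = 0 is a saddle of this loss, so a model started close to it barely improves at first and then learns quickly. The function name, initial values and learning rate are hypothetical.

```python
def train(a, b, lr=0.1, steps=100):
    """Gradient descent on 0.5 * (1 - a*b)**2; returns the loss at each step."""
    losses = []
    for _ in range(steps):
        err = 1.0 - a * b
        losses.append(0.5 * err ** 2)
        # Gradients of the loss with respect to a and b.
        grad_a, grad_b = -err * b, -err * a
        a, b = a - lr * grad_a, b - lr * grad_b
    return losses


# Start close to the saddle point at a = b = 0.
losses = train(0.01, 0.01)
```

Plotting `losses` against step number would show a long plateau near 0.5 followed by a rapid drop towards zero, a one-saddle analogue of the temporary slowdowns described above.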

Implications for education, AI and maladaptive behaviour

The team are now exploring why the brain's learning mechanism may be governed by saddle points. One idea is that they allow complex tasks to be divided into smaller digestible chunks, which can be learnt and consolidated before moving on to the next.

“I hope that by understanding more about the saddle points in learning trajectories, we may one day be able to help design educational curricula that lead people down the quickest path to learning, or to effectively learn specific styles,” concluded Dr Liebana.

In addition to possible educational implications, the study could offer potential improvements for AI. Understanding the learning dynamics of neural networks through their saddle points could considerably improve training algorithms. Further, the dopamine-based learning rule proposed in the study differs from those commonly used to train neural networks, yet it possesses unique features that could prove useful in certain settings.

Finally, the results demonstrate that the brain contains multiple dopaminergic teaching signals, each of which modifies a different component of behaviour. This knowledge could help target diagnostic and therapeutic approaches for correcting maladaptive behaviour and treating other disorders involving dopamine.

Find out more

- Read the paper in Cell, ‘Dopamine encodes deep network teaching signals for individual learning trajectories’ DOI: 10.1016/j.cell.2025.05.025

- Learn more about research in the Saxe Lab and Lak Lab