Neuroscientists welcome a paradigm shift in sensory physiology

By Hyewon Kim

Last month, neuroscientists from around the world and across London gathered to discuss how best to implement neural recordings and data analysis of freely moving animals in ethologically relevant tasks. Organised by SWC Senior Research Fellow Sepiedeh Keshavarzi and Francis Crick Group Leader and SWC affiliate Petr Znamenskiy, the Senses in Motion symposium brought together multiple streams of research findings and future directions, encouraging the continued pursuit of understanding how brain processes in freely moving animals give rise to complex behaviour.

For some of the speakers, it was the first time in a while that they had seen three-dimensional faces in the audience. The energy was palpable – attendees were excited to see each other in the crowd, putting a face to a familiar name. The goal of the Senses in Motion symposium was to build bridges between potential collaborators in the hope of sparking new experimental and data analysis paradigms, energised by the influx of novel ideas presented by a line-up of neuroscientists breaking the mould.

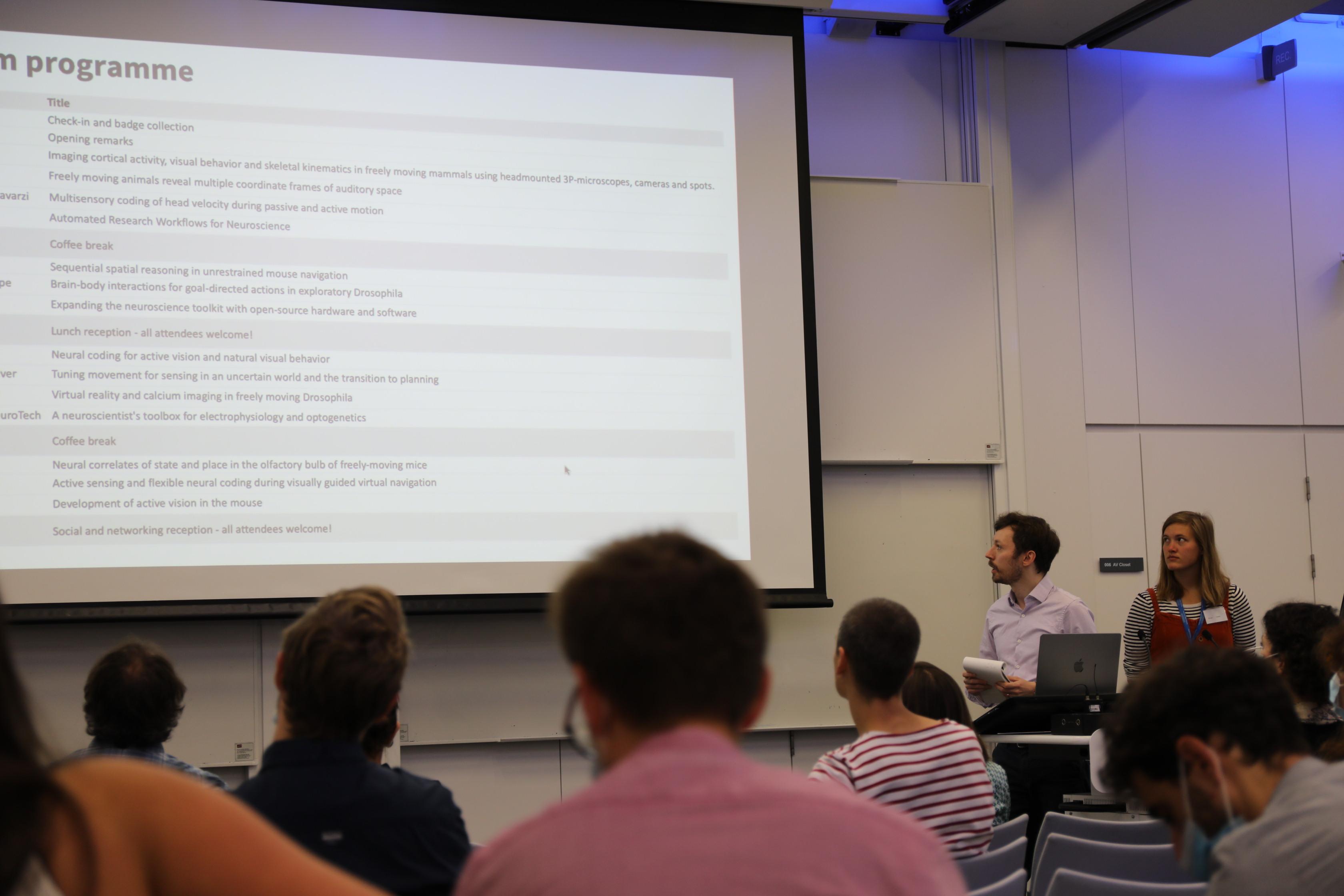

Chairs of the first session, Petr Znamenskiy and Nicole Vissers, described the day’s programme.

Using new technology to study freely moving behaviour

How does the brain create hypotheses to make sense of the world? Jakob Voigts, Group Leader at Janelia, began his talk by pointing out that in language, the words in a sentence only make sense in the context of the words that come before them. To investigate how hypotheses are formed in the brain of a model organism, Jakob’s team reduced the torque applied to the mouse’s head during open electrophysiology, using a lightweight cable he described as a “wet noodle”. This helped them achieve robust neural recordings in a freely behaving mouse. When the mouse was presented with increasingly informative landmarks, the team found that retrosplenial cortex neurons encode hypotheses, or history-dependent integrated representations: the same sensory input could have different meanings for the brain. Plotting the neural state space showed that, through learning, the retrosplenial cortex can separate hypothesis states depending on self-motion, without external context input provided by other brain regions.

Jakob Voigts presented his work on how a mouse can use an internal model of the world to disambiguate otherwise ambiguous stimuli during navigation by engaging in sequential spatial reasoning.

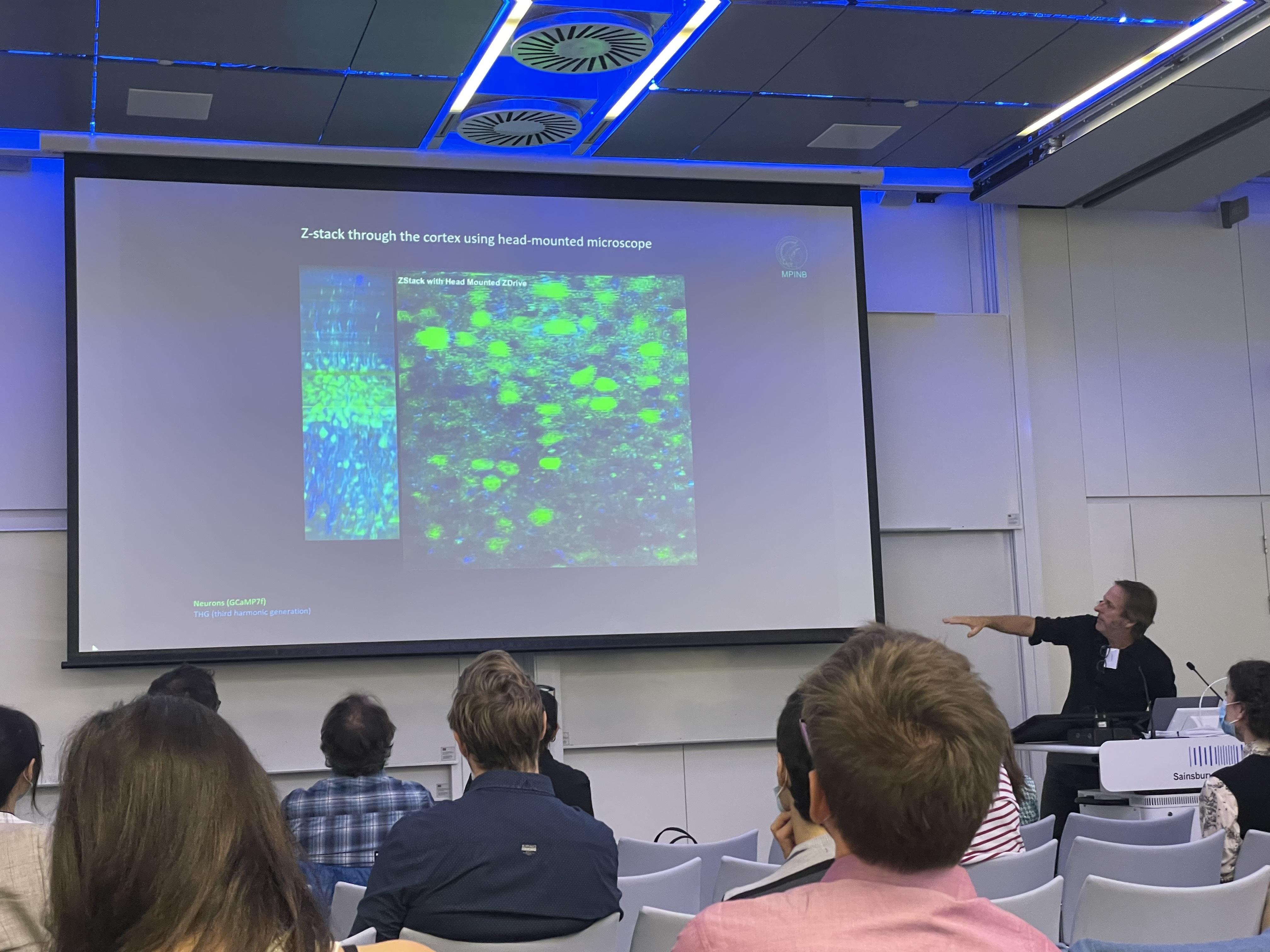

Jason Kerr, Professor at the Max Planck Institute for Neurobiology of Behavior, also shared his lab’s story about developing technology to understand freely moving behaviour. How does the brain distinguish self-motion from object-motion? To answer this question, the team mounted a lightweight camera on the head of a freely moving mouse to carefully measure its head and eye positions, and also tracked the position of a cricket that the mouse chased. They found that mice detected and tracked prey in a single quadrant of their peripheral field of view. To understand this result in the context of retinal functional architecture, they developed a 2-gram three-photon microscope mountable on the head of a freely moving mouse that could target deeper cortical layers than two-photon microscopes. The talk ended with the Kerr lab’s recent work developing technology to track the skeleton of moving animals as a proxy for brain activity, as skeletal movements would reflect the animal’s decision to interact with the environment.

By combining head-mounted cameras, three-photon imaging, and body tracking, Jason Kerr’s team aims to establish dense recordings from animals as they distinguish self-motion from object-motion.

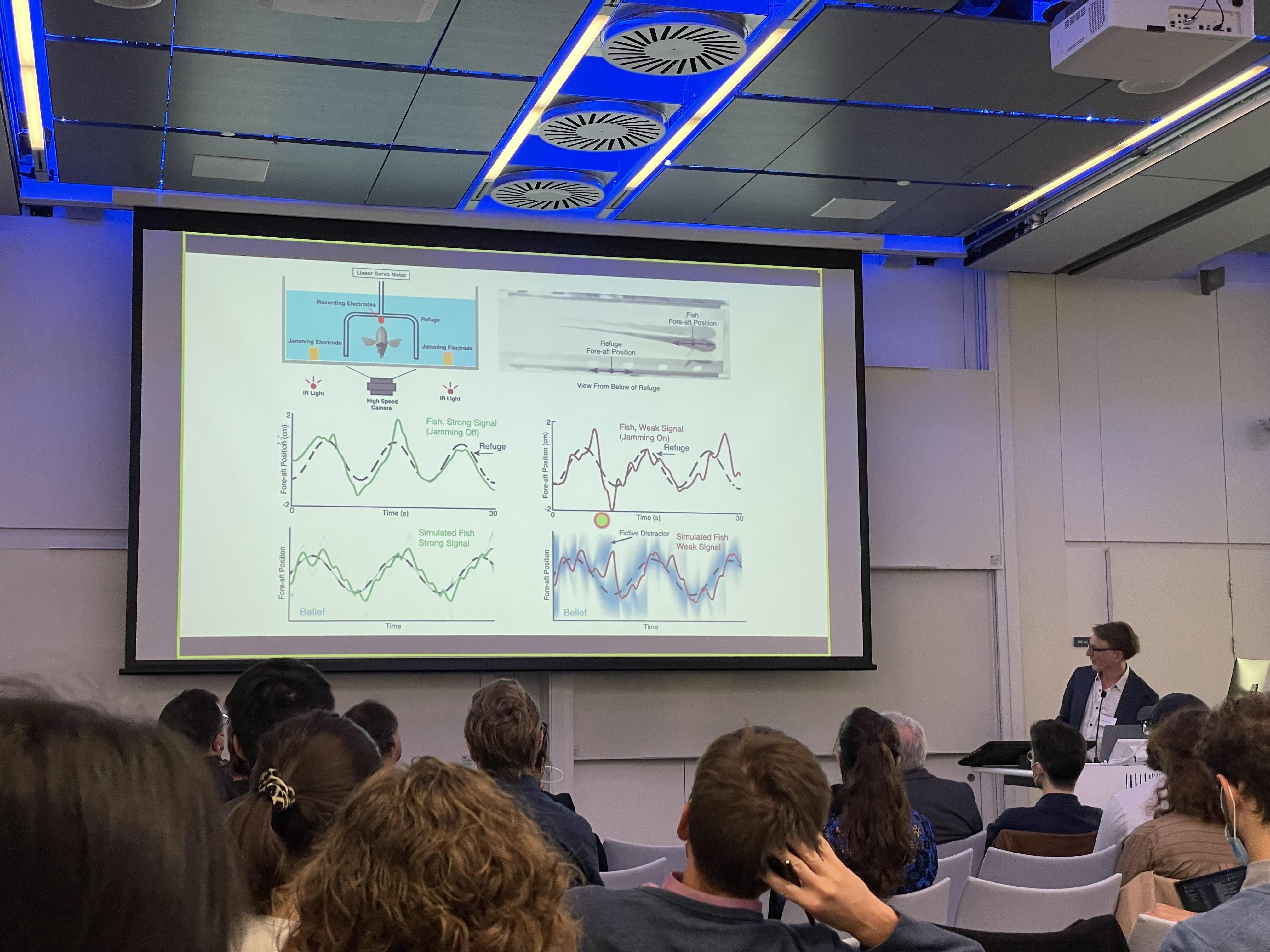

Malcolm MacIver, Professor at Northwestern University, shared his work to understand a peculiar behaviour in another model organism. He presented data showing that fish oscillate their bodies when hiding from a predator to collect information about a refuge, such as weeds in a river. The fish engage in a ‘gamble’, expending energy but gaining information about the refuge in the process (this oscillatory movement increased when the electric field of the fish was blocked). He referred to this process as ergodic information harvesting (EIH). How does environmental structure affect predator-prey interactions on land? In another experiment with mice, the team used a robot generating puffs, which mice avoided, to discover that there was coupling between what the faux-predator was doing and what the mice were estimating to be the robot’s position through peeking. Cryptic state updates could occur in tandem with the need to act, especially when trial and error could become ‘trial and death’ for an animal.

Malcolm MacIver’s team could use the EIH algorithm to control an underwater electrolocation robot.

Virtual reality tools

Developing technology to understand the brain’s role in freely moving behaviour extended to virtual reality (VR) tools. Dora Angelaki, Professor at New York University, discussed active sensing and flexible neural coding during visually guided virtual navigation. She began by explaining how sensory information generates motor output, which in turn affects sensory input, forming a feedback loop. Dora’s team achieved this feedback loop in VR, creating sequential decision-making tasks. Macaques that wore VR goggles and were trained to follow a virtual flashing target, or ‘firefly’, with a joystick performed the task well and could even generalise when the gain of the joystick was changed or they were presented with multiple fireflies. The team found that the medial superior temporal area or classical sensory areas of the brain have very different properties when the animal performs this firefly task than in more constrained task environments, such as the two-alternative forced-choice task. The team also found, through human VR studies, that eye gaze played an important role in navigating complex environments.

Dora Angelaki provided a critique of two-alternative forced-choice tasks, which were developed in the 1970s and 80s and were suitable for the limited technology at the time. What we experience in real life is very different from these tasks, she said.

Jennifer Hoy, Assistant Professor at the University of Nevada, Reno, joined the symposium remotely to discuss active vision in development. Her team wanted to know how mice learn to identify, pursue, and approach objects over the course of development. They presented virtual crickets to a freely moving mouse to compare pursuit behaviour in adult versus juvenile mice. Adolescent mice approached the cricket more impetuously, rather than taking the back-and-forth, approach-and-flee trajectory of juvenile and adult mice. Previous work had shown that leptin receptor neurons in the hypothalamus project to the periaqueductal grey. As leptin is a hormone that decreases appetite, the team decided to investigate the effect of hunger states on prey capture. When they excited this neural pathway, the approach of the mice to virtual prey was delayed and the approach frequency also went down. When the pathway was inhibited in adolescent mice, the approach behaviour became even more impetuous.

Jennifer Hoy shared that human infants have an amazing visual orienting response and are able to process a variety of rich visual stimuli despite limited spatial acuity.

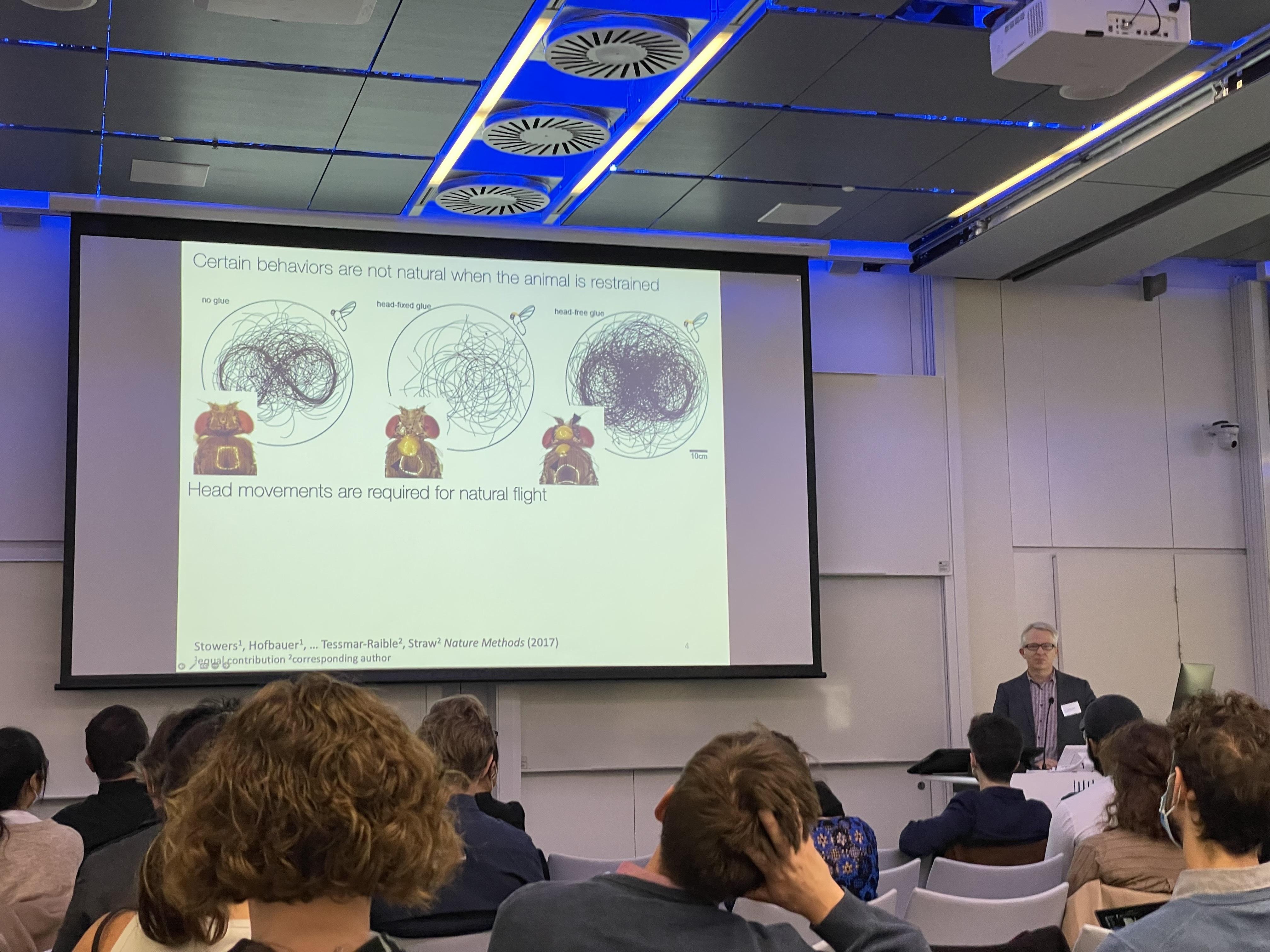

Andrew Straw, Professor at the University of Freiburg, set out to discuss VR and calcium imaging in the freely moving fruit fly Drosophila. Driven by the need to truly understand mechanisms of visually guided behaviour, the team turned to freely behaving animals. Andrew shared data from experiments on freely behaving flies, made possible by multi-camera, low-latency tracking software that allowed the team to create VR environments. His team also built small VR arenas where they studied the effect of insecticide on the optomotor behaviours of flies. Though not finalised, the results showed that insecticide exposure decreased the frequency of flight in Drosophila. Through calcium imaging, his team is currently recording from neurons of the ellipsoid body of flying Drosophila using an experimental setup in which feedback from a light reflector on the fly’s head is used to steer a mirror, keeping the spot of light centred on a detector.

Andrew Straw’s team found that in freely flying flies whose head was fixed to the thorax, flying in a figure-eight shape was almost impossible. In tethered flying flies with the head glued to the thorax, however, the task was possible.

Studying vision in naturalistic behaviour

The decision to use Drosophila as a model organism was shared by Eugenia Chiappe, Principal Investigator at the Champalimaud Centre for the Unknown, who discussed the role of visual circuits of the brain in lower-level motor control. Eugenia’s team presented a freely walking fly with small or large moving dots, to which the fly showed varying degrees of turning behaviour depending on its ability to detect the dots – a classic optomotor response. Using a combination of freely moving behavioural analysis and patch clamp recording in head-fixed flies, the team found that lobula plate neurons, which are selective for both visual and non-visual signals, integrate these multimodal signals in an optimal manner. They also found that the neurons adjust the weights of the visual signals according to their reliability. By silencing or stimulating sensory neurons in the lobula plate, her team also showed how this system is tuned to operate in a short-timescale but elegant motor context.

Eugenia Chiappe observed how freely moving flies acquired information relevant for behaviour. The gain of the optomotor response was high in flying flies, but low in walking flies.

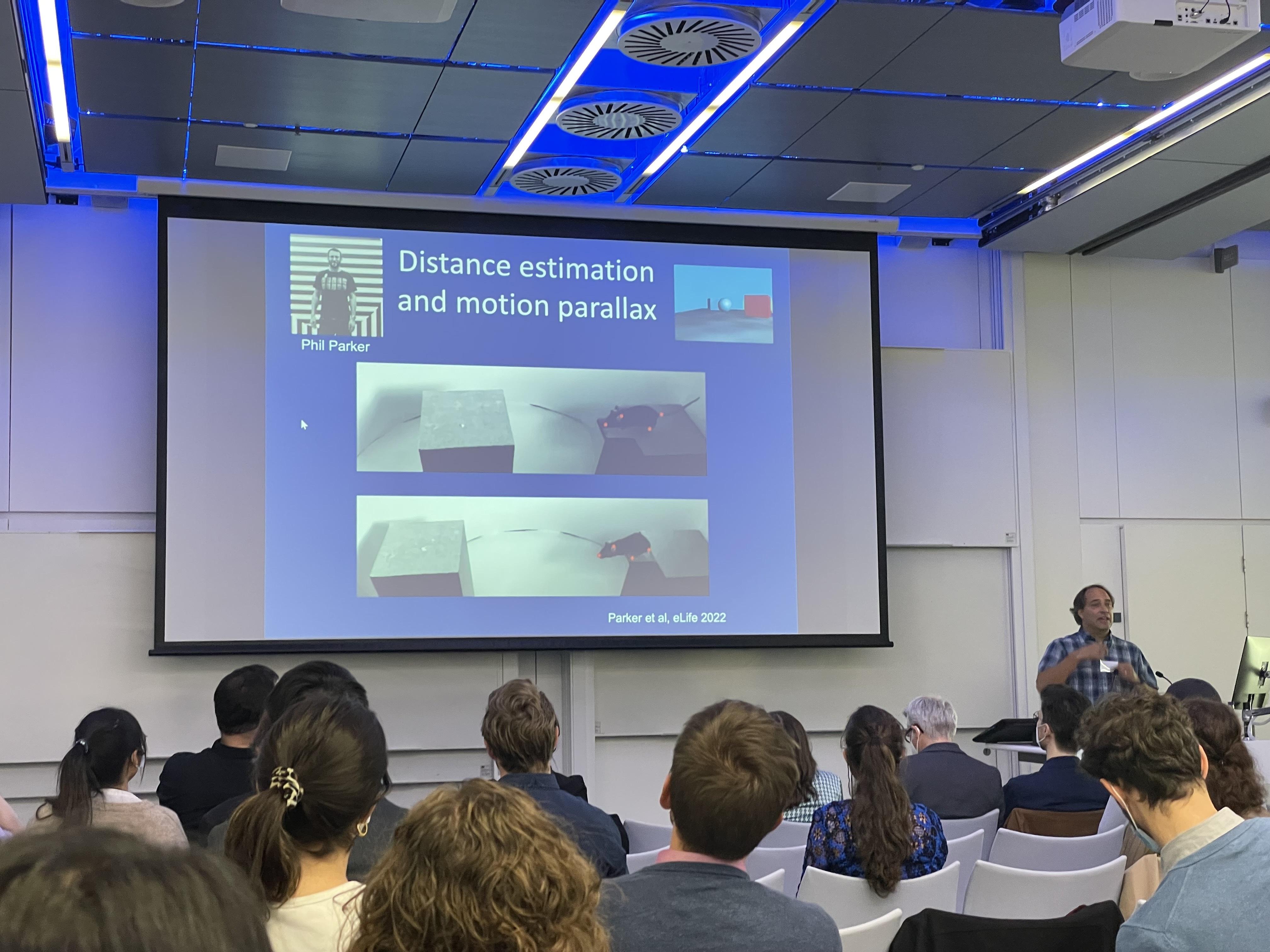

Cris Niell, Associate Professor at the University of Oregon, wanted to know how vision is used to move through the world by studying prey capture behaviour in mice. Previous work from his lab had shown that two populations in the brain’s striatum were engaged in different ways when mice detected versus approached a cricket. His team also found that mice bobbed their heads before jumping across a gap, generating motion parallax that helped them estimate the distance. In other work, Cris’s team simultaneously recorded the visual scene, eye position, and neural activity of freely moving mice using a set of head-mounted cameras, and mapped the neurons’ visual receptive fields using machine learning. Through clustering analysis, they found a striking temporal progression in the preferred spatial frequency of visual system neurons’ responses. This strengthened the theory that the brain first processes the coarse organisation of a stimulus and then proceeds to fill in the details.

Cris Niell described the simultaneous measurement of the visual scene, eye position, and neural activity of freely moving mice as the “dream experiment” he had envisioned as a postdoc himself.

Making sense of the world across sensory modalities

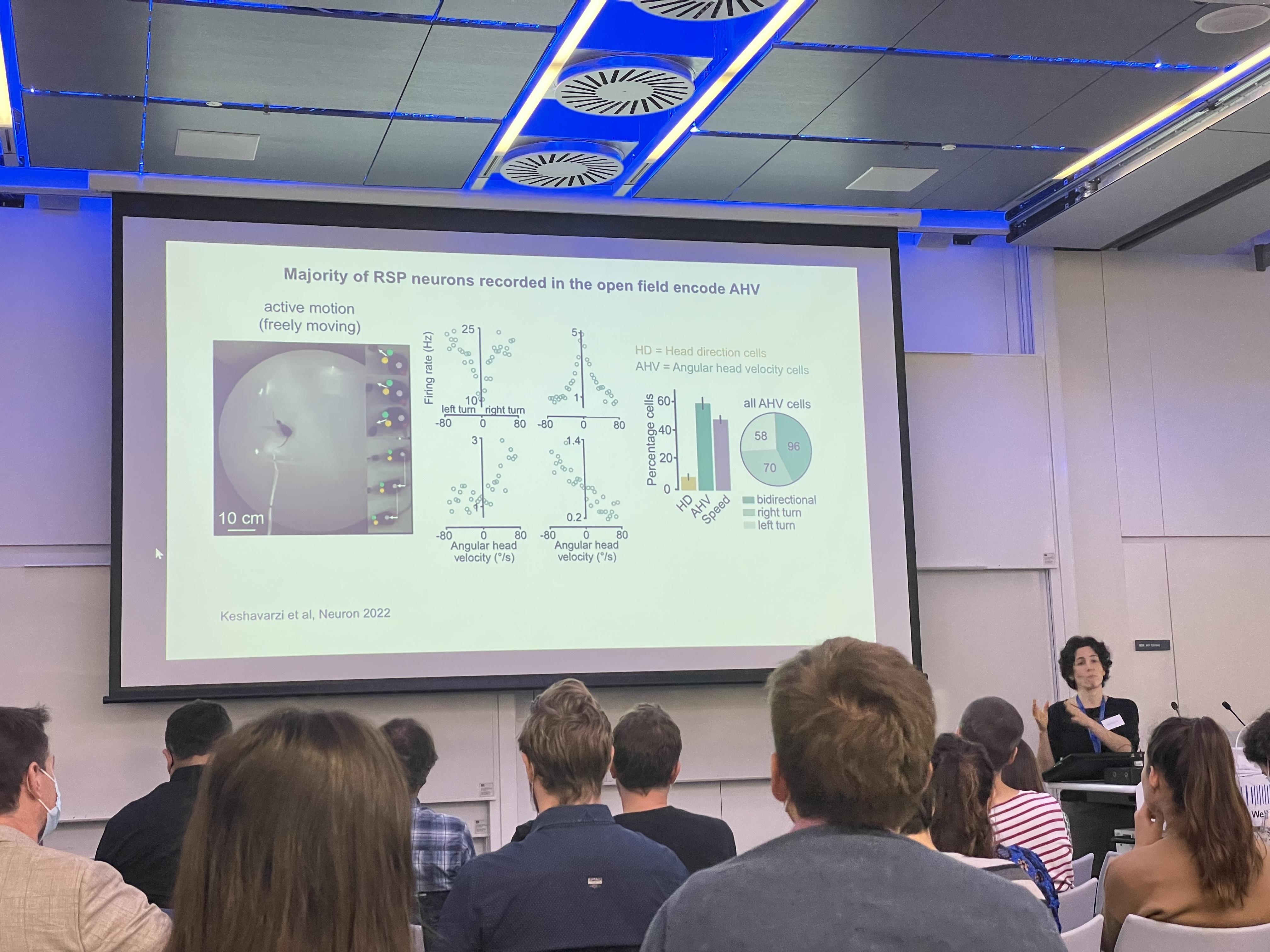

How mice use vision was also discussed by symposium co-organiser and SWC Senior Research Fellow Sepiedeh Keshavarzi, but in a different context. Sepi wanted to know how sensory representations in freely moving animals relate to those recorded under restrained conditions. Her work combined head-fixed and freely moving paradigms, focusing on the retrosplenial cortex (RSP) in the mouse brain. By using an experimental design where the mouse was head-fixed on a rotating platform with or without visual input, Sepi and colleagues found that visual and vestibular signals converge onto angular head velocity (AHV) cells in RSP. What is the functional importance of AHV signals? She collaborated with the Branco lab at SWC to investigate this question in freely moving mice. When they inactivated RSP axon terminals in the superior colliculus, they found that the signals encode the wrong orientation during escape behaviour. This highlighted the importance of head motion signals during freely moving behaviour.

Knowing that RSP sends projections to visual cortex, Sepiedeh Keshavarzi hypothesised that head-motion signals are passed on to visual cortex.

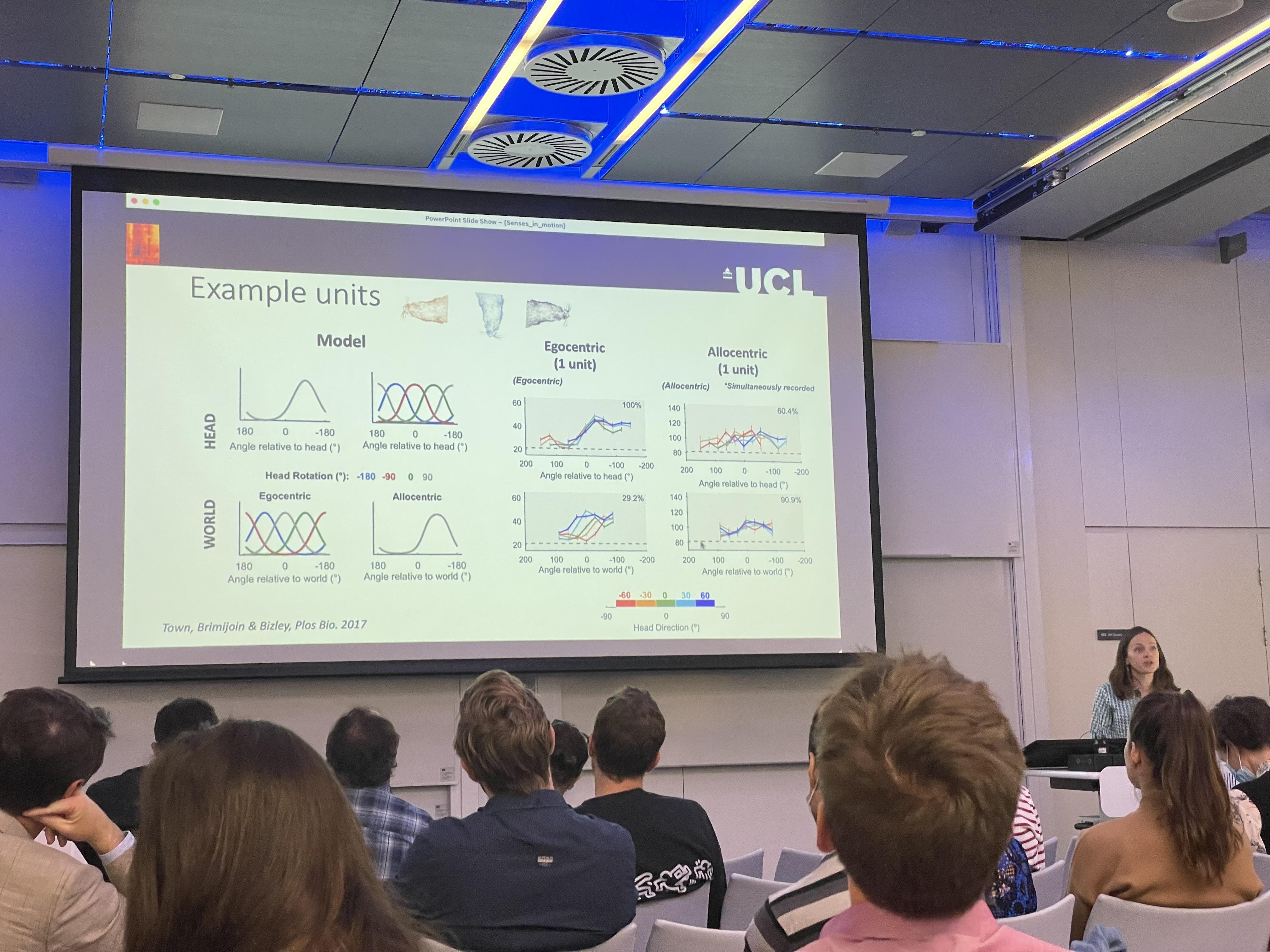

Jennifer Bizley, Professor at the UCL Ear Institute, focused on another sensory modality to explain how the brain could inform our sense of place in the world. In auditory neuroscience studies, model organisms are often head-fixed. In contrast, Jennifer’s team found that data from freely moving ferrets reflected an egocentric rather than an allocentric framework when compared to modelled responses. By constructing spatial receptive fields from sound sources at different distances, they found that in egocentric units, but not allocentric units, spatial receptive fields are more strongly modulated by sound sources further away. In another study, Jennifer’s team found that theta oscillations in the ferret hippocampus are robust and persistent regardless of movement. Using an experimental paradigm where ferrets were placed on a rotating platform with sound sources coming from different directions, her team found that world-centred and head-centred representations led to different structures of behaviour. This showed that there are multiple coordinate frames in the auditory cortex.

Jennifer Bizley explained how naturalistic real-world sound localisation is critical for organising auditory scenes, such as listening to a friend in a busy restaurant by pinpointing their position in space. In the real world, the auditory scene is dynamic as you move through the environment, but you have no problem disambiguating self- from source-motion.

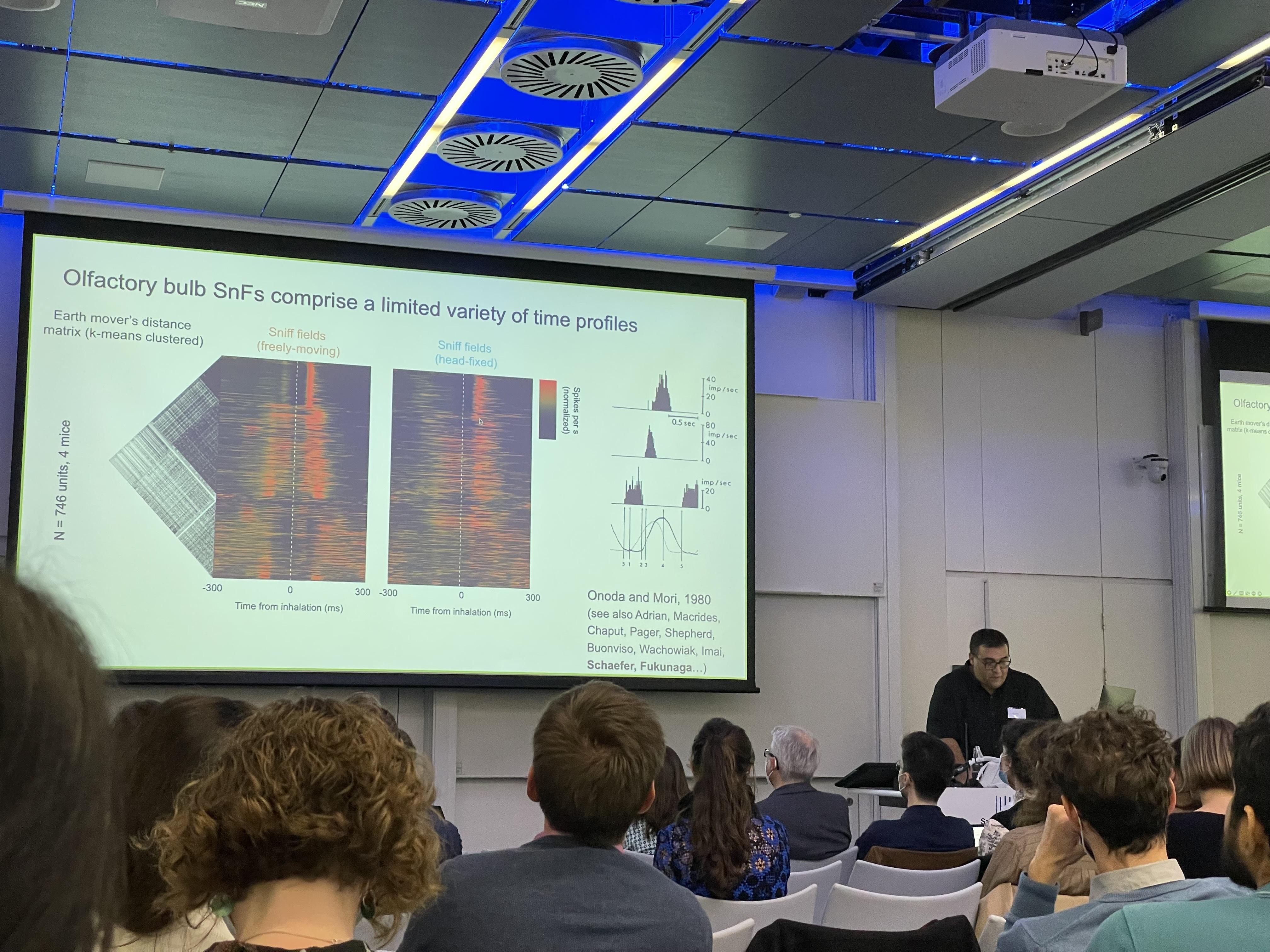

The "Nothing Seinfeld" condition

In addition to vision and audition, olfaction was also key to understanding ‘senses in motion’. Matt Smear, Associate Professor at the University of Oregon, began by proposing that sensory processes are acquisitive, not passive – especially in olfaction. To study active olfaction, the Smear lab recorded spontaneous neural activity during uninstructed behaviour while a mouse was exposed to ambient stimuli. Matt called this condition the “Nothing Seinfeld” condition, referring to George and Jerry’s “Nothing” show in the series. When the animal’s location was decoded from neural population activity in the olfactory bulb, the neurons were found to contain significant information about the animal’s location. Time and sniff frequency also contributed significantly to the animal’s place information. Matt speculated that place-related activity in the olfactory bulb incorporates odours into cognitive maps. The extensive connectivity between the hippocampus and olfactory bulb could explain how, as Helen Keller described, “smell is a potent wizard, that transports you across thousands of miles.”

Matt Smear’s team converted spike data to MIDI (Musical Instrument Digital Interface) signals to make sense of the data. Recording in both head-fixed and freely moving conditions, they found that at the population level, neural activity showed discrete states that reflected behaviourally defined states.