Working memory representations: practice makes perfect

An interview with Professor Peyman Golshani, UCLA, conducted by April Cashin-Garbutt

From recalling a shopping list to remembering a six-digit activation code, we rely on our working memory every day. Surprisingly, the mechanisms underpinning this ability are not well understood.

During a seminar at SWC, Professor Peyman Golshani presented his latest research into how working memory is represented in the brain during learning. In this Q&A, he shares more on the tools his lab developed to study working memory and the broader implications for understanding neuropsychiatric conditions.

How much do we rely on working memory for our cognitive functions?

We rely on working memory tremendously. Almost everything we do involves it, whether it's remembering a phone number or following instructions moments after reading them. Working memory is fundamental to functioning in the world.

What first sparked your interest in trying to understand the mechanisms underlying working memory?

I knew that working memory was an extremely important cognitive attribute and also that it is disrupted in a number of psychiatric disorders including schizophrenia. I also thought it was more tractable to study than long-term memory because it involves shorter timescales – just a few seconds.

Although working memory has been studied for over 50 years at the neurobiological level, we still don’t fully understand how neural circuits carry it out, which makes it a compelling area of study.

Why has it been so challenging to understand the mechanisms?

I think this is partly because the tasks used to study working memory were difficult to train in animals and were mostly limited to non-human primates. Early technologies only allowed researchers to record from neurons one at a time and lacked precision in manipulating specific neurons.

Now we have new tools. For example, we can record from over 70,000 neurons at the same time and selectively inhibit or excite specific cells, which will help tremendously.

How have you overcome these challenges?

We adopted an olfactory-based task developed by our colleague, Chengyu Li, in China. Mice find olfactory tasks easier than visual or auditory ones, and they can perform complex comparisons of odours separated by several seconds.

We ensured odours were cleared quickly and controlled for mixing. Remarkably, mice learned the task within a week, far faster than with traditional tasks, which can take months.

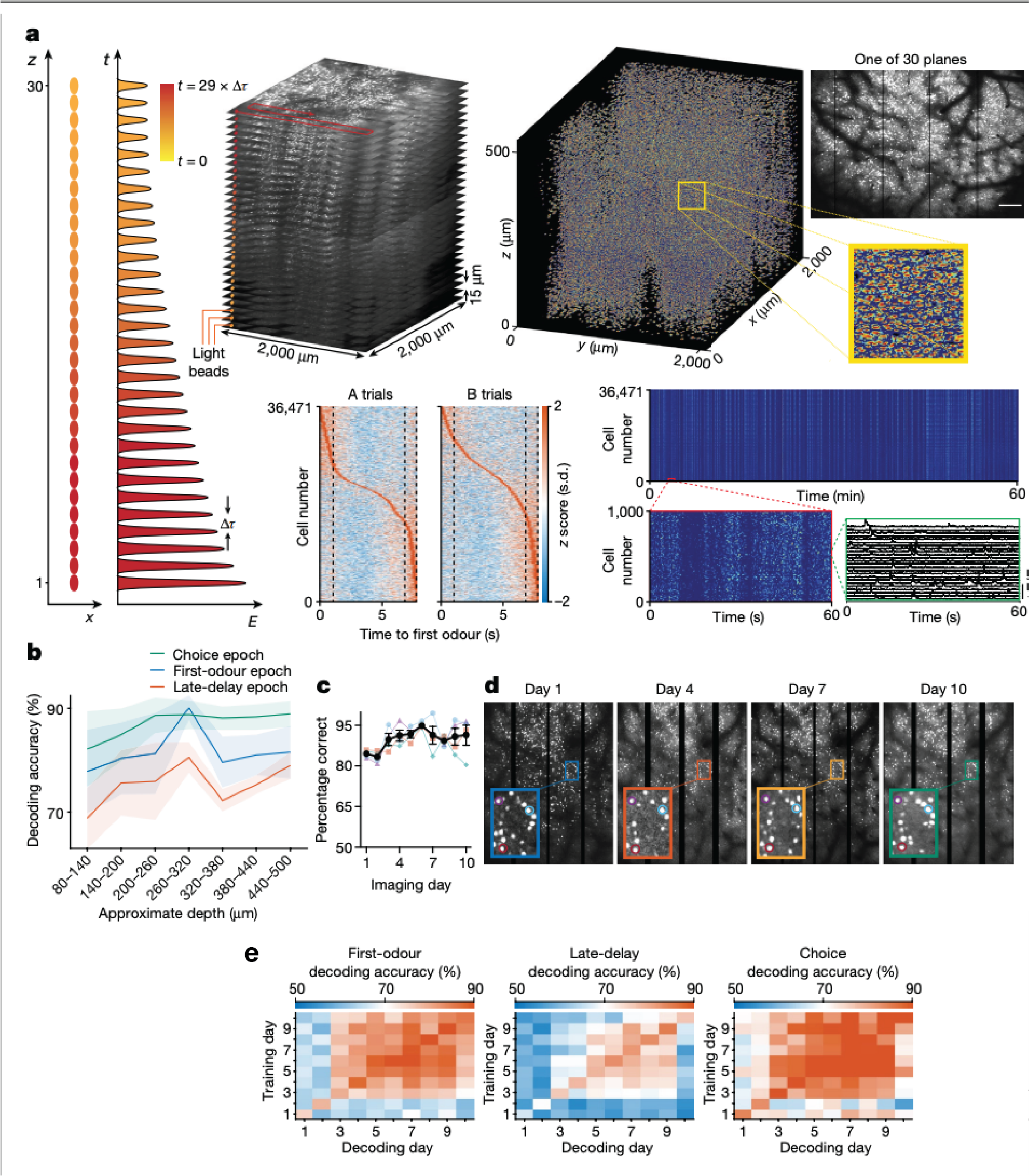

In terms of the imaging modality, we initially used our Thorlabs mesoscope, which could image hundreds to a few thousand neurons at a time. Later, we used light beads microscopy (LBM), developed by Alipasha Vaziri’s lab. With LBM we can image up to 30 layers simultaneously across a large area, allowing us to record from around 70,000 neurons. This was critical for the results we observed.

Why did you use optogenetics and what did this reveal?

Optogenetics allowed us to inhibit neurons with high temporal precision – down to specific seconds within a task. This wouldn’t be possible with drugs, which linger for minutes or hours.

This allowed us to inhibit neurons during different phases of the task, such as the odour presentation or the beginning or end of the delay period, to understand the role of each phase in working memory. This was really useful and wouldn’t have been possible in the past.

What were your main findings?

The main finding was that even after mice had learned the task and performed it proficiently during the first few days, the neural activity representing working memory was initially unstable, with different sets of neurons involved each day.

Over time, as the mice kept practising the task, these patterns stabilised, or “crystallised,” meaning the same neurons consistently represented the same information day after day. This was the novel finding.

Were you surprised by this crystallisation process and why do you think this occurs?

Yes, because while task performance was stable early on, the underlying neural activity was not. It only stabilised after repeated practice.

We hypothesise that early instability allows flexibility in case the task changes, but once the task becomes predictable, the brain commits specific neurons to it.

What are the main implications of this research? Could it help us to understand some neuropsychiatric disorders?

This crystallisation process might be altered in neuropsychiatric disorders. For example, it could occur too quickly or too slowly.

Our research may hold some clues about behavioural rigidity seen in conditions like autism or obsessive-compulsive disorder (OCD), where people fall into very crystallised patterns of behaviour that they can’t change easily.

Understanding the plasticity driving this process could offer new insights into these disorders.

What’s the next piece of the puzzle you hope to solve?

We’re trying to manipulate plasticity within the cells by using CRISPR gene-editing technology to delete genes, such as those encoding NMDA receptors, to see how this affects either learning of the task or the neural activity patterns.

We also want to study inhibitory neurons, which make up about 20% of cortical neurons and may play a critical role. These cells often fire at very high rates, requiring new imaging techniques beyond calcium imaging.

Finally, can you give a brief overview of the open-source two-photon microscopy tools you have been developing? What impact do you hope these tools will have?

We began building microscopes around a decade ago to reduce costs. Commercial versions were prohibitively expensive, so we created open-source one-photon miniature microscopes that cost around $1,000–$2,000 to build. We released all the designs and eventually over 800 labs built them.

Those microscopes used epifluorescence imaging, and the resolution was poor, so you couldn’t see axons or dendrites. That is why we wanted to build an enhanced version.

We’ve now developed a two-photon microscope that can image deeper with better resolution and good field of view, which can be built for about $10,000. We’re continuing to improve these tools to expand their capabilities.

About Peyman Golshani

Peyman Golshani obtained his MD/PhD from UC Irvine, where he trained with the late, great Edward G. Jones on the development of corticothalamic function. He completed his neurology residency at UCLA, after which he was appointed Assistant Professor. He is now a Professor in Residence in the Department of Neurology and Semel Institute at UCLA. His laboratory focuses on understanding large-scale neural dynamics in cortex, hippocampus and striatum in cognitive and social tasks, as well as understanding how these patterns go awry in models of neuropsychiatric disease. He is also developing new tools for recording neuronal activity in freely behaving animals.