Diversity of dopamine neurons

An interview with Professor Naoshige Uchida, Harvard University, conducted by April Cashin-Garbutt, MA (Cantab)

Dopamine neurons were traditionally thought to be activated by reward, but recent research has found that some are only activated by non-rewarding stimuli such as punishment or novelty. I spoke with Professor Naoshige Uchida to learn more about his lab’s research into the diversity of dopamine neurons and how the existence of multiple learning systems could be an adaptive strategy.

What are the key pieces of evidence indicating that dopamine neurons broadcast the discrepancy between actual and predicted reward to drive learning?

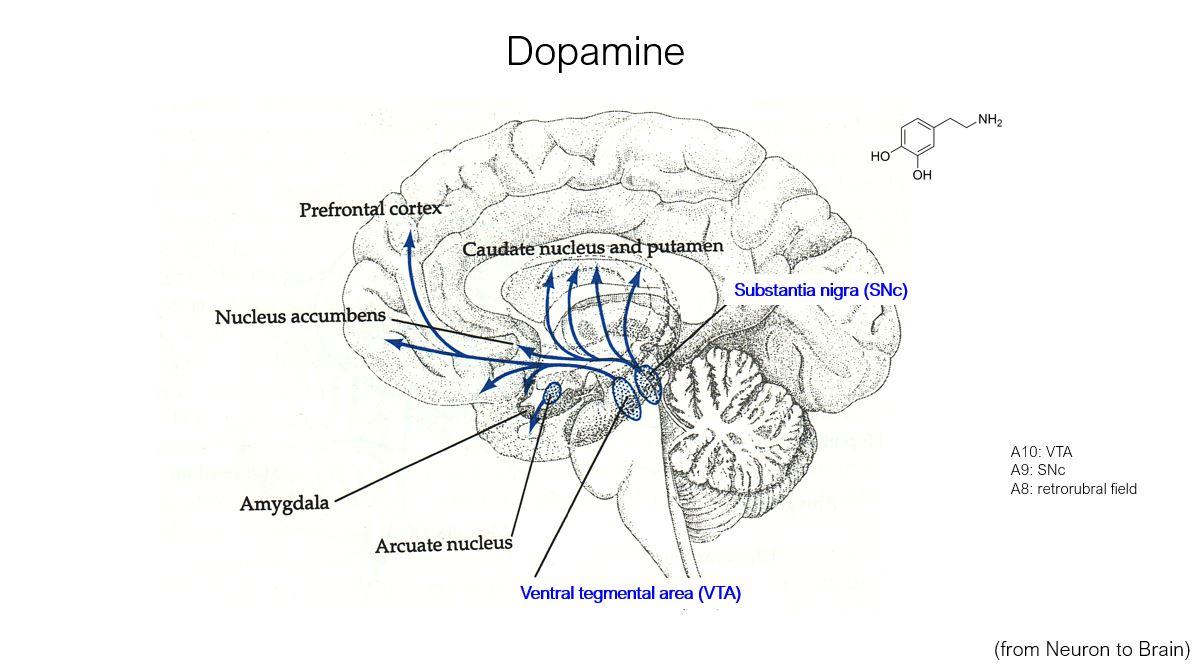

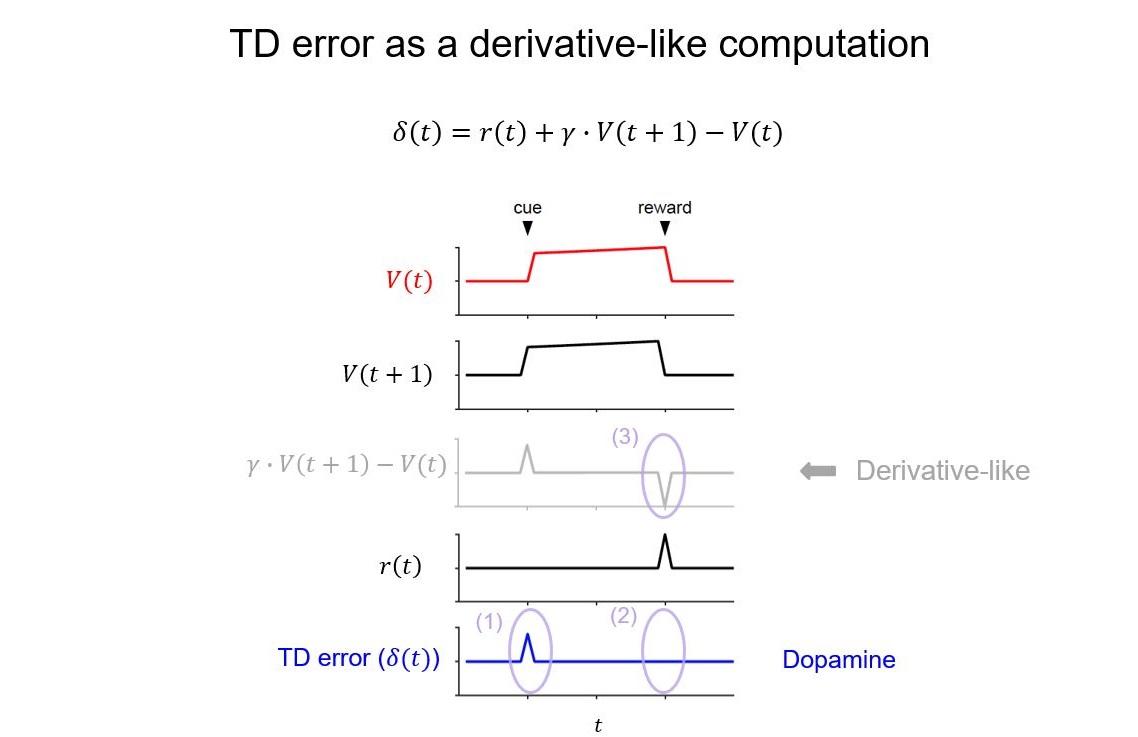

The biggest piece of evidence came a long time ago from studies by Professor Wolfram Schultz indicating that dopamine signals resemble, almost precisely, the error term of temporal difference (TD) learning models, a prediction error signal used to train computers.

This remarkable resemblance made many people think that dopamine neurons broadcast a reward prediction error signal. More quantitative work by Schultz also provided good evidence that dopamine neurons broadcast the discrepancy between actual and predicted reward to drive learning.
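The TD error mentioned above can be illustrated with a minimal sketch. It is not taken from the interview or from Schultz's studies: the function names, the five-step trial, the learning rate `alpha` and the discount factor `gamma` are all assumptions chosen for illustration. It only shows that the error at the time of reward shrinks as the reward becomes predicted.

```python
def td_error(reward, value_next, value_current, gamma=1.0):
    """Temporal difference error: actual reward plus discounted next
    prediction, minus the current prediction."""
    return reward + gamma * value_next - value_current


def run_trials(n_trials=200, n_steps=5, alpha=0.1, gamma=1.0):
    """Repeat a hypothetical trial in which reward arrives at the last step.

    All parameter values are illustrative assumptions.
    """
    # One value estimate per time step, plus a terminal state fixed at 0.
    values = [0.0] * (n_steps + 1)
    history = []
    for _ in range(n_trials):
        errors = []
        for t in range(n_steps):
            reward = 1.0 if t == n_steps - 1 else 0.0
            delta = td_error(reward, values[t + 1], values[t], gamma)
            values[t] += alpha * delta  # learn from the prediction error
            errors.append(delta)
        history.append(errors)
    return values, history


values, history = run_trials()
print("error at reward, first trial:", history[0][-1])
print("error at reward, last trial:", round(history[-1][-1], 6))
```

On the first trial the reward is fully unexpected, so the error at reward time is large. After many repetitions the value estimates anticipate the reward and the error at that time approaches zero.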

What evidence has recently been found that challenges this view? Are all dopamine neurons activated by reward and what else can dopamine neuron activity be correlated with?

Some neurons respond to non-rewarding stimuli such as threatening stimuli or novelty. More recently, some neurons have been found to change their activity with movement and to show slow fluctuations that are not typically predicted by conventional modelling, for example activity that slowly ramps up during freely moving tasks.

I think it is quite clear now that not all dopamine neurons are activated by reward; some are only activated by punishment. We have evidence that some dopamine neurons are activated by threatening stimuli such as air puffs and loud sounds. These findings are a big challenge to prediction error theories. We don’t know exactly what the responses to movement are encoding or how to put them into a theoretical framework. There is much more work to do.

How can we think of these diverse dopamine signals under the framework of reinforcement learning theory?

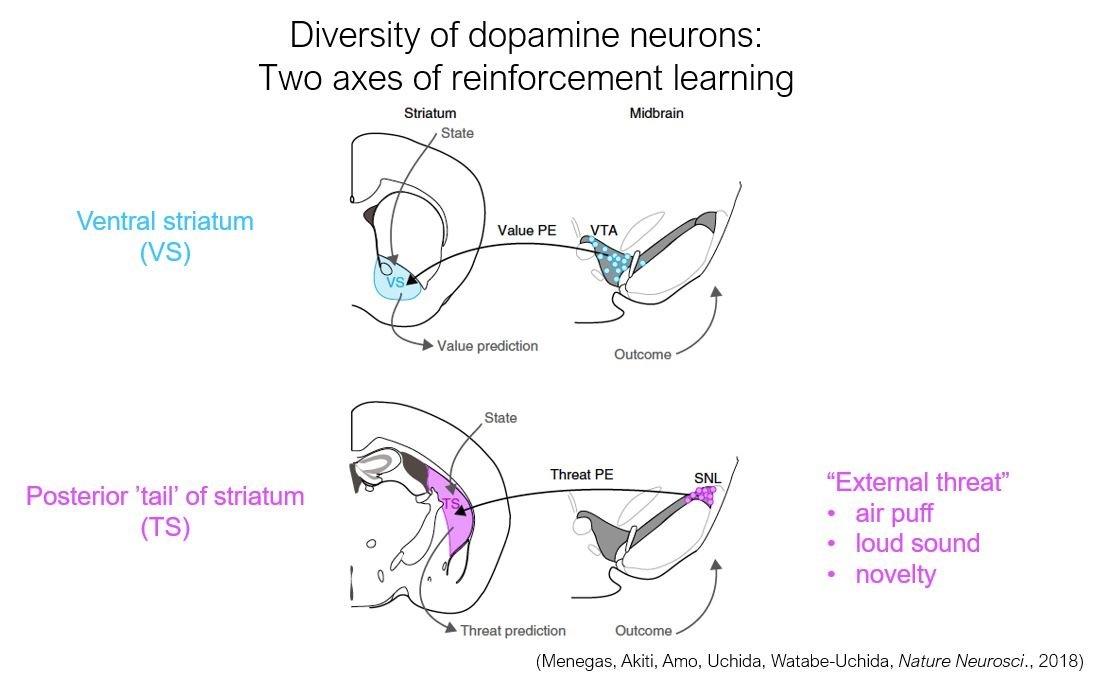

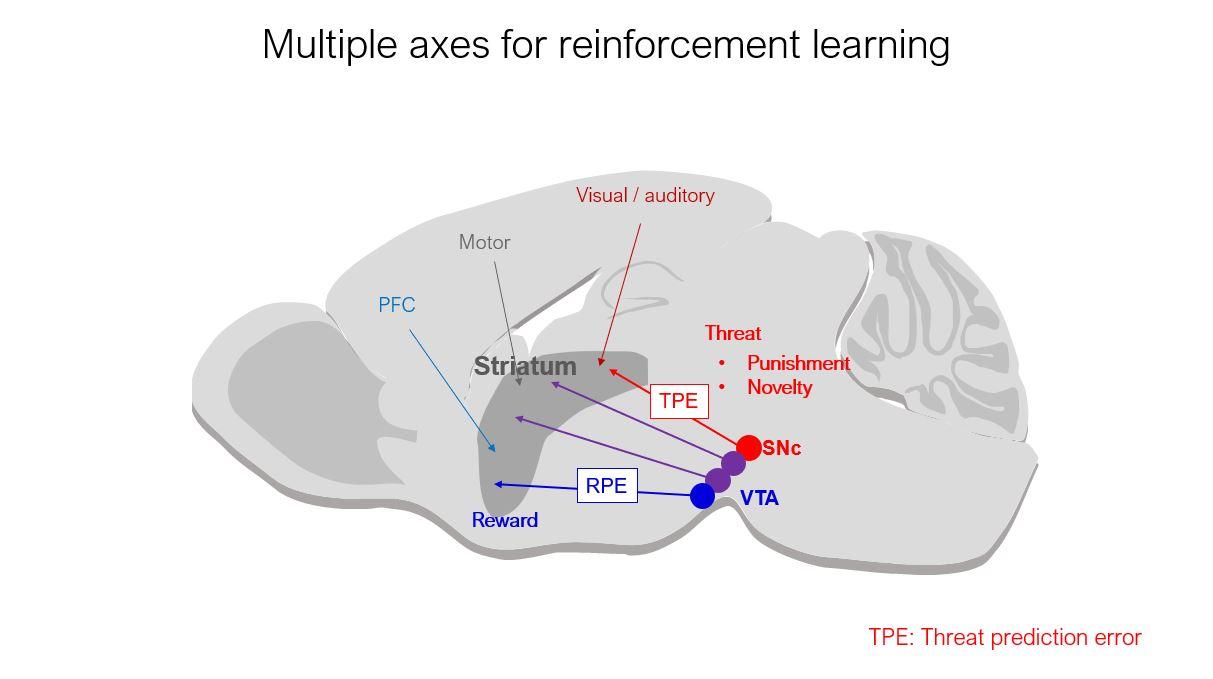

Our recent work in the posterior ‘tail’ of the striatum indicated that dopamine neurons projecting to this area encode a threat prediction error, so it is still a prediction error, but with respect to threatening signals.

There are a couple of interesting differences between threat prediction errors and conventional reward prediction errors, but an emerging view is that there are multiple reinforcement learning circuits predicting different things.

The conventional one is in the ventral striatum or nucleus accumbens and predicts outcome value, but the tail of the striatum is predicting threat. Given this line of thought, we can use the reinforcement learning framework to understand different dopamine systems.

We don't currently know how movement-related signals fit into this framework. However, because the cellular components are very similar between different regions of the striatum, I imagine we will be able to work this out, but it is a big future challenge.

How could the existence of multiple learning systems be an adaptive strategy?

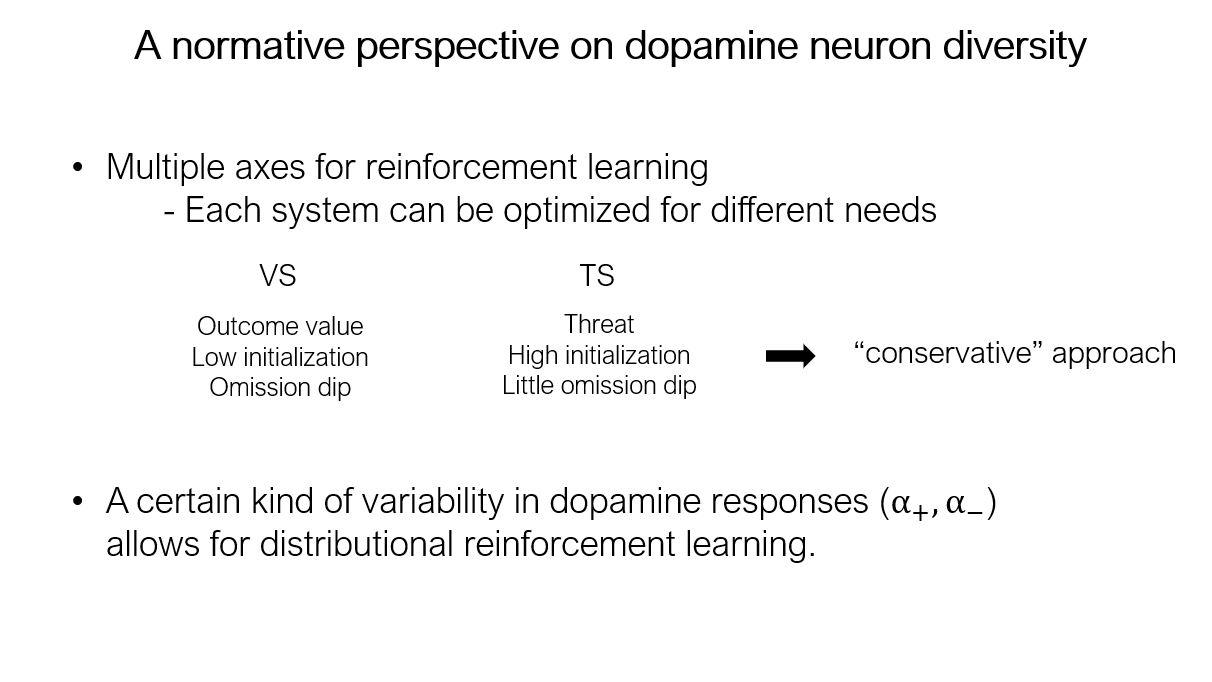

Having multiple learning systems could be an advantage in the sense that you can optimise each system to different needs depending on what the system is learning about. For instance, when you are learning about threat, you wouldn't want to learn slowly, otherwise you would be eaten! Instead, you would learn fast and then gradually relax through experience.

I think having multiple learning systems allows you to optimise each system for different needs, and the decision-making circuit could take advantage of the multiple systems to make a final decision.
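The idea of tuning each system to its own needs can be sketched as two prediction error learners that differ only in their learning rates. This is a toy illustration, not a model from the Uchida lab: the function names, the learning rates and the sequence of outcomes are hypothetical. The "threat" learner is assumed to update quickly when an outcome is worse than predicted and to relax slowly afterwards, while the "value" learner uses one moderate rate throughout.

```python
def update(prediction, outcome, alpha_up, alpha_down):
    """One prediction error update with separate learning rates for
    outcomes above (alpha_up) and below (alpha_down) the prediction."""
    error = outcome - prediction
    alpha = alpha_up if error > 0 else alpha_down
    return prediction + alpha * error


def simulate(outcomes, alpha_up, alpha_down):
    """Return the prediction after each outcome, starting from zero."""
    prediction = 0.0
    trace = []
    for outcome in outcomes:
        prediction = update(prediction, outcome, alpha_up, alpha_down)
        trace.append(prediction)
    return trace


# Hypothetical experience: three encounters with an event, then ten without.
outcomes = [1.0] * 3 + [0.0] * 10

# Assumed rates: the threat learner rises fast and decays slowly.
threat = simulate(outcomes, alpha_up=0.8, alpha_down=0.1)
value = simulate(outcomes, alpha_up=0.2, alpha_down=0.2)

print("after 3 events:   threat=%.2f value=%.2f" % (threat[2], value[2]))
print("after 10 absences: threat=%.2f value=%.2f" % (threat[-1], value[-1]))
```

With these assumed rates, the threat learner is close to a full prediction after three events and still holds a substantial prediction after ten uneventful trials, whereas the single-rate learner rises and falls more evenly.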

Is there any evidence that such an arrangement is conserved across species?

Yes, the multiple dopamine system idea was initially conceptualised in the fruit fly, Drosophila, and we are observing big parallels in the mammalian brain. It is also interesting to think about the evolutionary aspect in terms of how the multiple dopamine systems may have evolved or converged across species.

What is the next piece of the puzzle your research is going to focus on?

One major question is how to understand this multiple dopamine system idea. I'd like to push the idea of prediction-error-based learning for different things and see whether that's valid or whether we need different theories to understand other parts of the striatum.

Dopamine neurons project not only to the striatum but also to other regions such as the cortex. Figuring out what these dopamine signals are doing is also a very interesting direction to go.

About Professor Naoshige Uchida

Naoshige Uchida is a professor at the Center for Brain Science and Department of Molecular and Cellular Biology at Harvard University. He received his Ph.D. from Kyoto University in Japan, where he worked on the molecular mechanism of synaptic adhesions in Masatoshi Takeichi’s laboratory. He then studied olfactory coding in Kensaku Mori’s laboratory at the Brain Science Institute, RIKEN, Japan. He subsequently joined Zachary F. Mainen’s laboratory at Cold Spring Harbor Laboratory, New York, where he developed psychophysical olfactory decision tasks in rodents.

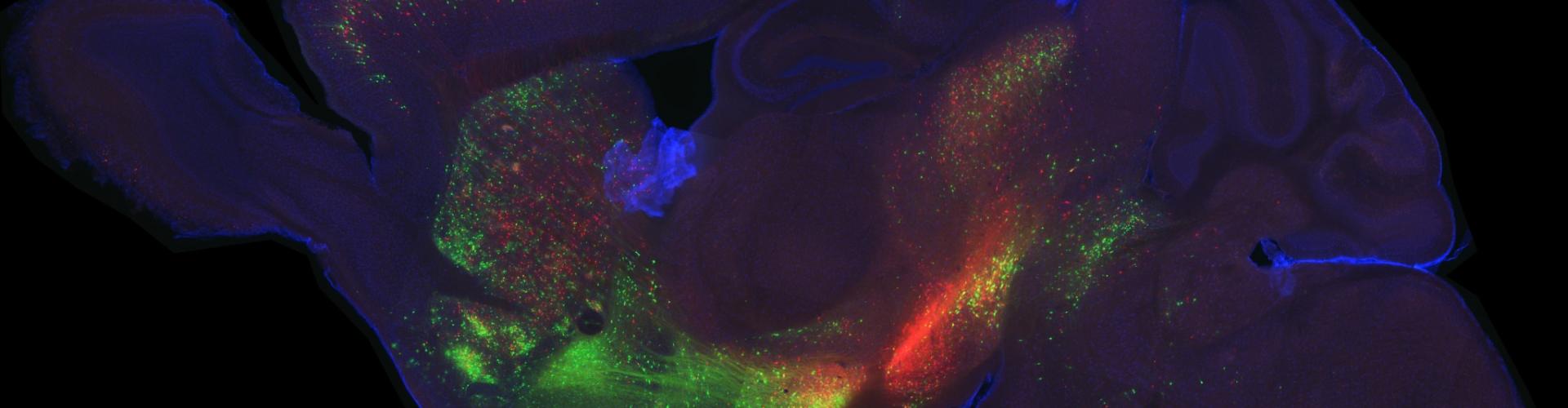

He started his laboratory at Harvard University in 2006. His current research focuses on the neurobiology of decision-making and learning, including neural computation in the midbrain dopamine system, functions of the cortico-basal ganglia circuit, foraging decisions and motor learning. His research combines quantitative rodent behaviors with multi-neuronal recordings, computational modeling, and modern tools such as optogenetics and viral neural circuit tracing.