Switching between egocentric and allocentric coordinates

An interview with Dr Andrew Alexander, Boston University, conducted by Hyewon Kim

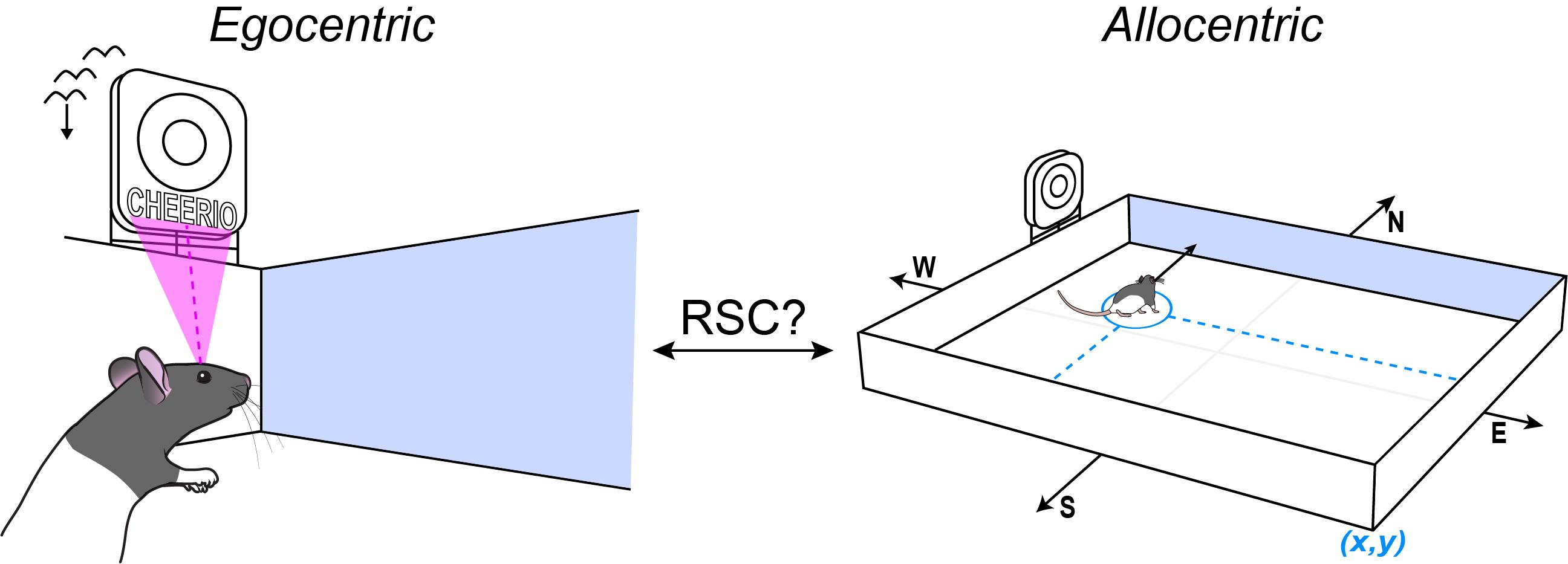

You may be able to picture in your head a bird’s eye view of your apartment and the nearest grocery store, but how does the brain switch between knowing where something is relative to your own position (an egocentric perspective) and navigating to locations using an internal map (an allocentric perspective)?

In a recent SWC Seminar, Dr Andrew Alexander shared his research on the role of the brain’s association cortices in transformations between allocentric and egocentric coordinate systems. In this Q&A, he discusses how he got interested in researching this topic, some of his key findings, and the next steps.

How are egocentric and allocentric perspectives defined?

An egocentric perspective describes where things are relative to you. For example, if you’re standing in a room with a chair to your right, and you move to a different spot in the room where that same chair is still to your right, then the chair has the same egocentric position relative to you at both locations.

In an allocentric coordinate system, the chair’s location is defined by its distance and direction relative to everything else in the room. So it’s not anchored to you.

The simplest way to think about it is that the egocentric view is what you currently see, and the allocentric view arises from your ability to think about what you’d see when you’re in another place in the world.
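To make the chair example concrete, here is a minimal Python sketch of converting a point between the two frames in a flat room. The conventions are assumptions chosen for illustration: the room frame has x pointing east and y north, heading is measured in radians anticlockwise from east, and the egocentric frame has x straight ahead and y to the left. The function names are hypothetical, and this is ordinary plane geometry, not a model of how the brain performs the conversion.

```python
import math


def allo_to_ego(obj_xy, agent_xy, heading):
    """Convert an allocentric (room-fixed) position into egocentric coordinates.

    Assumed conventions (illustrative only): room x = east, y = north;
    heading in radians, anticlockwise from east; egocentric +x = straight
    ahead, +y = to the agent's left.
    """
    dx = obj_xy[0] - agent_xy[0]
    dy = obj_xy[1] - agent_xy[1]
    # Rotate the displacement by -heading so that "ahead" lines up with +x.
    c, s = math.cos(heading), math.sin(heading)
    return (c * dx + s * dy, -s * dx + c * dy)


def ego_to_allo(ego_xy, agent_xy, heading):
    """Inverse transform: rotate by +heading, then add the agent's position."""
    c, s = math.cos(heading), math.sin(heading)
    ex, ey = ego_xy
    return (agent_xy[0] + c * ex - s * ey, agent_xy[1] + s * ex + c * ey)
```

For example, with the chair at (3, 0), standing at the origin facing north (heading π/2) and standing at (6, 0) facing south (heading −π/2) both give the chair an egocentric position of roughly (0, −3), three units to the right: the same egocentric position seen from two different allocentric vantage points.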

What first got you interested in researching spatial transformation?

I started by studying allocentric spatial representations such as place cells, and then became interested in how these receptive fields are constructed and anchored in a way that makes them usable. My colleagues and I eventually became interested in how cortical structures that are closer to sensory processing streams might integrate egocentric information and tie it to allocentric space. In a sense, we started from allocentric representations and then moved outward to see how sensory information is integrated.

What is already known about how the brain performs spatial transformations to enable navigation?

There would likely be some debate about this, so I’ll just note a few things that I would say we know and that I think about often. First, I believe most of what we understand about spatial transformations we have learned by examining sensorimotor behaviours. Some of this work essentially examines how the position of an object in the outside world (e.g. food) is detected by your sensors (e.g. your retina) and transformed into a series of motor commands that enable your effectors (e.g. your hand) to manipulate that object (e.g. to eat the food).

To quickly summarize only part of decades of incredible work in this domain, we’ve determined that (1) association cortices are involved in these transformations and (2) neurons in these regions exhibit multi-selectivity, meaning they are sensitive to the position of objects with respect to sensors and effectors simultaneously. This latter finding seems computationally critical for spatial transformations.

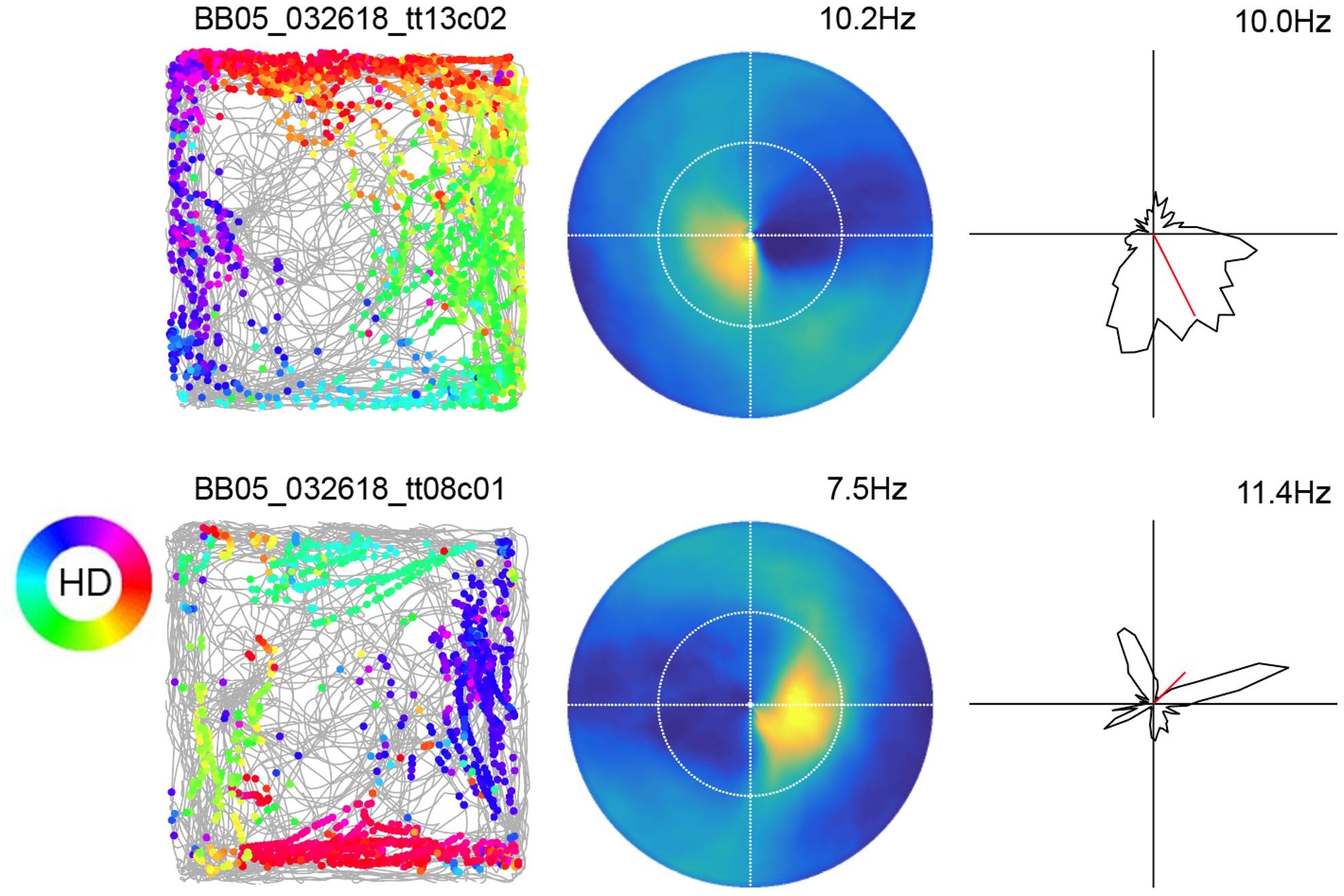

With respect to navigation, a major thing we know is that egocentric sensory information is incorporated into our stored knowledge about allocentric space. For instance, sensory landmarks can modulate where allocentric spatial receptive fields are positioned: in experiments where an animal runs around an environment and a visual cue is moved, the allocentric map moves proportionately with it. We also know that allocentric spatial maps are modified with experience in an environment as we integrate more and more egocentrically processed contextual information. So, we know that egocentric and allocentric coordinate systems are being integrated.

I think probably the most critical piece of the spatial transformation puzzle involves head direction neurons, which tell you where you’re oriented in allocentric space. Head direction neurons tell you, for example, when you’re facing the BT Tower, and how you should change your orientation if you are using that landmark to navigate from the SWC to Regent’s Park versus Hyde Park. Several lines of evidence indicate that angular velocity coded by the vestibular organs, which is an egocentric signal (how you turn), can be transformed into allocentric direction, which is the direction of things relative to the outside world. I think this sense of allocentric direction is the foundation upon which all other allocentric spatial mappings rest.
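The step from “how you turn” to “which way you face” can be illustrated with idealized angular path integration: summing angular velocity over time gives a change in heading, which becomes an allocentric direction once it is anchored to a known starting orientation (for instance, facing a landmark). The Python sketch below is a hypothetical, noise-free illustration of that arithmetic only; it is not a model of the head direction circuit, and the function name and units (radians, seconds) are assumptions.

```python
import math


def integrate_heading(initial_heading, angular_velocities, dt):
    """Dead-reckon an allocentric heading from egocentric angular velocity.

    initial_heading: starting heading in radians, anchored to the outside
        world (e.g. the direction of a known landmark).
    angular_velocities: turning rates in rad/s (positive = anticlockwise).
    dt: sampling interval in seconds.
    Returns every heading visited, wrapped into the range 0 to 2*pi.
    """
    heading = initial_heading % (2 * math.pi)
    headings = [heading]
    for omega in angular_velocities:
        # Euler step: how you turn (egocentric) updates where you face (allocentric).
        heading = (heading + omega * dt) % (2 * math.pi)
        headings.append(heading)
    return headings
```

For example, starting at a heading of 0 and turning anticlockwise at π/2 rad/s for one second (ten steps of 0.1 s) ends at a heading of about π/2.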

How does an internal map-like model guide actions?

We’re still trying to figure that out. We know that you can close your eyes and picture a bird’s eye view of your apartment. You can also zoom out even further and picture a bird’s eye view of your apartment and the grocery store down the street, and where they are relative to each other. That is your internal allocentric model of where your apartment is and where you get your groceries. How we take this long-held information and transform it into an action plan is not well understood, but it probably involves the retrosplenial cortex (RSP).

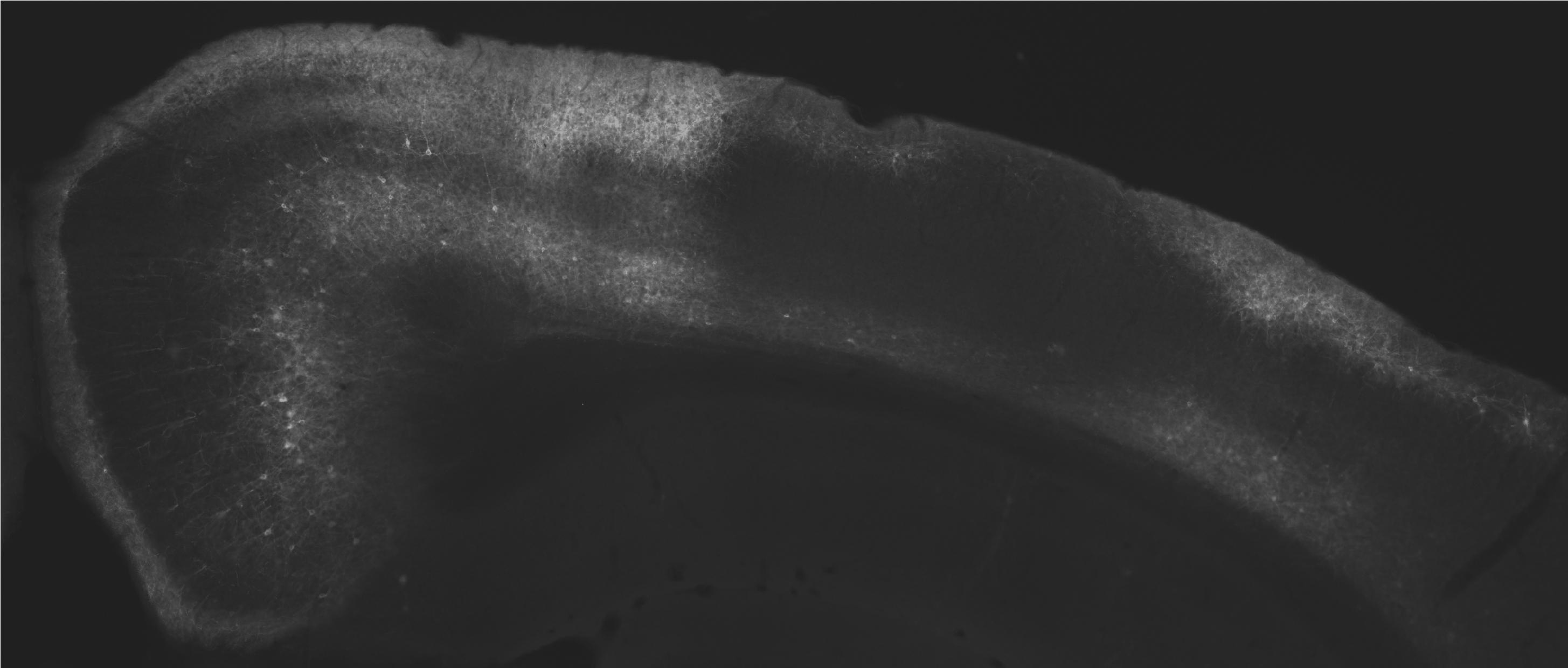

Neurons in rat retrosplenial cortex (RSP), fluorescently labelled (left), and neurons in other visual and parietal regions that project to RSP, labelled in light grey (middle and right).

How does your research explore the role of association cortices in spatial transformation?

We observe neural activity while animals perform navigation tasks. This allows us to look at how egocentric and allocentric information are combined. From there, we compare those responses to theoretical and computational work designed to actually compute the transformation, and we look at how closely what we see in the brain matches these mathematical models.

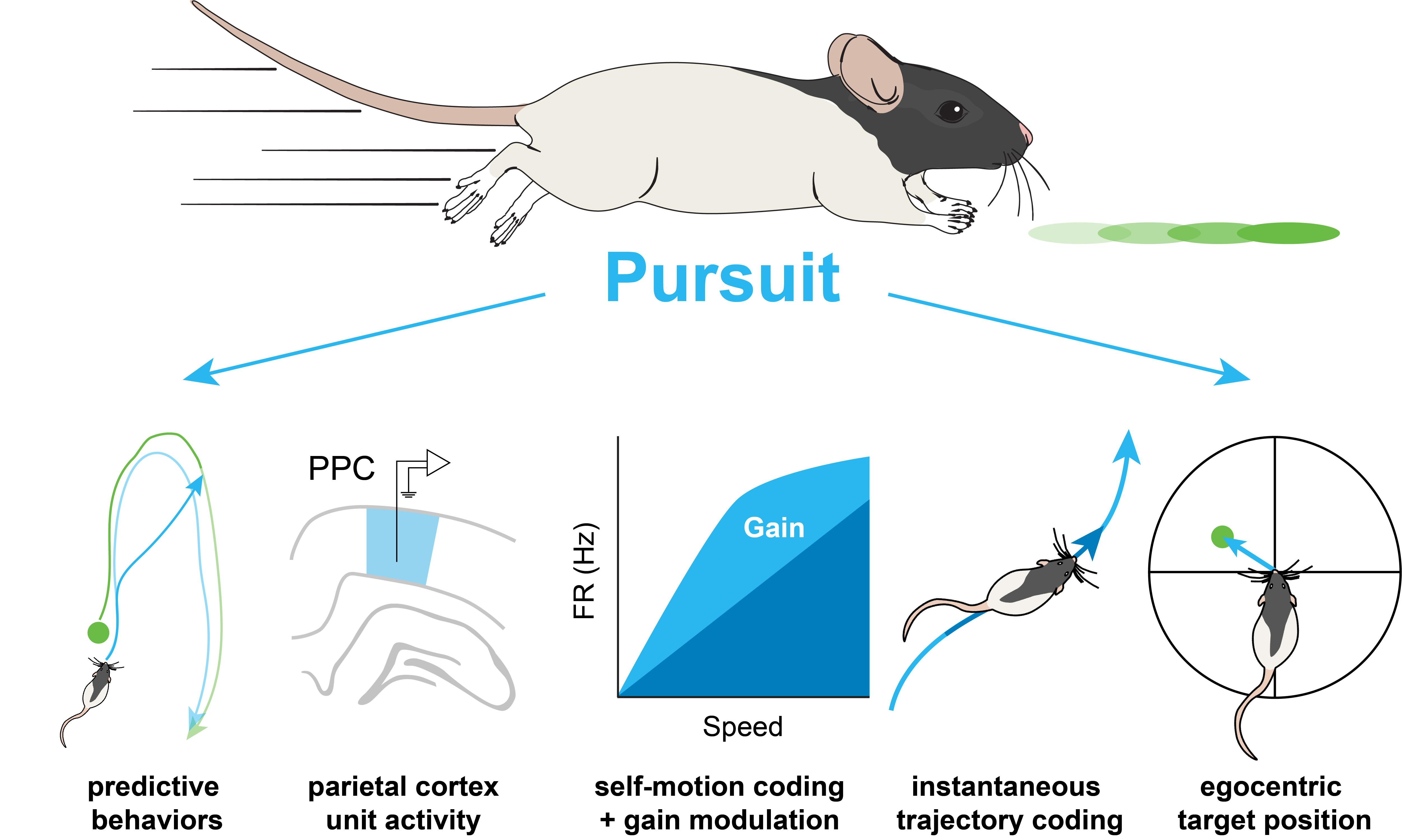

Why did you choose to study predation, or pursuit, behaviour?

I think it was related to a lot of what appears to be happening here at the SWC, which is adopting a ‘behaviour is really important’ approach. I felt we needed experiments that were more ethologically valid. We know from older work, in which researchers put rats in big outdoor environments and simply observed them, that rats exhibit chasing behaviours: they would follow the pack, or chase in the context of mating or predation. I wanted something that was navigation-based, but that also had elements more complex than walking around aimlessly eating Froot Loops (the primary way we reward our rats in the laboratory).

What have been some of your key findings so far?

The main thing that we have shown with respect to the spatial transformation is that RSP responses are at least sufficient to mediate a transformation. We haven’t shown that causally yet, which I think is a really hard problem. With respect to the pursuit behaviour, we have shown that neural circuits with activity patterns like the ones we observe in RSP show fascinating changes in their firing in response to more complex behaviours. We also see that during pursuit, rats perform complex predictive behaviours. These predictive behaviours rely on continuous egocentric-allocentric spatial transformations, and I think this task can potentially advance our understanding of this process. I think that is an exciting advance on the visual pursuit work.

In terms of transforming from egocentric to allocentric coordinates, or vice versa, what are some of the computational building blocks that are involved?

What the models predict is that there will be independent populations of neurons that are sensitive to either egocentric or allocentric space. These populations converge onto other neurons within the circuit, and the neurons that receive these convergent inputs might be sensitive to both egocentric and allocentric space simultaneously. A population of neurons with this dual sensitivity can theoretically mediate a transformation between spatial coordinate systems. It’s still only a sufficiency result: we see something indicating that the building blocks are there, but we have not shown that without them, you cannot do the transformations.
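The convergence scheme described in this answer can be sketched as a toy three-stage network. The Python below is a hypothetical illustration, not the models the interview refers to. It assumes rings of direction-tuned units with von Mises tuning, combines an egocentric-bearing population and a head direction population multiplicatively in a conjunctive layer (one common modelling choice), and has each allocentric output unit pool the conjunctive units whose preferred egocentric bearing plus head direction equals its own preferred direction. The unit count, tuning width, and function names are all assumptions.

```python
import math

N_UNITS = 36   # hypothetical number of preferred directions per population
KAPPA = 4.0    # hypothetical tuning sharpness (von Mises concentration)
PREFS = [2 * math.pi * k / N_UNITS for k in range(N_UNITS)]


def tuning(angle):
    """Rates of a ring of direction-tuned units (von Mises tuning, peak rate 1)."""
    return [math.exp(KAPPA * (math.cos(angle - p) - 1.0)) for p in PREFS]


def conjunctive_layer(ego_rates, hd_rates):
    """Units tuned jointly to one egocentric bearing and one head direction.

    Multiplicative (gain-field-like) convergence is an assumption of this sketch.
    """
    return [[e * h for h in hd_rates] for e in ego_rates]


def allocentric_readout(conj):
    """Allocentric unit k pools conjunctive units whose preferred egocentric
    bearing plus preferred head direction equals its own preferred direction."""
    out = [0.0] * N_UNITS
    for i, row in enumerate(conj):
        for j, rate in enumerate(row):
            out[(i + j) % N_UNITS] += rate
    return out


def decode(rates):
    """Population-vector estimate of the represented angle, in radians."""
    x = sum(r * math.cos(p) for r, p in zip(rates, PREFS))
    y = sum(r * math.sin(p) for r, p in zip(rates, PREFS))
    return math.atan2(y, x) % (2 * math.pi)


def ego_to_allo_bearing(ego_bearing, head_direction):
    """Egocentric bearing + head direction -> conjunctive layer -> allocentric bearing."""
    conj = conjunctive_layer(tuning(ego_bearing), tuning(head_direction))
    return decode(allocentric_readout(conj))
```

For example, a landmark 90° to the left (egocentric bearing π/2) while facing 30° (head direction π/6) decodes to an allocentric direction of about 120° (2π/3). Because the readout here is wired by hand, the sketch only shows that conjunctive units are sufficient to carry out the transformation; like the experimental result, it says nothing about whether they are necessary.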

You talked about a subpopulation of neurons that map the position of external features in egocentric coordinates or the other way around. Were you surprised to find this?

It was really exciting. But actually, Neil Burgess and his group at UCL predicted that there would be egocentric boundary cells, so I wasn’t necessarily surprised. It was something that I was hoping to see because of that theoretical modelling work.

What are some of the next pieces of the puzzle you’re excited to work on?

We need to develop more tasks that allow us to probe when an egocentric-to-allocentric transformation, or vice versa, occurs. That’s something that I’ve been giving a lot of thought to, but it’s tricky in rats because they can’t tell you, ‘Hey, something clicked for me; now I understand where this landmark is relative to other landmarks I know.’ So I’m excited to develop new behavioural tasks, and then to start looking at circuits both within and outside retrosplenial cortex, and at how they interact when that moment occurs.

About Dr Andrew Alexander

Andy Alexander completed his PhD work at the University of California, San Diego in the lab of Dr Douglas Nitz, where he characterized basal forebrain activation dynamics and investigated the role of retrosplenial cortex in spatial coding. He is currently an NIH K99 postdoctoral fellow in the laboratory of Michael Hasselmo at Boston University, where he continues to examine cortical computations relating to spatial transformations, navigation, and memory.