Exploring the function of dopamine in reward-driven action

An interview with Dr Mark Walton, University of Oxford, conducted by April Cashin-Garbutt

What happens in our brain when we seek what we crave, like an ice-cold glass of water on a hot summer’s day? In a recent SWC Seminar, Dr Mark Walton shared his work connecting striatal dopamine with reward-driven action. In this Q&A, he delves deeper into the historical backdrop of his research, the controversies around striatal dopamine’s role in signalling new information, and the implications of his findings.

How much is known about the role of dopamine in the striatum in signalling new information about potential future rewards? What controversies still exist about these dopamine signals?

Over many decades, researchers have demonstrated that surprise plays an important role in how animals learn. In the mid-1990s, it was shown that the way dopamine neurons respond to reward closely matches the reward surprise – or “prediction error” – signals that are used by reinforcement learning models and machine learning.
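The “prediction error” idea mentioned above can be illustrated with a minimal sketch. This is a simple Rescorla-Wagner-style update chosen for illustration, not the specific model used in Dr Walton's work; the function names, the learning rate, and the reward values are all assumptions.

```python
# Illustrative sketch only: a Rescorla-Wagner-style value update.
# Names and parameter values are hypothetical, not from the interview.

def prediction_error(reward, expected):
    """Surprise signal: how much better or worse the outcome was than expected."""
    return reward - expected


def update_value(expected, reward, learning_rate=0.1):
    """Nudge the expectation towards the outcome, in proportion to the error."""
    return expected + learning_rate * prediction_error(reward, expected)


def simulate(rewards, learning_rate=0.1, initial_value=0.0):
    """Run the update over a sequence of rewards; return final value and per-trial errors."""
    value = initial_value
    errors = []
    for reward in rewards:
        errors.append(prediction_error(reward, value))
        value = update_value(value, reward, learning_rate)
    return value, errors


if __name__ == "__main__":
    # A reward of 1.0 delivered on every trial becomes less surprising over time.
    value, errors = simulate([1.0] * 50, learning_rate=0.2)
    print(f"first error: {errors[0]:.3f}, last error: {errors[-1]:.5f}")
    # Omitting an expected reward gives a negative error.
    print(f"error when reward is omitted: {prediction_error(0.0, value):.3f}")
```

In this sketch the error is large when a reward is first encountered, shrinks as the reward becomes predictable, and turns negative when an expected reward fails to arrive, which is the qualitative pattern the prediction error account attributes to dopamine responses.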

We also know dopamine is dysfunctional in many neurological and psychiatric disorders and dopamine pharmacological agents serve as one of the major treatment options in these conditions. However, researchers have found it hard to bridge between this very precise understanding of dopamine as a signal for updating values and the florid problems observed in these disorders.

For example, one classic condition associated with a loss of dopamine function is Parkinson’s disease. We usually think of Parkinson’s as primarily a movement disorder, which sounds entirely different from what you’d expect to observe in someone who had just lost an error signal telling them whether the rewards got better. There are ways to draw a connection between what actually happens when you disrupt dopamine – either experimentally or in disease models – and this beautiful theory of dopamine function as a prediction error signal, but there is a lot that is still unknown and hotly debated.

Why is it challenging to determine the precise content and function of these dopamine signals?

Until recently, we didn’t have good techniques to study dopamine at the kind of resolution we needed. People used electrophysiology to record from midbrain dopamine neurons, but the problem with dopamine is that there are many things that regulate the amount of dopamine that is released in the terminal regions. This means you can’t necessarily just go from seeing the firing of midbrain dopamine neurons to knowing what’s happening in brain regions where it’s being released. And then even after it has been released, there are lots of processes that affect the timescales at which dopamine acts.

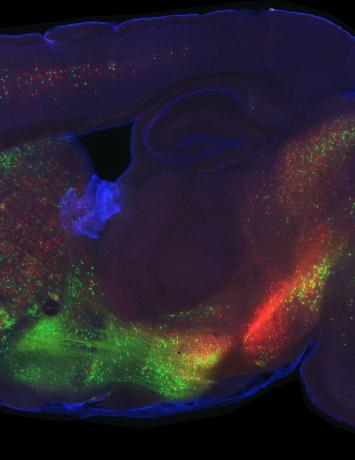

However, over the last 20 years, there have been several advances. Firstly, a technique called fast-scan cyclic voltammetry (FSCV) was developed so it could be used in behaving animals performing cognitive tasks. And more recently, there has been the emergence of genetically encoded neurotransmitter sensors – these are modified dopamine receptors that fluoresce, or light up, when dopamine binds to them. You can measure that change in fluorescence as a proxy for the amount of dopamine in the brain at that point.

This has revolutionised what we can do because we can track the moment-to-moment changes in dopamine in the regions where it is being released. And with techniques like optogenetics, we can now also manipulate dopamine on a moment-by-moment scale. We are really on the cusp of discovering a lot.

How does your research try to connect striatal dopamine with reward-driven action?

One of the things we tried to do is to think about the different contexts that an animal might encounter when trying to get reward. For example, we have been interested in whether dopamine signals are influenced by how much effort you need to put in to get a reward, or whether you just care about how good the reward is in the end, as we often have to pay costs to get rewards in the future.

More recently, we have been thinking about situations where we need to engage self-control. We know that all animals are set up to approach a good reward – that is a very stamped-in system. But sometimes that’s not the right thing to do – so how do we have that kind of instrumental control?

You could imagine a system where dopamine constantly signals that reward is available and motivates an approach response, with other pathways then inhibiting that action when it is inappropriate. On the other hand, you could have a system where dopamine is actually damped down in situations where you need to engage self-control, making it less likely that you will approach a reward that you should not.

Can the dopamine reward create new actions or simply recombine elemental actions stored in the striatum? If the latter, how do these elemental actions come to be?

I think what dopamine is doing is evaluating how good an action chosen elsewhere would be. One of the things we see a lot in our data is that if an animal is making a choice between two options, dopamine will report the value of the thing the animal is about to do. We sometimes jokingly call this ‘clairvoyant dopamine’.

Even when an animal chooses what appears to be the worse option, for example approaching a lower-value option for whatever reason, dopamine signals that it is indeed the less good response. This may be involved in subsequent energisation of that action.

It’s almost like a part of the brain says, ‘this is the thing that we should do,’ and dopamine then provides a signal that indicates, ‘don’t put too much effort into that action because it’s not going to get you that much reward.’ That provides a second system to regulate whether, when, and how fast to act on the selected choice.

What have been your key findings so far?

One key finding was that dopamine signals are strongly shaped not just by prediction error-like updates, but also by the action that may need to take place in order to achieve what is desired. That was one of the first findings that brought together the reward prediction error ideas and movement ideas, which had previously been seen as being quite separate theories.

In addition, we have shown that these dopamine prediction error signals are cleverer than we thought. Originally, the standard idea was that they just responded based on what you had directly experienced. But what we, and other groups, have shown is that the dopamine prediction error signals can also use predictions based on inferences derived from knowledge about the world – the structure of how the world works, for example. However, an interesting complication is that we have also shown that just because dopamine is reporting those signals, it does not mean that they are always involved in updating our behaviour. So, what their role is in these situations remains to be determined.

What implications do these findings have?

The first finding is quite important in that it starts to bring together these ideas about dopamine, reward and impulse control – it shows dopamine is not just concerned with the value of something, but actually what you have to do in order to get the reward.

The second finding suggests that during flexible decision-making, dopamine prediction errors might be playing a slightly different role than we originally thought. One of the things that we are thinking about is the possibility that the signals are giving a running commentary on whether you should stay engaged with something. So rather than simply providing a signal to determine whether you should select option X or option Y, they instead help determine if a current strategy is achieving its goal. In other words, it is constantly updating the value of whether or not it is worth persisting with the course of action that you are already on.

What’s the next piece of the puzzle your research is focusing on?

One is to move to slightly more naturalistic paradigms. People in my lab have developed large maze environments, which in principle means that once we understand how dopamine responds in a simpler task setting, we could start to build that understanding up to see how it translates as animals make decisions in more complex spatial environments.

We are also developing home cage testing, which means we will be able to see how a foraging animal allocates its time and behaviour across longer time periods. The animals live next to an operant box, and can visit the box to perform tasks, then come back to the home cage, whenever they choose.

At a more neuroscientific level, we’ve known for a long time that dopamine is regulated by, and itself regulates, many other neurotransmitters. We want to now think about how dopamine and other neuromodulators like acetylcholine and serotonin interact in order to shape our behaviour. For instance, with serotonin, people have proposed that it provides a signal for how uncertain you are in your current world. That could be quite useful for inferring whether or not you should learn from dopaminergic reward prediction errors. With acetylcholine, people have suggested it could be useful to gate movement or learning, either by co-activation with dopamine or by directly influencing dopamine release itself.

About Dr Mark Walton

Dr Mark Walton is a Wellcome Senior Research Fellow and Associate Professor at the Department of Experimental Psychology, University of Oxford. He did his DPhil in Neuroscience at Oxford, followed by postdoctoral work at Oxford and, as a Wellcome Advanced Training Fellow, at the University of Washington, Seattle, before establishing his lab back in Oxford in 2010. Research in his group is focused on understanding the neural mechanisms shaping motivation and adaptive reward seeking, with a particular interest in how neurotransmitters such as dopamine regulate these processes in rodents on a moment-by-moment timescale. To do this, they use in vivo techniques that enable fine-scale measurement and manipulation of neurochemistry and neural circuits, integrated with rich behavioural tasks that can tease apart animals’ behavioural strategies and motivations.