Neural representations of self-motion

An interview with Dr. Kathleen Cullen, Professor in Biomedical Engineering, Johns Hopkins University and co-director of the Johns Hopkins Center for Hearing and Balance, conducted by April Cashin-Garbutt, MA (Cantab)

How is self-motion distinct from other types of motion we experience?

Self-motion is motion we experience as we actually navigate and move through our world. This is in contrast to the visual motion that we sense and experience while watching a tennis match.

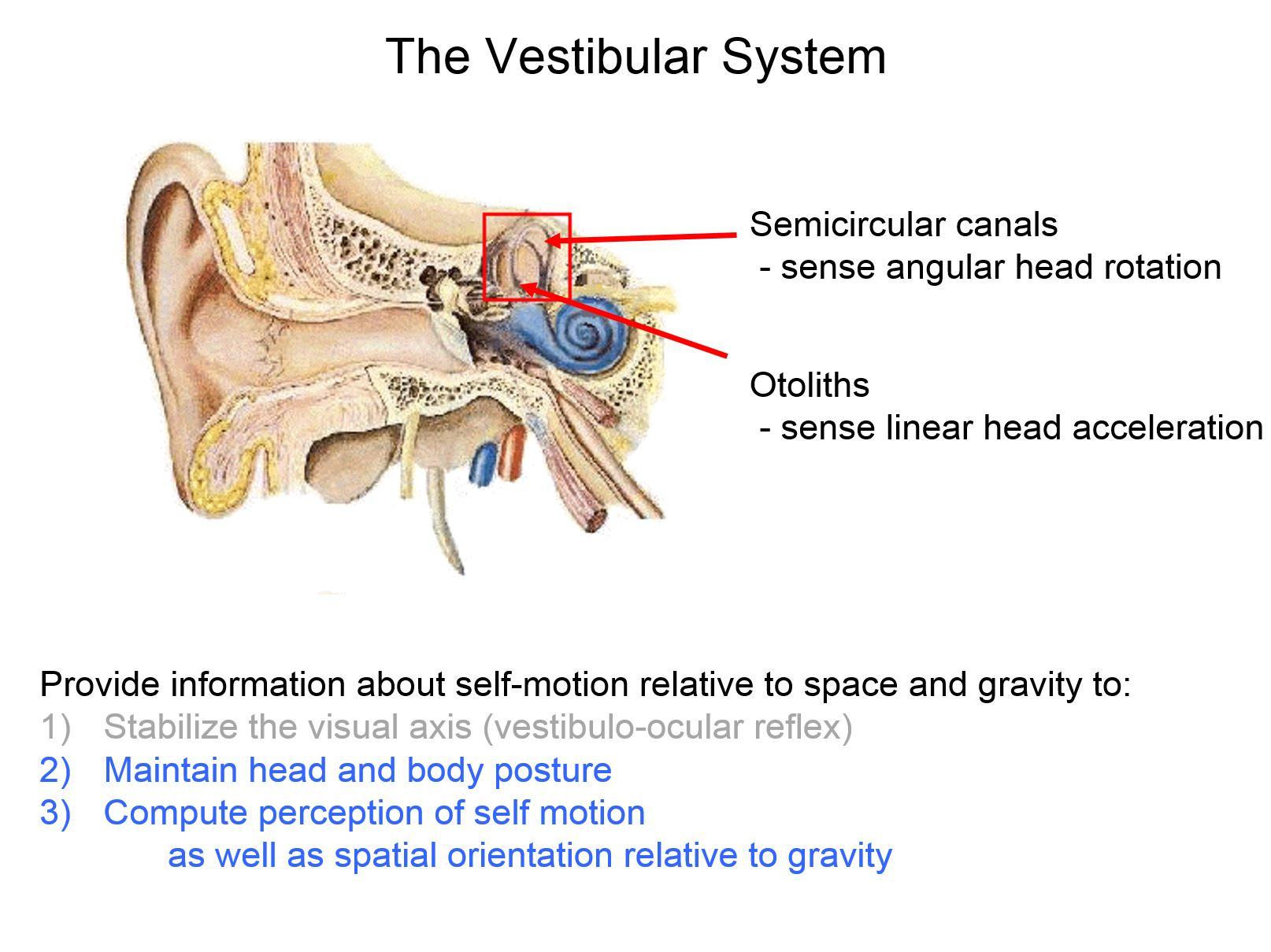

Our brain computes estimates of our self-motion in everyday life to ensure accurate motor control and perception. The information provided by the vestibular system is essential to this computation. The vestibular system detects the rotational velocity and linear acceleration of our heads relative to space.

During everyday behaviours, our perception of self-motion depends on the integration of vestibular information with other sensory cues, including visual and proprioceptive information.

However, most studies of navigation in the field of neuroscience have used an experimental design in which the animal model or person does not actually move through the environment, even though that is what navigation involves.

Experiments typically use a virtual reality setup, in which the subject views visual motion (optic flow) that is consistent with self-motion but the subject does not actually move through space. This means that additional sensory cues that would normally be present during actual self-motion, for example from the vestibular and proprioceptive systems, are not present.

My group’s research program is focused on understanding how the brain normally integrates inputs from multiple modalities in order to compute an accurate representation of how we are moving through space.

What first sparked your interest in the neural representations of self-motion?

As an undergraduate, I initially trained as an electrical engineer. I became interested in the vestibular system because it is a great model system in which computational methods, for instance linear systems approaches, have provided fundamental insights into how the brain encodes and processes sensory information.

I then began my PhD at the time when researchers were just beginning to really explore how single neurons in the brain react to inputs from the senses when making voluntary movements.

Much of sensory physiology had focused (and still focuses) on quantifying the activity of neurons while animals passively experience sensory stimulation. As I was completing my PhD, it became very evident to me that this was artificial, because in everyday life our sensory systems are normally activated as a consequence of our own movements, as well as by externally applied stimuli.

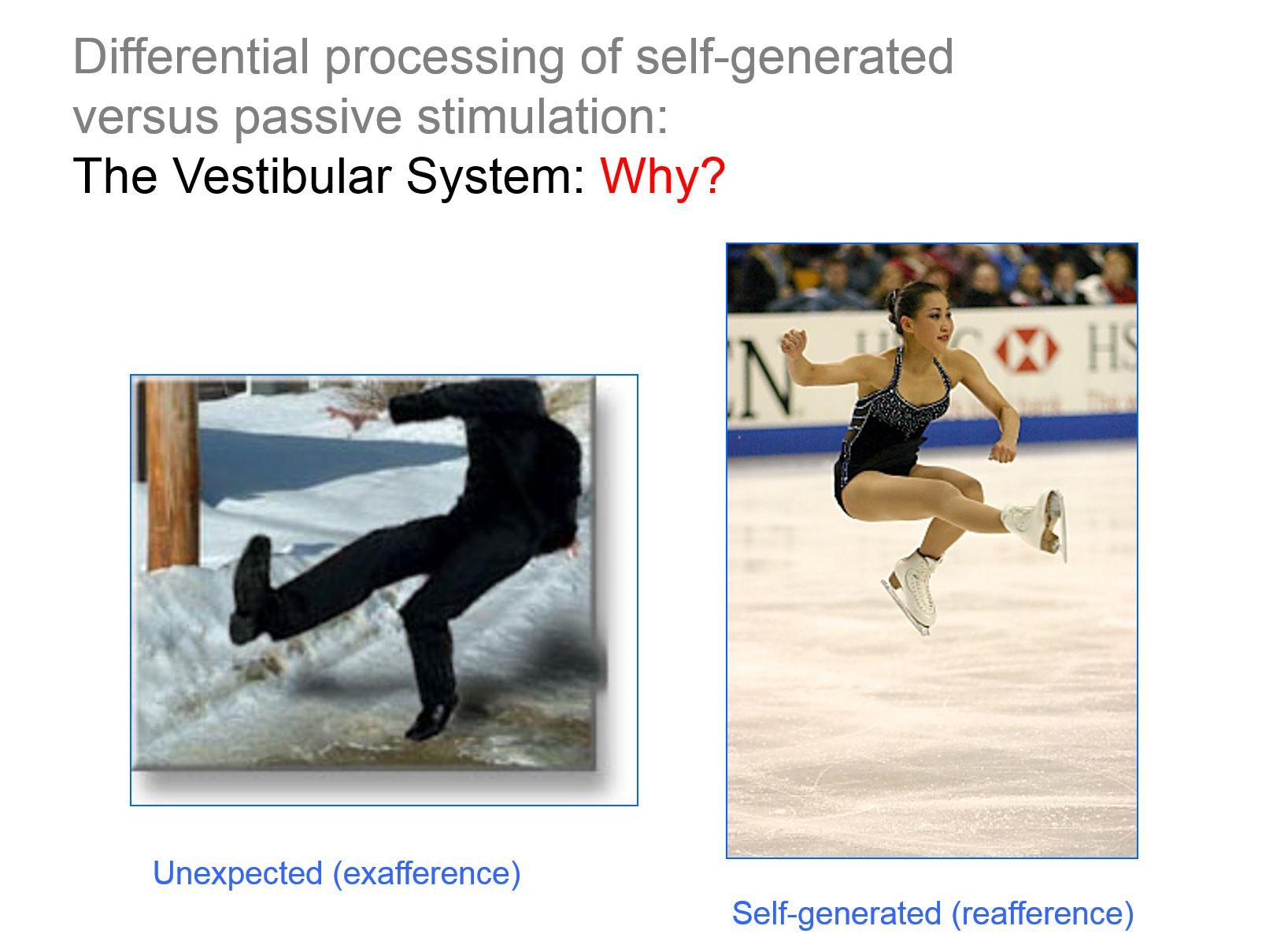

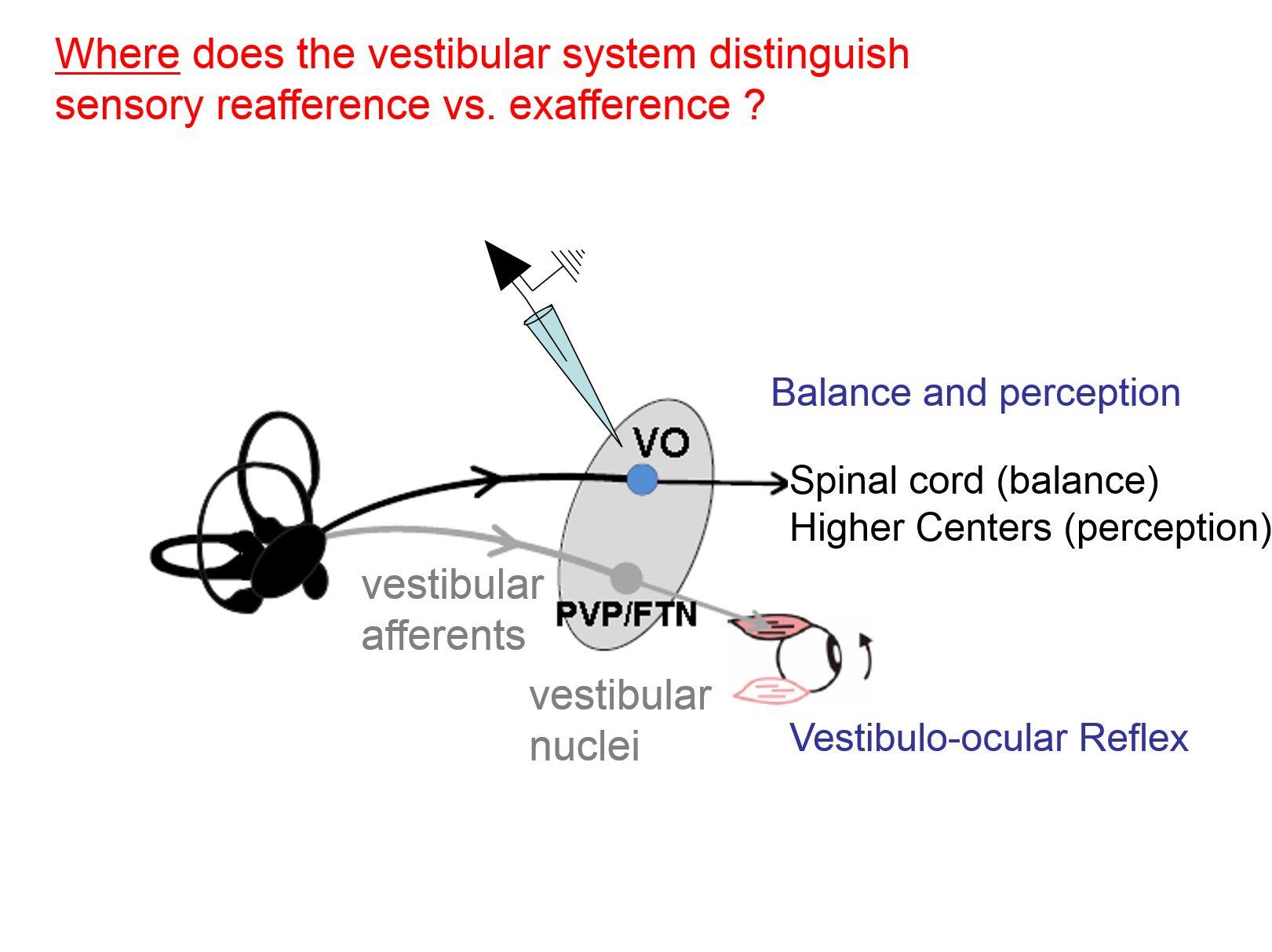

My group’s research program has since focused on how the brain integrates motor-related information with sensory inputs to distinguish sensory reafference and exafference. Reafferent sensory information is that which is a consequence of our own active movements, whereas exafference is externally applied.

In particular, my work has established how the ability to make this distinction in the vestibular system is essential for ensuring accurate motor and perceptual stability. My group has shown that at the first stage of central processing, vestibular information is processed in a behaviourally dependent manner.

How has your laboratory’s research addressed the question of how the brain computes accurate estimates of our self-motion relative to the world?

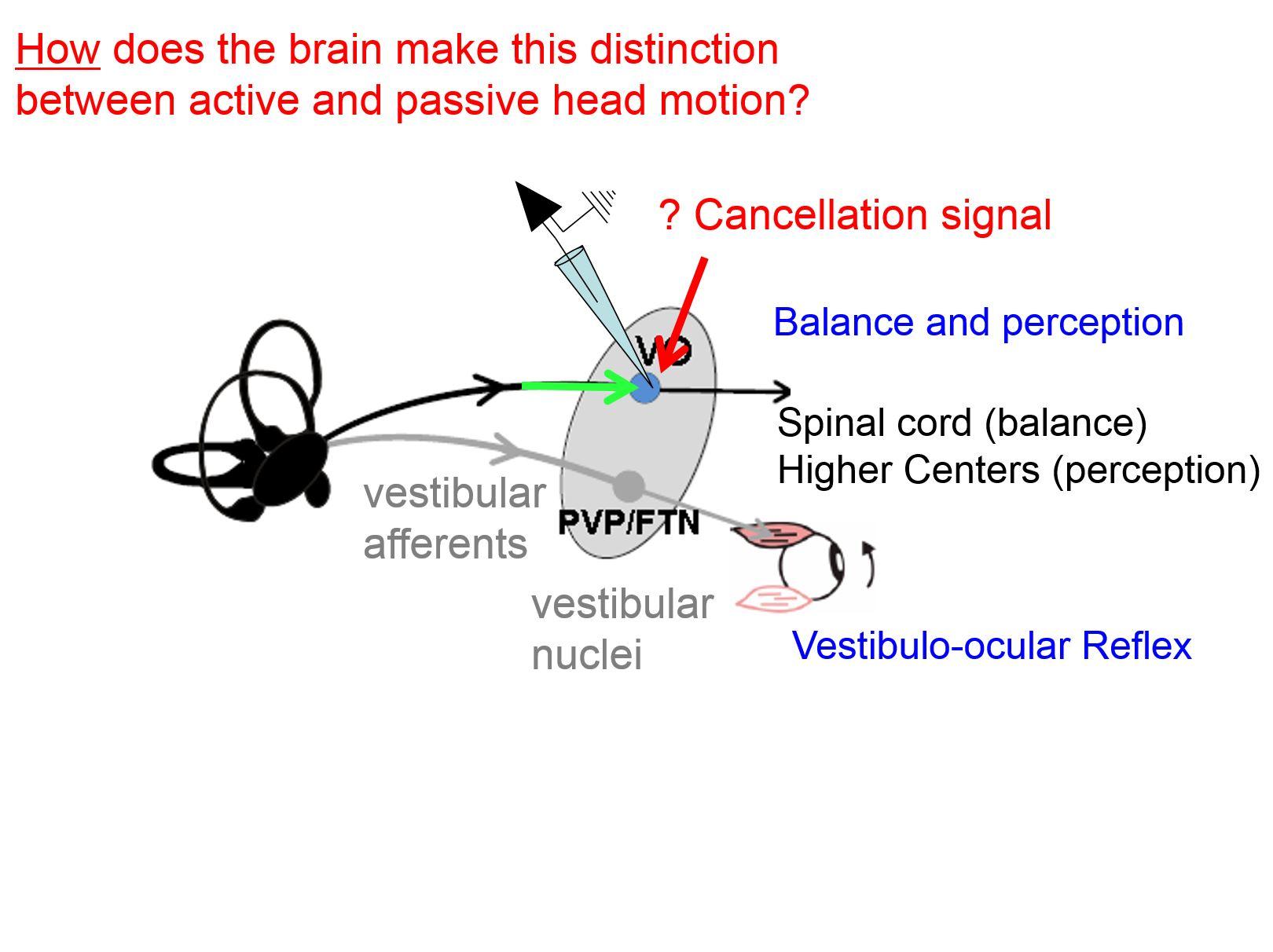

Previous behavioural and theoretical studies had suggested that, during self-motion, the intrinsic delays of feedback from sensory signals are too long to ensure accurate behaviour. Instead, it had been proposed that the brain constructs an internal model of the sensory inflow it expects and compares this estimate with the actual sensory inflow it experiences. The difference between the two is called a sensory prediction error signal.

For example, imagine an Olympic ice skater doing a triple Lutz jump during a competition. The skater has practised and executed this jump hundreds if not thousands of times before. As a result, the brain has built an internal model of the sensory inflow they should be getting from the vestibular system.

The idea is that the brain computes a model of the expected sensory inflow through three-dimensional space, which it then compares to the actual input. A small difference or error would occur if the skater lost their balance or was in a slightly different motor state than anticipated when initiating the jump. This computed error in turn can drive changes in motor programming to help maintain balance.
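The comparison described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that both the expected and the actual sensory inflow are head angular velocity traces in degrees per second; the function name `sensory_prediction_error` and all of the numbers are hypothetical and are not taken from the recordings discussed in this interview.

```python
import numpy as np

def sensory_prediction_error(actual, expected):
    """Return actual minus expected sensory inflow, sample by sample."""
    return np.asarray(actual, dtype=float) - np.asarray(expected, dtype=float)

# Hypothetical head angular velocity (deg/s) over five time steps.
expected = np.array([0.0, 50.0, 100.0, 50.0, 0.0])  # internal model's prediction

# Case 1: the movement unfolds exactly as planned.
active = expected.copy()

# Case 2: an unexpected perturbation adds 20 deg/s midway through.
perturbed = expected + np.array([0.0, 0.0, 20.0, 20.0, 0.0])

error_active = sensory_prediction_error(active, expected)
error_perturbed = sensory_prediction_error(perturbed, expected)

print("error when movement matches prediction:", error_active)
print("error when movement is perturbed:", error_perturbed)
```

In this toy setting the error is zero whenever the movement matches the prediction and is non-zero only for the unexpected component, which is the part that would be available to drive a corrective response.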

While this theoretical proposal is interesting, whether and how the brain actually performs this computation was not known. So my group recorded the responses of individual neurons during active and passive self-motion to understand how the brain keeps track, in real time, of how we are moving through space. Our recordings from cerebellar output neurons reveal that the brain does indeed compute sensory prediction errors in order to allow us to maintain balance.

We have further shown that this very elegant computation effectively suppresses actively generated - also called reafferent - vestibular input at the first stage of central processing. It is one of the clearest examples of reafferent suppression known in the brain.

Why is it beneficial to be able to suppress vestibular signals during active movements?

My group’s work has shown that during active self-motion, the brain possesses the remarkable ability to suppress, almost instantaneously, vestibular input coming in from the 8th nerve when it is actively generated. This suppression occurs at the first central synapse.

The fundamental mechanism is important both for accurate motor control and perceptual stability. First, it is essential to suppress or gate out the efficacy of vestibulo-spinal reflexes during active movements. Consider the situation where an individual is slipping on ice and has lost their balance. The unexpected vestibular input will rapidly activate vestibulo-spinal reflex pathways that are vital for generating corrective body movements to help this person keep their balance.

On the other hand, consider another situation where the same individual experiences comparable vestibular sensory input, but it is instead self-generated, for example when returning a challenging tennis serve. In this second example, the corrective movements produced by vestibulo-spinal reflex pathways could be counterproductive since they would counteract the intended movement. Thus, it is essential to suppress the modulation of vestibulo-spinal pathways during active movements.

Second, the ability to distinguish between sensory exafference and reafference is a hallmark of higher-level perceptual and cognitive processing. Consider a situation where an individual experiences an earthquake. The ability to instantaneously determine that the resultant and salient vestibular stimulation is not self-generated could be essential for survival.

Our recent recordings from neurons in the ventral thalamus have in fact shown that thalamocortical vestibular pathways also selectively encode such unexpected self-motion.

Why did you compare the natural self-motion signals experienced by mice, monkeys and humans? What did this study reveal?

To start, in 2014 we quantified the self-motion inputs experienced by human subjects during typical everyday activities. We discovered that the structure of active motor control, combined with body biomechanics, shapes the statistics of natural vestibular stimuli during active self-motion. As a result, natural vestibular stimuli do not follow a power law. This is different from what has been reported for other sensory systems.

We also discovered that when scientists study neural coding in the vestibular system, they have only looked at a phenomenally narrow range of movements, an order of magnitude less in amplitude than the range we normally experience in our daily lives.

Because my group is interested in understanding the neural coding strategies that are used to encode vestibular information, we then studied natural self-motion in two important animal models, monkeys and mice, in comparison to humans. We found similarities across all three species, with the natural self-motion experienced by humans most closely resembling that experienced by monkeys.

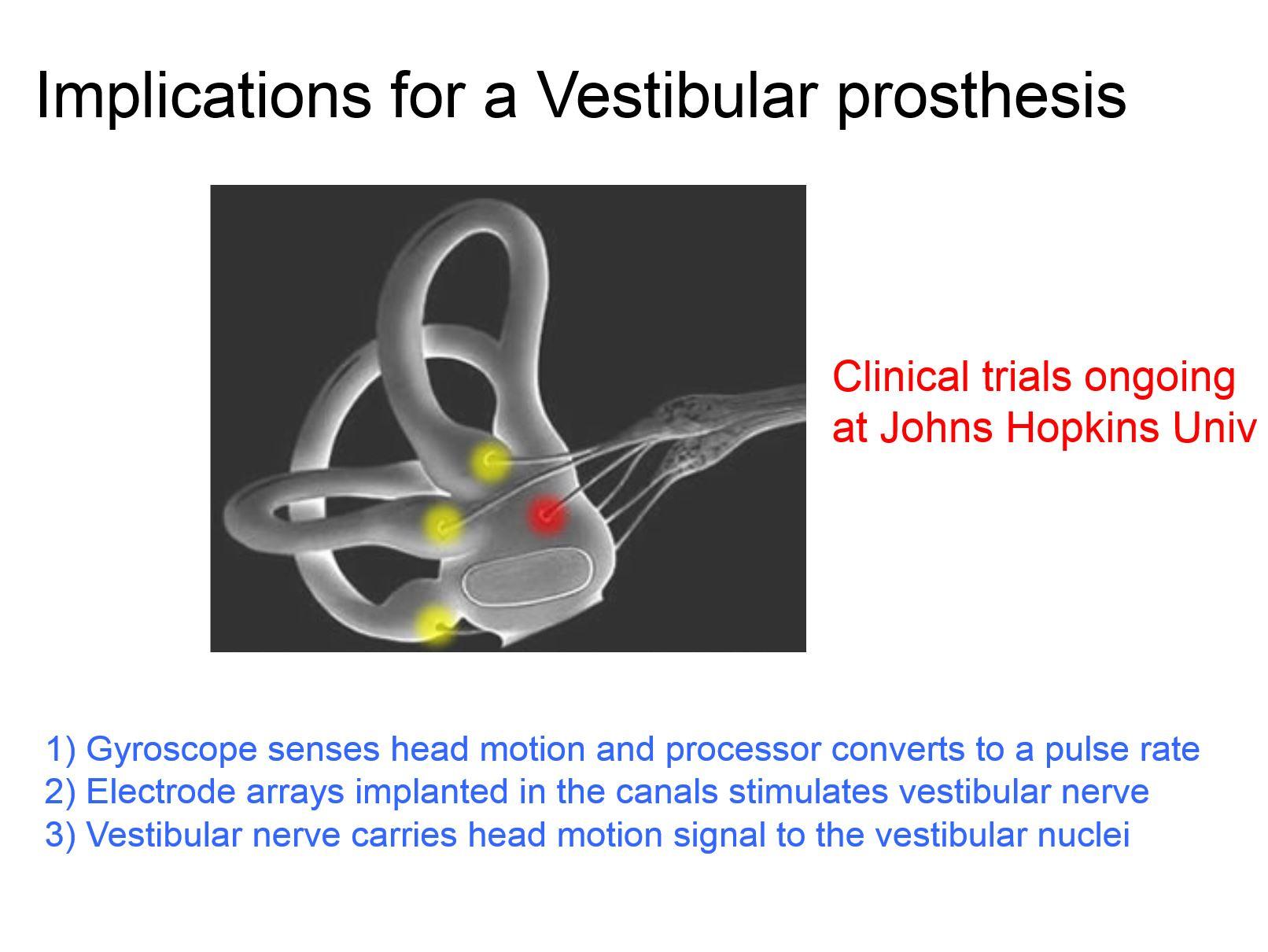

The monkey is an important basic research and clinical model due to its evolutionary proximity to humans. The similarity in natural self-motion experienced by monkeys and humans is an advantage for advancing translational approaches to restore function in patients with profound bilateral vestibular loss. For example, a vestibular prosthetic is already in clinical trials at Hopkins, which maps motion to nerve stimulation using our linear and non-linear cascade model that is based on data from monkeys. Our ongoing work in monkeys is focused on understanding how to improve the prosthetic so that vestibular pathways more naturally encode this artificial input in patients.

Mice have also become an important model for probing the functional circuitry of vestibular pathways. For instance, we can now use techniques like optogenetics to perturb vestibular circuits in ways that are not currently possible in monkeys. Most investigators who work in the field of navigation work in mice, so we used the mouse model to demonstrate that the principles we saw in our monkey model were not monkey-specific and that the same general principles applied to the mouse.

What implications does your research have for perception and action?

My group’s research has shown how vestibular information is gated in a behaviourally dependent manner during everyday activities.

The cerebellum computes expected self-motion within milliseconds so that the brain can send an appropriate signal to the vestibular nuclei and spinal cord to selectively cancel the sensory consequences of expected active self-motion. This then allows the pathways to rapidly adjust our balance to unexpected passive self-motion.

We have also shown that the output of the cerebellum reveals rapid updating of an internal model as unexpected sensory inputs become expected. Specifically, the cerebellum learns to expect modified vestibular inputs when a new relationship is established between motor output and the resultant proprioceptive feedback.

Any persistent difference between expected and actual vestibular input during an active movement will provide an error signal that drives adaptive changes to our motor planning pathways. This ability is essential for learning a new motor skill or monitoring and calibrating ongoing behaviours.
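One way to picture how a persistent error could update an internal model is the toy sketch below. It is only an illustration under strong assumptions: the internal model is reduced to a single gain that maps a motor command onto expected vestibular input, and the gain is adjusted with a simple error-driven (least-mean-squares style) rule. The function `adapt_internal_model`, the learning rate, and the gains are all hypothetical and are not claimed to be the mechanism used by the cerebellum.

```python
def adapt_internal_model(commands, true_gain, initial_gain=1.0, learning_rate=0.1):
    """Update a one-parameter internal model from repeated prediction errors.

    commands      -- sequence of motor commands (arbitrary units)
    true_gain     -- hypothetical real relationship: actual input = true_gain * command
    initial_gain  -- the model's starting belief about that relationship
    learning_rate -- step size of the error-driven update (assumed value)
    """
    gain = initial_gain
    errors = []
    for command in commands:
        actual = true_gain * command   # vestibular input that really occurs
        expected = gain * command      # input predicted by the internal model
        error = actual - expected      # sensory prediction error
        errors.append(error)
        gain += learning_rate * error * command  # reduce future error
    return gain, errors

# The motor-to-sensory relationship suddenly changes from 1.0 to 0.5,
# and the same movement is then repeated 50 times.
final_gain, errors = adapt_internal_model([1.0] * 50, true_gain=0.5)

print("first error:", errors[0])
print("last error:", errors[-1])
print("learned gain:", final_gain)
```

In this sketch the prediction error is large on the first movements after the relationship changes and shrinks as the gain is recalibrated, so an input that was initially unexpected gradually becomes expected.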

In addition, we have shown that the mechanism underlying the distinction between active and passive vestibular stimulation also ensures perceptual stability. We have recently reported that thalamocortical vestibular pathways also selectively encode unexpected self-motion. In this context, our ability to interpret self-motion stimuli as ‘self-generated’ rather than ‘externally generated’ relates to our subjective awareness of initiating and controlling our own volitional actions in the world – our sense of agency.

What is the next piece of the puzzle your research is going to focus on?

To date, my group’s research has demonstrated that both descending vestibulo-spinal and ascending thalamocortical pathways selectively encode unexpected passive self-motion.

This is an elegant computation that occurs within milliseconds. Your brain's model of expected motion is compared with what actually happens and, within milliseconds, the difference is computed and an error signal is sent to make compensatory movements. The next question is, how does this happen?

Our work is now focused on the fundamental question of how the brain computes a selective estimate of unexpected passive motion within milliseconds so that we can send an appropriate signal to the spinal cord to rapidly adjust our balance, learn new motor skills, and ensure perceptual stability.

In addition, our group is interested in elucidating how the brain combines sensory inflow during real navigation. The prevailing view is that directional tuning of head direction cells is achieved by integrating angular head velocity information from the vestibular nuclei; but what my group's research has clearly shown is that the vestibular system is not just sending out a MEMS-like angular velocity signal.

This then establishes that the brain does not simply rely on a vestibular input to build a head direction cell representation, but instead most likely integrates motor information with sensory information - across multiple modalities. We are working to better understand this computation.
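For reference, the prevailing view mentioned above amounts to integrating an angular velocity signal over time. The sketch below shows that pure integration under illustrative assumptions: a constant 90 deg/s turn sampled every 10 ms, and a hypothetical 1 deg/s sensor bias. The function `integrate_head_direction` and all values are invented for the example; it depicts the simple scheme that the text argues is incomplete, not the computation the brain is proposed to perform.

```python
import numpy as np

def integrate_head_direction(angular_velocity_deg_s, dt, initial_heading_deg=0.0):
    """Estimate heading (deg, wrapped to [0, 360)) by summing velocity * dt."""
    increments = np.asarray(angular_velocity_deg_s, dtype=float) * dt
    heading = initial_heading_deg + np.cumsum(increments)
    return np.mod(heading, 360.0)

dt = 0.01                               # 10 ms sampling interval (assumed)
true_velocity = np.full(100, 90.0)      # 90 deg/s for 1 s: a quarter turn
biased_velocity = true_velocity + 1.0   # same turn with a 1 deg/s sensor bias

heading_true = integrate_head_direction(true_velocity, dt)
heading_biased = integrate_head_direction(biased_velocity, dt)

# Drift is the heading error that accumulates from the bias alone.
drift = heading_biased[-1] - heading_true[-1]
print("final heading without bias:", heading_true[-1])
print("accumulated drift after 1 s:", drift)
```

Because integration accumulates any error in the velocity signal, a scheme that relies on a single input drifts over time, which is one intuition for why combining motor information with several sensory modalities could be useful.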

About Professor Kathleen Cullen

Dr. Cullen’s area of interest is systems and computational neuroscience, specializing in neural mechanisms responsible for the sense of balance and spatial orientation, the control of eye movements, and motor learning.

Dr. Cullen received a bachelor’s degree in Biomedical Engineering and Neuroscience from Brown University and a PhD in Neuroscience from the University of Chicago. After doctoral studies, Dr. Cullen was a Fellow at the Montreal Neurological Institute where she worked in the Department of Neurology and Neurosurgery.

In 1994, Dr. Cullen became an assistant professor in the Department of Physiology at McGill University, with appointments in Biomedical Engineering, Neuroscience, and Otolaryngology. In 2002, Cullen was appointed a William Dawson Chair in recognition of her work in Systems Neuroscience and Neural Engineering and served as Director of McGill’s Aerospace Medical Research Unit.

In 2016, Dr. Cullen moved to Johns Hopkins University, where she is now a Professor in Biomedical Engineering, and co-director of the Johns Hopkins Center for Hearing and Balance. She also holds joint appointments in the Departments of Neuroscience and in Otolaryngology – Head and Neck Surgery.

In addition to her research activities, Dr. Cullen currently serves as the Program Chair and Vice President of the Society for the Neural Control of Movement and is a member of the steering committee of the Kavli Neuroscience Discovery Institute at Johns Hopkins.

Dr. Cullen has been an active member of the Scientific Advisory Board of the National Space Biomedical Research Institute, which works with NASA to identify health risks in extended space flight.

She has also served as a reviewing editor on numerous Editorial Boards including the Journal of Neuroscience, Neuroscience, the Journal of Neurophysiology, and the Journal of Research in Otolaryngology.

Dr. Cullen has received awards including the Hallpike-Nylen medal of the Barany Society for “outstanding contributions to basic vestibular science” and the Sarrazin Award Lectureship from the Canadian Physiological Society (CPS), and was elected Chair of the Gordon Research Conference on eye movement system biology.

Cullen has served as Communications Lead for the Brain@McGill and was Chair of the 2016 Canadian Association for Neuroscience meeting. She has published over 120 articles and book chapters and has given over 150 national and international invited lectures.