Reflecting causality in synaptic changes

An interview with Professor Alison Barth, Carnegie Mellon University, conducted by Hyewon Kim

You step onto the tube on your first day in London and someone yells in your direction. Are you not supposed to be on the train, perhaps because it’s the last stop? Are you not allowed to have a beverage on the tube? Did you drop something on the way? Your brain goes through all possible associations between these events until an answer is found. In an SWC Seminar, Professor Alison Barth shared her work exploring synaptic changes in the brain during association learning. In this Q&A, she touches on how she came to research the topic, her most surprising findings, methodology, and more.

What was already known about the representation of causality and association learning in the brain?

There is a big gap between what we know at the biochemical level of synapses and what happens in the brain. People have studied how individual sets of synapses are modified by short-term stimulation, and we know all sorts of things about those pathways. Some of that research is almost 50 years old now – we have been able to establish ex vivo that synapses can be changed by patterns of activity. But figuring out whether those phenomena are happening in the brain has been more complicated. In multiple brain areas and training paradigms, we have evidence that some of those biochemical phenomena are also happening in vivo. So, the next step is to figure out when they’re happening, what the sequence of changes is amongst various types of connections in different brain areas, and how these changes alter brain function.

Twenty years ago, it was absolutely thrilling to see that some of the changes you would have predicted, based on the biochemistry or small-scale electrophysiology, were actually happening in the brain! But those kinds of experiments weren’t very comprehensive. You would subject the animal to some sort of training, experience, or imbalance in sensory input, then see that the synapses changed. It was a thrill to relate some of the biochemical in vitro findings to what happens in vivo. But as an explanation for how we learn, that is still limited.

There’s an apocryphal story about a drunk guy looking for his keys under the streetlamp when a neighbour asks what he is doing. He says he is looking for his keys. The neighbour then asks if he lost them there. He replies, ‘No, but it’s dark everywhere else.’ Similarly, we know that some of the biochemistry can be implemented in vivo. But what we don’t understand is this: In the context of an animal that is naturally behaving and learning, which are the pathways, connections, and types of neurons that are engaged? Almost certainly, these simple mechanisms will be involved, but there is such a tapestry of different types of neurons and connections that we cannot say such changes are happening across all neurons. A subset of synapses likely change first, enabling or triggering changes in other synapses. This probably works as an ecosystem of dynamic, specific players.

My work tries to build that understanding from the small components to how those phenomena might be engaged in the living animal. It’s like modern-day biochemistry. Biochemists, dealing with molecules, knew those molecules must somehow be involved. I, dealing with synapses, see that some of them are changing. How can we understand how in vivo experience – the interesting things that happen in the world – drives synaptic change? And how do those synaptic changes enable us to make a causal association between events?

What made you become interested in researching this topic?

I find memory really interesting. I have a terrible memory, but I have figured out ways to compensate. It’s fascinating to see all the different ways I can work around things. For example, since I have a bad memory for names, I can turn names into places, as I have a better memory for places. Or, if you put what you know into a web or framework, it can help you see relationships between things that would be really hard to see if you had to memorise them as isolated components.

One of the things I love about memory research is that there is a beautiful interplay between theory, experimental data, and quantitative modelling or computational analysis. It has so many different levels of analysis, you could never get bored!

How does your research try to understand what an animal is realising about the stimulus and reward? Why did you employ high-throughput sensory-association training?

One of the things that we know about learning, especially in its early stages, is that it is highly variable. Some animals will learn something in a snap – in a few hundred trials or even fewer. Other animals might take days and days. So, it’s very difficult to average across all of these. One of the nice things about being able to train lots of animals with ease using high-throughput training is that you can make very precise measurements and then start to see which of them are correlated with the acquisition of a memory or an association.

For the kinds of detailed measurements we’re making, it’s important not to have just one or two animals and then draw major conclusions from these. I suspect that just as I’ve always had easier strategies for learning something that my teachers may have wanted me to learn in another way, animals are using very different strategies from what we expect – especially for simple tasks. So, if we look at a small number of animals, we run the risk of generalising results that are quite idiosyncratic.

Why did you focus on learning-dependent changes in the cortical column?

People have seen that synapses can be altered in isolation. Excitatory glutamatergic synapses, particularly those between glutamatergic neurons, have probably been the best studied of all types of synapses. Until about 10 years ago, it was not well appreciated that there was such a diversity of different types of both excitatory and inhibitory neurons. We suspected that they were different based on how they looked, but we couldn’t tell whether it was a continuum of difference or whether these neurons had different jobs to do.

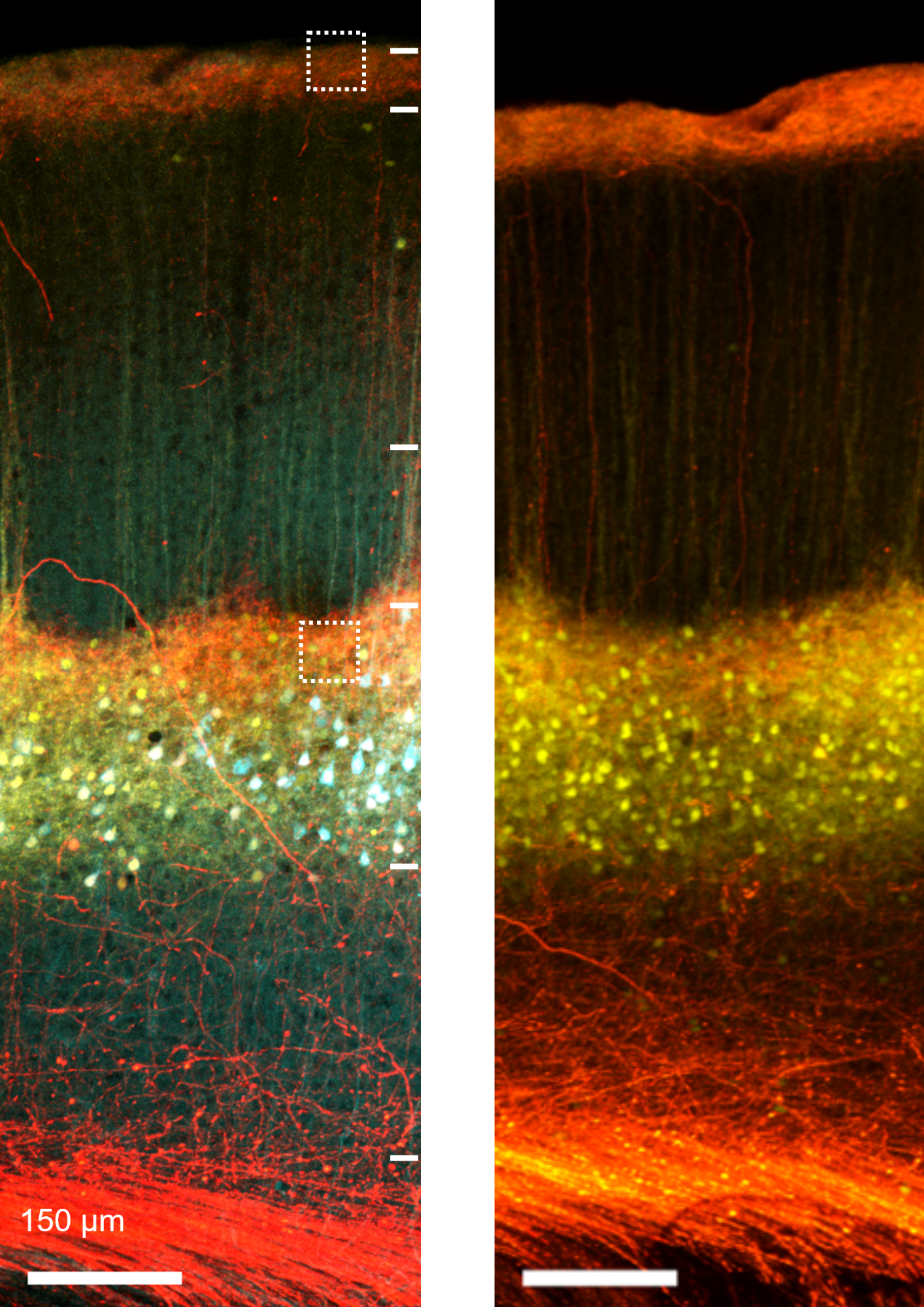

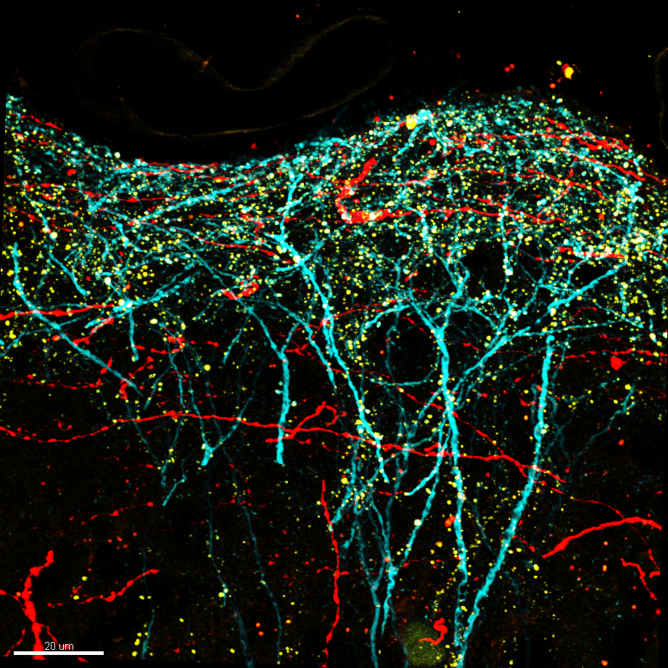

Confocal image of thalamocortical synapses onto pyramidal neurons in mouse somatosensory cortex. Layer 5 pyramidal neurons were genetically targeted for viral labeling with a cell fill (turquoise) and an excitatory synapse marker (yellow; PSD95-mCitrine), and axons from the posterior medial (POm) thalamus were labeled with the red fluorophore tdTomato.

The cortical column is composed of excitatory and inhibitory neurons that are quite stereotyped in identity. The analogy I use is this: if you scattered a bowl of jellybeans on a table and looked at them with a black-and-white camera, you would be able to see that some are darker and some are lighter. But they are all just shades of grey, and it looks like a continuum. If you had a colour camera, you’d be astonished to see that there is a mix of different colours and different identities. No one will mistake the popcorn jellybean for the raspberry jellybean.

Now that we know that there are these different cell types, we can start to look at how their stereotyped connections are altered. The cerebral cortex is a great place to do this. All of those different ‘colours’ – symbolising types of neurons – are intermixed, but in very specific ways. This provides a nice, reduced section of the brain carrying with it some common features that are recapitulated from one brain area to another – from visual cortex to tactile cortex to auditory cortex to association cortex.

What have been your key findings so far?

One question we wanted to answer was which synapses are altered first during learning, especially at its onset. You might think that all the synapses are slowly getting stronger. But actually, we found that some synapses are selectively strengthened very early on, and other synapses take longer. We don’t know why, but it’s clear that there is a sequence.

Confocal image of perirhinal synapses onto pyramidal neurons in mouse somatosensory cortex. Layer 5 pyramidal neurons were genetically targeted for viral labeling with a cell fill (turquoise) and an excitatory synapse marker (yellow; PSD95-mCitrine), and axons from perirhinal cortex were labeled with the red fluorophore tdTomato.

Our other major finding is that one of the earliest things to happen in synaptic change is a reduction in inhibition. That is interesting from a theoretical standpoint because if you decrease inhibition, you might allow an explosion of excitation – enabling the excitation to explore spaces and representations, then selectively strengthening those excitatory synapses. People have thought a lot about whether inhibition is regulated and whether that regulation is a necessary step in strengthening excitatory synapses. But finding evidence for it in a pathway-specific manner is important. Such evidence would also help you to constrain hypotheses about whether regulation of inhibitory synapses is directly or indirectly necessary.

What were you most surprised to find?

One of the most surprising results was that this disinhibition is super sensitive to the causal structure of the training environment. In life, we are constantly relating two things to each other: we figure out that there is a causal association between A and B. For example, if I were to drop a ball, you would see the ball fall, and you would hear the ball hit the ground. Every time, the ball would fall, then you would hear the sound, and you would conclude that the falling ball is causing the sound.

If sometimes you drop the ball, but you don’t hear a sound, it would start to erode that causal association. We know that intuitively, but the brain actually pays attention to whether these two events are sometimes or always associated. What we found was that the statistics of that association – is A always linked to B, or is A only sometimes linked to B – were directly regulating the strength of inhibitory synapses.

So, if we eroded that association – so that A sometimes leads to B, but sometimes you see B without A, and sometimes A without B – we didn’t drive the same change in inhibition. That suggests that somewhere in the brain, there is a system that detects the consistent association and then enables plasticity at those synapses. That sensitivity to the statistical structure of the environment is fascinating. These changes only happen when there is something causal to be learned. If that causality is unclear, these changes are not likely to happen.

Another interesting finding is that these changes don’t seem to be limited to the region of the brain that we were stimulating. They may be broader, but that is pretty new data – I’m still not entirely confident about the result. But I think it’s fascinating that there may be a very broad network implementing these changes.

Where does the idea of ‘superstition’ come in?

In the early stages of learning, you don’t know whether A and B are linked. In fact, it could be that A is linked to B, but it could be that X or Y is linked to B. You don’t know. Let’s say it’s your first day in London and you walk into the tube. There are a lot of people walking around and you’re trying to figure out what you need to do. You’re standing in the tube and someone yells at you. You may wonder if they’re yelling at you because you’re in the wrong place, or because you were not moving, or because they didn’t like the way you looked, or because they are in a bad mood. Maybe you have a cup of coffee, and they’re yelling because you’re not allowed to have coffee on the tube. You don’t know what the exact problem is. So you’re sensitive to what it could have been. Afterwards, you realise that they were yelling at you because there was someone in a wheelchair right behind you and you needed to step aside.

Your brain searches through all these different hypothetical scenarios to determine what the right variable is. In the early stages of learning, the brain is set up to explore all of this space. You see this in animals. When I was here at the SWC in 2020, a student from the Stephenson-Jones lab was talking about motor sequence learning – animals were trained to learn a sequence of movements. Every once in a while, an animal would add a movement in there, like a grooming movement. And if the sequence with that extra movement still led to the reward, that accessory, irrelevant movement would get stabilised in the motor sequence.

In the early stages of learning, you don’t know which parts of what you have done are critical. Sometimes you keep things in that repertoire because you think they might be important. The Lionesses won on Wednesday night. Was it the lucky flag you were carrying, the face paint you were wearing, the beer you were drinking, or the pub you went to? What was it that helped England win? Sports fans are famous for their rituals and superstitions; they are searching for what was causal. All of that search space is where superstition comes from.

What implications could your findings have for humans?

We’re very interested in whether some of the principles that we find could be used to leverage how humans learn things. Can we put the brain into a state where it’s primed to learn better? I think that’s super interesting. There are some things that are very difficult to learn, but they might be easier to learn in a new environment, for example. Then, you could change the environment. I think this is especially relevant at a time when we are doing lots of online learning and having Zoom interactions. If being in a new spatial environment actually helps prime the brain to absorb material, that would be powerful.

We might also prime students, for example, by training them in one task. It could be something physical and a bit complicated, like athletic exercise – go outside, perform a physical routine, dance, do jumping jacks, or the like – then bring them into the classroom to teach them something more complicated. The brain is now primed and ready to learn.

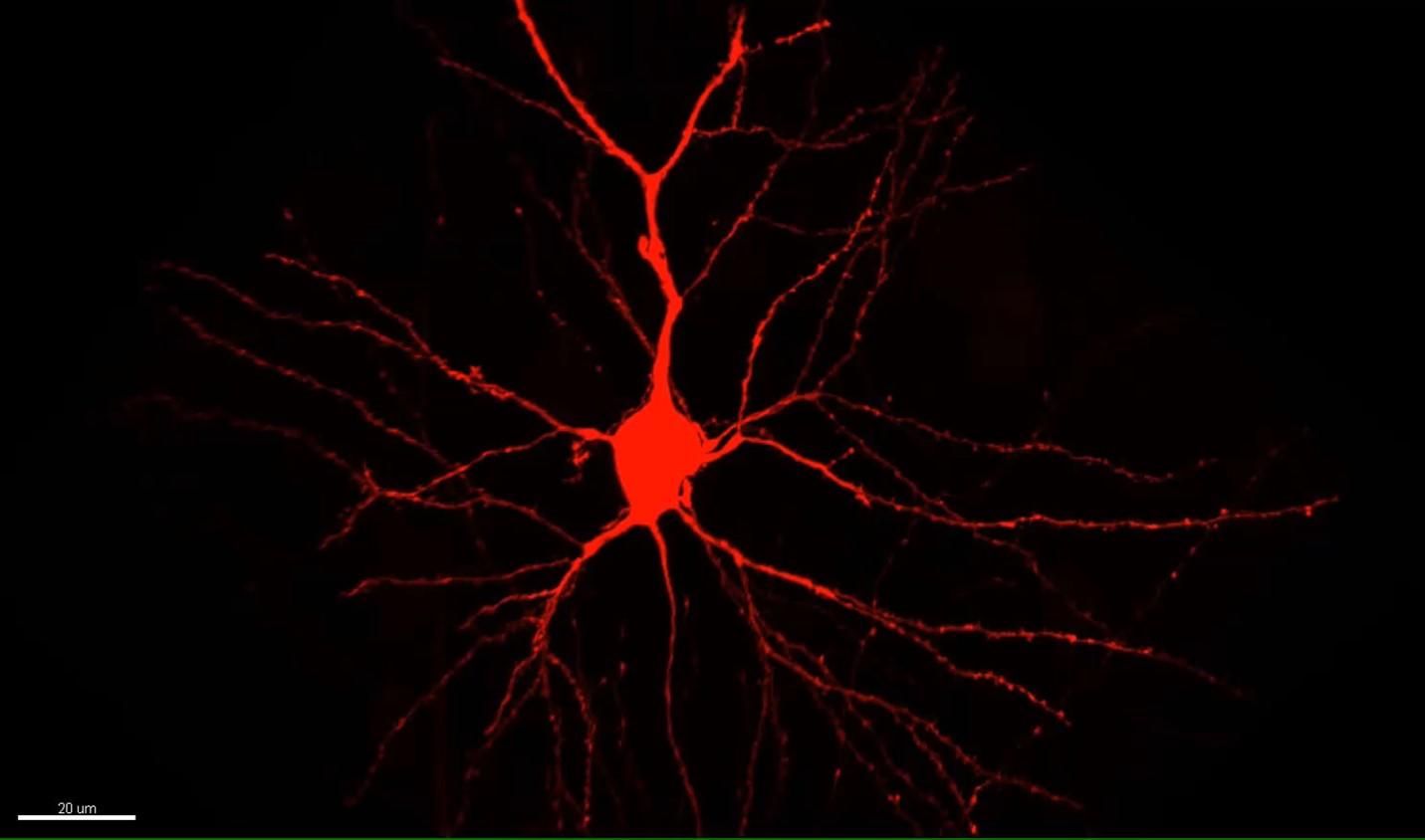

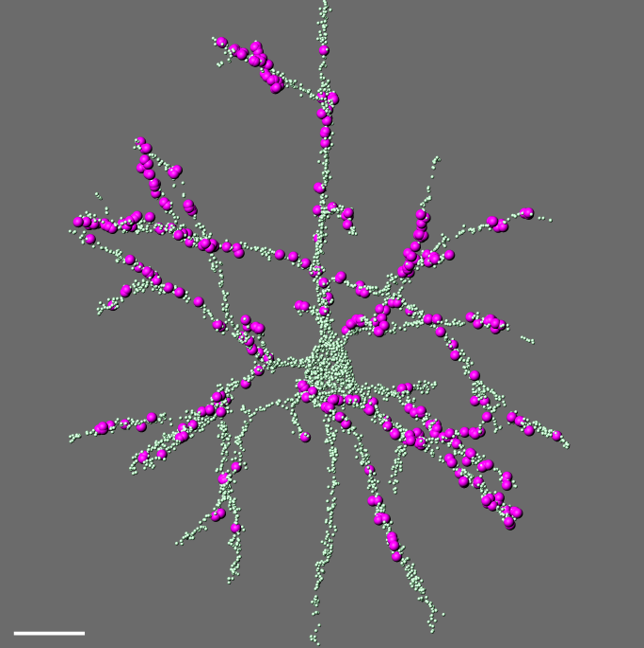

Comprehensive digital reconstruction of thalamocortical synapses onto a layer 5 pyramidal neuron in mouse somatosensory cortex. All synapses are labeled in green; thalamocortical contacts from the posterior medial (POm) nucleus in magenta.

What are you most excited to work on in the future?

We made a gamble that by trying to understand the brain from the bottom up, constructing what the neurons were calculating, and looking at their synaptic inputs and outputs, we’d be able to generate new hypotheses about function. This ‘constructivist’ approach is quite different from looking at the activity of thousands of neurons and trying to infer what those neurons are doing. That’s a top-down approach, where you collect a lot of neural activity, then try to make an inference about what’s going on. What we’re doing is different – we’re looking at synapses, seeing how they’re changing, then making inferences based on that to understand how the output of the network, the firing of neurons, will be different.

The fact that we can find pathway-specific changes in synaptic function validates the constructivist approach as a way to understand how the brain is receiving and interpreting information, and adapting to information in the external world. It’s tantalising to be able to collect a lot of neural activity, but I think it’s critical to know which neurons you’re recording from. There are so many different subtypes of neurons that if you just blindly record from cells, it may be very difficult to extract that information. I think a lot of the changes we will see in neural activity are probably related to brain state modulation and not to the specific engram for learning something.

About Professor Alison Barth

Alison Barth received her Ph.D. in Cell and Molecular Neuroscience at UC Berkeley and was a postdoctoral fellow in neurophysiology at Stanford. She joined the Department of Biological Sciences at Carnegie Mellon in 2002 and is now a Professor in Biological Sciences, Biomedical Engineering, and the Neuroscience Institute. She served as the founding director of Carnegie Mellon’s neuroscience initiative from 2015 to 2018. Her research focuses on cellular and synaptic changes that occur in the mammalian neocortex during learning, using both electrophysiological recordings and quantitative anatomical measurements to examine cell-type and pathway-specific changes. Barth is the recipient of multiple awards, including a McKnight Award, the Sloan Foundation Award, the New Innovator Award from the Society for Neuroscience, and the Humboldt Foundation Friedrich Wilhelm Bessel Award as a visiting faculty member in Berlin for 2010, and she was awarded the Leverhulme Visiting Professorship at University College London for 2020. She holds a patent for the fosGFP transgenic mouse and is the inventor of multiple other technologies for neuroscience research and disease treatments.