The cellular logic of sensory and motor circuits underlying vision-based navigation

Margrie Lab

Research Area

One of the most compelling aspects of brain function is its ability to simultaneously stream different types of sensory information and combine these to form a coherent percept of the world around us. During locomotion, for example, the brain’s representations of external information must also be integrated with internal motion signals that are reported by its accelerometer, the vestibular organ. Therefore, as we successfully navigate through the world, motor, vestibular and other sensory representations are being continually updated so that our internal cognitive model may be used to assess the environment to make decisions and perform appropriate actions, many of which are highly flexible and context-specific.

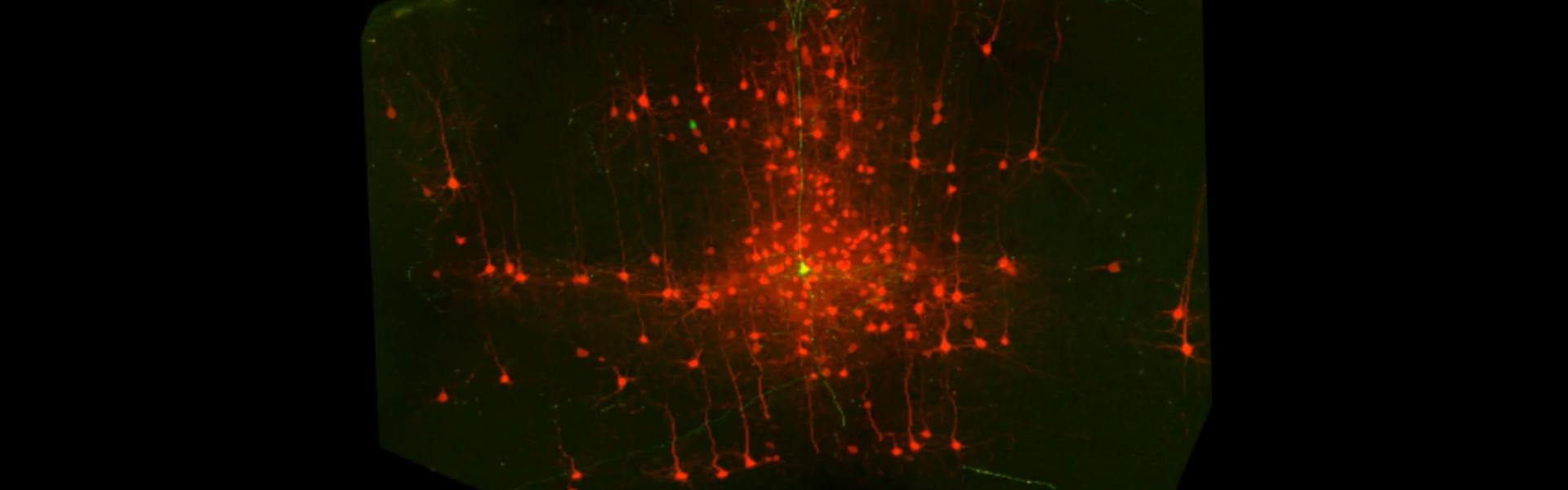

This is not a simple computational process since it is reliant on large-scale network interconnectivity and global integration within and between many functionally discrete areas. Our lab is therefore dedicated to mapping, with single-cell resolution, the connectivity and function of these circuits. We employ almost all available technical approaches, including many developed in our lab and through collaboration. These include 3D electron microscopic analysis, in vitro and in vivo intracellular and extracellular recordings, whole-brain viral tracing and circuit mapping, in vivo imaging and optogenetics, modelling, and behaviour. Our lab works almost exclusively on mice, which offer a tractable experimental system for establishing causal relationships between the functional connectivity of mammalian brain circuits and behaviour.

Research Topics

Egocentric sensory representations

One critical problem that the brain must solve involves the binding of sensory objects in the external world to an egocentric (i.e. head-centred) reference frame. That is, the brain must be able to discern which elements of, for example, apparent visual motion in the outside world might be caused by translation of the head (and eyes) during self-motion. Because the retrosplenial (RSP) cortex is anatomically connected with structures such as the hippocampal formation and the primary visual cortex (VISp), and because it contains many different functional cell types (e.g. angular head velocity and head direction neurons), it is believed to play a fundamental role in solving this problem. One objective of our lab is to better understand how the RSP-VISp circuitry combines head motion information with external visual motion signals to generate a coherent percept of visual motion during ecologically relevant episodes of locomotion and navigation.

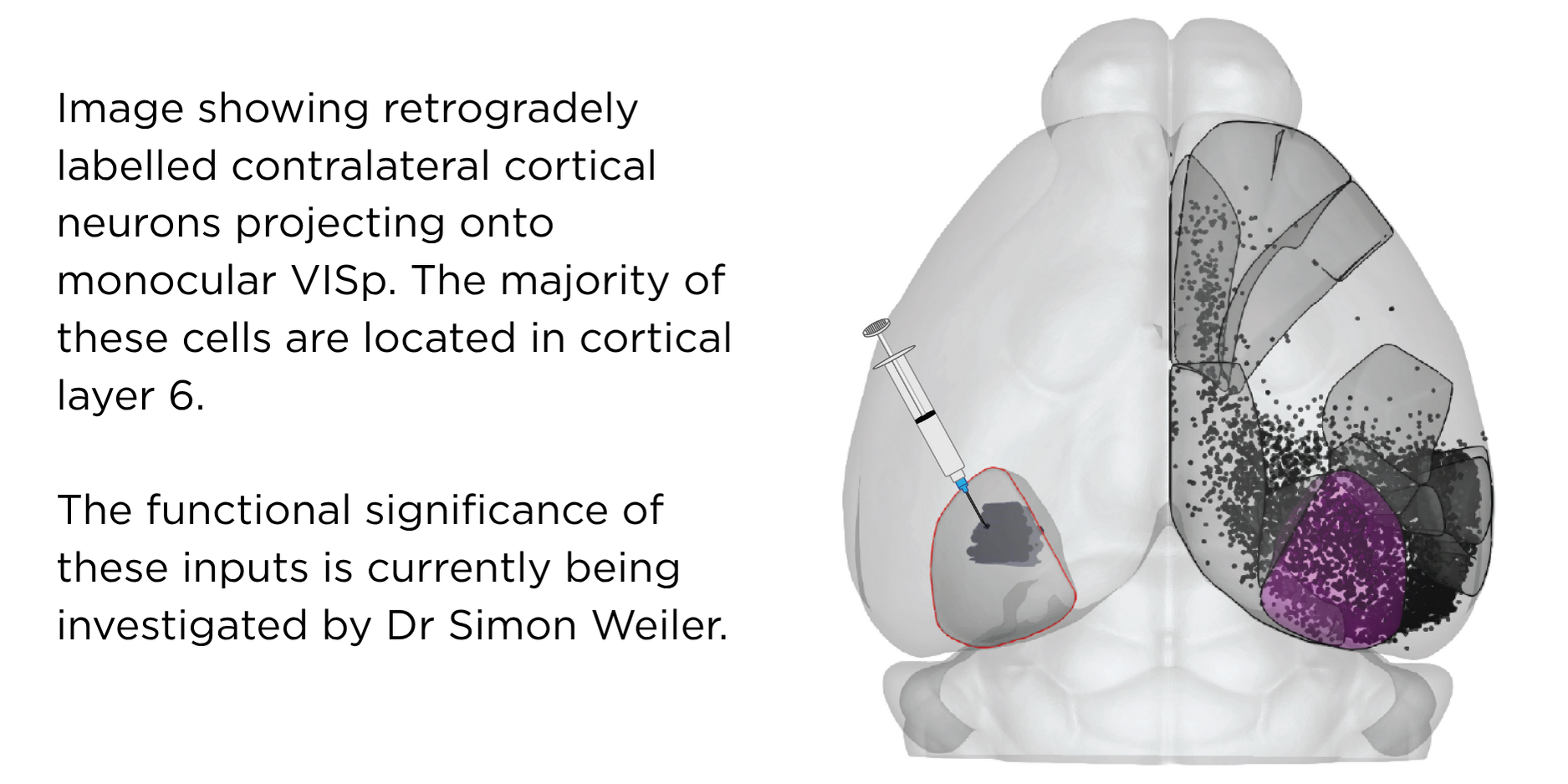

Interhemispheric sensory representations and storage

One general question the lab focuses on is how sensory stimuli (e.g. vestibular, visual objects, olfactory cues) are accurately represented by downstream cortical cells and circuits. We are at present particularly interested in how visual cortical networks composed of cells located in different cortical layers, areas and hemispheres process visual and non-visual signals to form coherent representations of visual flow information. Recent work in our lab has established that L6 cells play a significant role in relaying these signals not only within cortical columns but also between major sensory and motor cortical areas, including across the two hemispheres. In collaboration with the Harris lab, we are also interested in understanding how sensory representations in different areas and layers within the cortex may be stored (e.g. during sleep) so that they may be accessed for future decision-making.

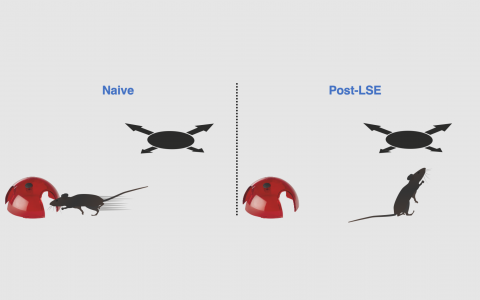

Flexible decision-making underlying naturalistic behaviour

Sensory information is used to guide behaviour. Our lab has developed several behavioural paradigms for assessing, for example, visual and vestibular sensory integration and perception, which are necessary for understanding how information is combined to achieve optimal behavioural performance. We are also interested in how the life and sensory history of an animal influence future actions, a process that likely involves diverse brain areas beyond the cortex such as the striatum and the substantia nigra. To study this we use visually guided escape behaviour, which has been shown to be highly adaptive, depending on the recent social, sensory and threat escape history of the mouse. Some of this work is carried out in collaboration with the Stephenson-Jones Lab at SWC, focusing on the role of basal ganglia circuits.

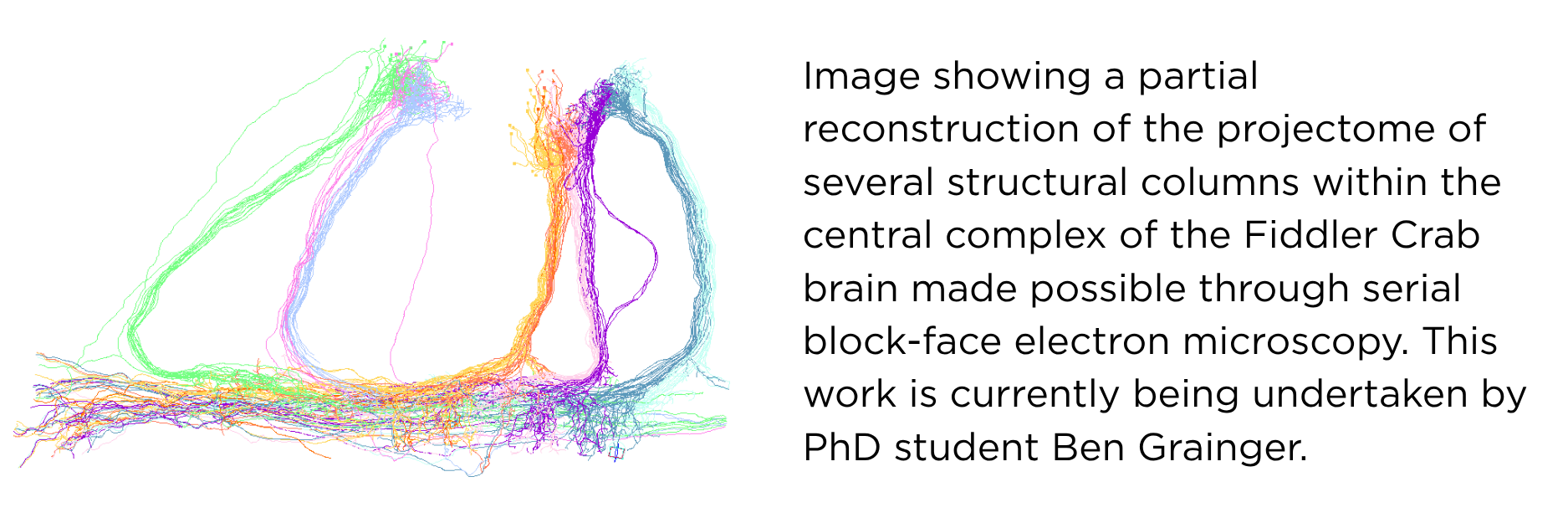

A new model system for understanding fundamental elements of navigation

Their impressive genetic toolkits have made the fly and the mouse the most common models in systems neuroscience for experimentally unlocking circuit design and brain function. However, interpreting the naturalistic behaviours of a mouse, whose nervous system is orders of magnitude more complex than that of a fly, is often not straightforward. This is particularly relevant for naturalistic behaviours such as exploration and navigation where, in the absence of an overt action, an external observer cannot know where, at any given moment, a mouse might think it is located or heading in space.

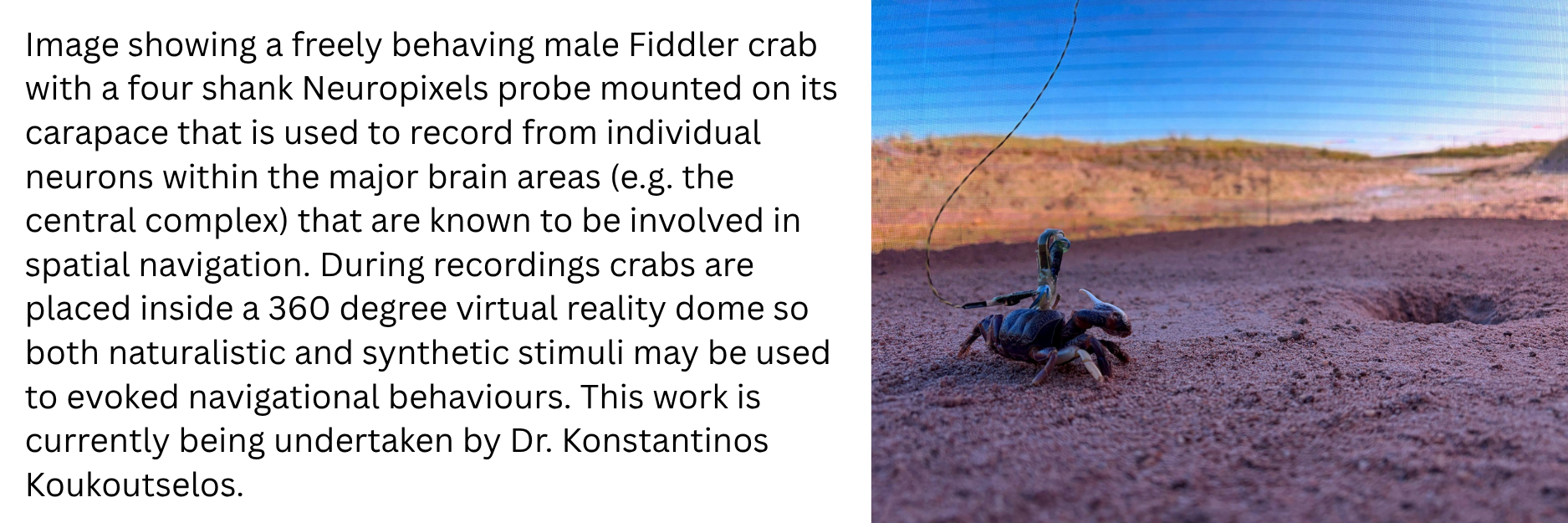

The Fiddler crab (Uca tangeri), on the other hand, is a semi-terrestrial crustacean that lives a burrow-centric existence. During low tide and feeding, for example, these crabs are known to keep their horizontal body axis aligned with the direction of their burrow, and this ongoing re-orienting as the crab canvasses the shoreline does not require direct visual contact with the burrow. Indeed, several elegant experimental field studies have shown that Fiddler crabs use local landmarks to update their angle and position, and estimate the distance they have travelled from idiothetic (self-generated) cues by integrating steps. These elements are believed to be fundamental to successful navigation. In collaboration with the Branco Lab at SWC we have successfully created the world’s first in-lab “Crabitat”, designed to house large numbers of individuals for experimental in vivo research. Importantly, we have found that Fiddler crabs continue to exhibit many naturalistic behaviours, including runs to burrows, while carrying experimental recording devices such as mini two-photon microscopes on their carapace. Since the nervous system of the Fiddler crab is very similar to that of Drosophila, it offers a relatively simple neuroanatomical system that can support recordings in freely moving individuals that exhibit behavioural readouts of internally generated percepts of spatial location, heading direction and distance to goal. We have recently established in vivo Neuropixels recordings in freely moving crabs, and the projectome and genome are now under construction.
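
The idea of estimating distance and direction to the burrow by integrating steps can be illustrated with a minimal path-integration (dead-reckoning) sketch. This is purely illustrative and is not the lab's analysis code or a model of the crab's neural computation; the function name `home_vector`, the step format (heading in radians, step length in arbitrary units) and the example excursion are all assumptions made for this sketch.

```python
import math


def home_vector(steps):
    """Integrate (heading_rad, step_length) pairs into a home vector.

    Returns (distance_to_start, bearing_to_start_rad), i.e. how far the
    starting point (the hypothetical burrow) is and in which direction it lies.
    """
    x = y = 0.0
    for heading, length in steps:
        # Accumulate each step's displacement in a fixed world frame.
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    distance = math.hypot(x, y)
    # The home vector points from the current position back to the start.
    bearing = math.atan2(-y, -x)
    return distance, bearing


# Hypothetical excursion: 3 units along heading 0, then 4 units along heading pi/2.
excursion = [(0.0, 3.0), (math.pi / 2, 4.0)]
distance, bearing = home_vector(excursion)
print(f"distance to burrow: {distance:.2f}")
print(f"bearing to burrow: {math.degrees(bearing):.1f} deg")
```

Because only a running sum of displacements is kept, the estimate can be updated at every step without visual contact with the start point, although in a real animal such an estimate would accumulate error unless corrected by cues such as landmarks.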

Our Method Developments (past 25 years)

Many of the most challenging research questions require the development of new methodological approaches or the refinement of existing ones. Historically, new methods have almost always delivered new insight and discovery. Our team-based collaborative approach has resulted in the development of several novel methods and applications that, in some cases, have become field-defining tools.

Bimbard et al (2025) An adaptable, reusable, and light implant for chronic Neuropixels probes. eLife. Feb 18;13:RP98522.

Cloves M and Margrie TW (2024) In vivo dual-plane 3-photon microscopy: spanning the depth of the mouse neocortex. Biomed Optics Express Nov 26;15(12):7022-7034.

Weiler S, Velez-Fort M and Margrie TW (2024) Overcoming off-target optical stimulation-evoked cortical activity in the mouse brain in vivo. iScience Oct 15;27(11):111152.

*Tyson et al (2022) Accurate determination of marker location within whole-brain microscopy images. Scientific Reports 12:867.

*Claudi et al (2021) Visualizing anatomically registered data with brainrender. eLife Mar 19;10:e65751.

*Tyson et al (2021) A deep learning algorithm for 3D cell detection in whole mouse brain image datasets. PLoS Computational Biology May 28;17(5):e1009074.

*Claudi et al (2020) BrainGlobe Atlas API: a common interface for neuroanatomical atlases. Journal of Open Source Software 5:2668.

Schwarz et al (2018) Architecture of a mammalian glomerular domain revealed by novel volume electroporation using nanoengineered microelectrodes. Nature Communications. Jan 12;9(1):183.

*Niedworok et al (2016) aMAP is a validated pipeline for registration and segmentation of high-resolution mouse brain data. Nature Communications 7:11879.

Rancz et al (2011) Transfection via whole-cell recordings in vivo: Bridging single-cell physiology, genetics and connectomics. Nature Neuroscience 14(4):527-532.

Komai S, Denk W, Osten P, Brecht M and Margrie TW (2006) Two-photon targeted patching in vivo. Nature Protocols 1(2):647-652.

Margrie TW et al (2003) Targeted whole-cell recordings in the mammalian brain in vivo. Neuron 39:11-20.

Margrie TW, Brecht M and Sakmann B. (2002) Whole-cell recordings from neurons of the anaesthetized and awake mammalian brain in vivo. European Journal of Physiology-Pflügers Archiv 444(4):491-498.

BrainGlobe

Current and former members of the lab have developed several experimental and computational neuroanatomical tools* that gave rise to The BrainGlobe Initiative (BGI). Today, BGI is considered by our peers to reflect the very best of open, collaborative and field-defining scientific innovation. Over recent years more than 100 scientists and data analysts, ranging from high school students to professors, from more than 70 institutions have contributed to its development. The central team, now based within The Neuroinformatics Unit at SWC, continues to work to make the tools as accessible as possible to different fields across developmental and systems neuroscience. BGI now provides more than 190 brain atlases from 13 different species including mice, rats, zebrafish, birds, bees and, very soon, the Fiddler crab. With thousands of users worldwide, BGI tools have been downloaded more than 3 million times. We and the broader BGI network are especially grateful for core funding from The MRC (former UK National Institute for Medical Research), Wellcome and the Gatsby Charitable Foundation (Sainsbury Wellcome Centre) and grants from The Chan Zuckerberg Initiative. In 2025, BGI received The Neuro-Irv and Helga Cooper Foundation International Award for Open Science.